Ethical artificial intelligence in pharmacovigilance represents the intersection of cutting-edge technology and fundamental human values—ensuring that AI systems deployed to protect patient safety operate with fairness, transparency, accountability, and respect for individual rights. As AI increasingly automates critical decisions in adverse event detection, causality assessment, and safety signal prioritization, the question is no longer whether AI should be used, but rather how to ensure its deployment aligns with ethical principles that safeguard vulnerable populations and maintain public trust in drug safety systems.

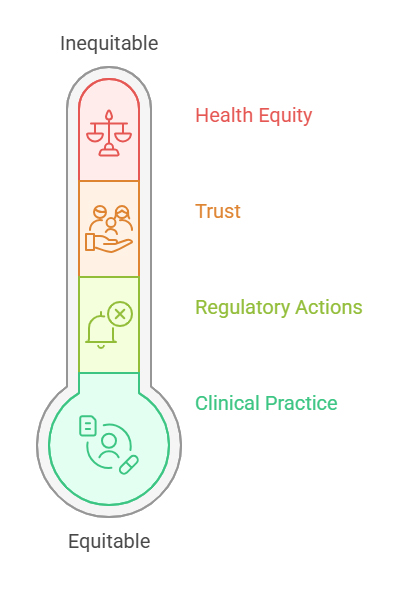

The stakes are extraordinarily high. Most AI algorithms require large training datasets, yet several population groups are absent from or misrepresented in existing biomedical datasets. When training data do not reflect population variability, AI systems are prone to reinforcing bias, which can lead to misdiagnoses, poor generalization, and even fatal outcomes. This challenge is acute in pharmacovigilance, where adverse event databases have historically underrepresented specific demographic groups: women, racial and ethnic minorities, pediatric populations, and patients from low- and middle-income countries.

Recent evidence quantifies the scope of algorithmic bias in healthcare AI systems. Many datasets used to train AI models for clinical tasks overrepresent non-Hispanic white patients relative to the general population, and more than half of all published clinical AI models rely on datasets from either the United States or China. When algorithms trained on such imbalanced datasets are deployed for pharmacovigilance, they can systematically underestimate adverse event risks for underrepresented populations. With few examples from those groups, a model tends to approximate mean trends to avoid overfitting, forgoing informative predictions for underrepresented groups, especially in outlier cases.

The World Health Organization estimates that social determinants of health account for between 30% and 55% of health outcomes, yet these same factors can become sources of algorithmic bias when included in AI tools without careful ethical oversight. Algorithms may predict lower health risks for populations that have historically had less access to healthcare services, not because they are healthier, but because their healthcare use is less documented. In pharmacovigilance, this means AI systems might systematically fail to flag safety signals in communities already marginalized by healthcare inequities, compounding existing disparities rather than reducing them.

The governance challenge is equally urgent. According to a 2024 OECD report, the value of AI in healthcare, estimated in the tens of billions of dollars, is projected to reach $188 billion, growth that has occurred largely without systematic regulation or oversight. This rapid, unregulated expansion has prompted coordinated international responses. The EU AI Act, which entered into force in August 2024, classifies many healthcare AI systems as “high-risk” and imposes strict requirements on them for risk management, data governance, human oversight, and nondiscrimination; pharmacovigilance systems that fall into this category must meet the same bar. Similarly, the FDA’s January 2025 draft guidance on AI credibility assessment sets out transparency and accountability expectations for AI systems that support regulatory decisions about drug safety.

Ethical AI in pharmacovigilance is not an abstract aspiration—it’s an operational imperative enforced through regulatory frameworks, validated through empirical evidence, and measured by its impact on health equity. This blog examines how ethical principles translate into practice, why governance frameworks matter, and what pharmaceutical companies, contract research organizations, and regulators must do to ensure AI serves all patients fairly.

Bias and Fairness: How Data Imbalance Impacts Safety Signal Detection

Bias in AI-driven pharmacovigilance manifests at multiple stages—from data collection and feature selection to model training, validation, and deployment. Understanding these mechanisms is essential for implementing effective mitigation strategies.

Adverse event databases like FAERS (FDA Adverse Event Reporting System), EudraVigilance, and VigiBase aggregate reports from clinicians, patients, and pharmaceutical companies. However, reporting patterns reflect systemic healthcare inequities:

Underreporting from Marginalized Communities: Patients from minority ethnic groups, those with limited healthcare access, non-English speakers, and individuals in rural or low-resource settings are less likely to have adverse events documented or reported to pharmacovigilance systems. When AI models train on this incomplete data, they learn to associate adverse events primarily with populations that report most frequently—typically well-resourced healthcare systems serving predominantly white, urban populations.

Measurement Bias in Clinical Data: Electronic health records and claims databases, which are increasingly used as data sources for AI-powered pharmacovigilance, exhibit differential data quality across demographic groups. Clinical parameters may be measured less frequently, or with different diagnostic criteria, for certain populations. For instance, pulse oximeters can overestimate oxygen saturation in patients with darker skin, so hypoxemia may go undetected; a measurement bias of this kind can propagate through any AI system that relies on those clinical indicators.

Label Bias in Training Data: Causality assessments (whether an adverse event is attributable to a drug) made historically by human reviewers may themselves contain implicit biases. When these historical assessments become ground-truth labels for AI training, the algorithms can perpetuate and even amplify those biases. Combined with imbalanced training data, this can lead to worse performance and systematic underestimation for underrepresented groups.

The consequences of biased AI in pharmacovigilance are not theoretical. Consider several failure modes:

Differential Signal Detection: An AI model trained predominantly on adverse event reports from North American and European populations may fail to detect safety signals that manifest differently in Asian, African, or Latin American populations due to genetic polymorphisms affecting drug metabolism, different background disease prevalence, or distinct patterns of polypharmacy and drug-drug interactions.

Severity Misclassification: AI systems that automate seriousness assessments (whether an adverse event meets regulatory criteria for “serious”—death, hospitalization, disability, etc.) may systematically underestimate severity for demographic groups underrepresented in training data. This creates two compounding harms: the AI fails to prioritize these cases for urgent review, and aggregate safety signal detection algorithms miss patterns because individual cases are incorrectly categorized.

False Negative Rates: Most critically, biased AI increases false negative rates (missed safety signals) for vulnerable populations. Evidence from clinical AI outside pharmacovigilance shows, in measurable terms, how such systems can fail, with disproportionate harm to women and ethnic minorities. A University of Florida study found that an AI tool for diagnosing bacterial vaginosis was most accurate for white women and least accurate for Asian women, while Hispanic women received the most false positives. Similarly, skin cancer detection algorithms trained predominantly on images of light-skinned individuals show significantly lower diagnostic accuracy for patients with darker skin, a critical failure given that Black patients already have lower melanoma survival rates than white patients.

In pharmacovigilance, equivalent failures mean drugs may remain on the market despite causing serious adverse reactions in specific populations—reactions that AI-driven surveillance systems failed to detect because training data didn’t adequately represent those groups.

The insidious nature of AI bias in pharmacovigilance is its self-reinforcing cycle. When AI systems systematically underdetect safety signals in marginalized populations, fewer signals are confirmed for those groups, their data remain sparse, and the next generation of models inherits the same blind spots.

Without statistically or clinically meaningful predictions for certain groups, any downstream clinical benefits of an AI model are limited to the largest groups with sufficient data. The resulting benefits and clinical improvements are similarly constrained, perpetuating healthcare disparities.

Achieving fairness in pharmacovigilance AI requires proactive measures throughout the AI lifecycle:

Diversify Training Data: Actively recruit adverse event reports from underrepresented populations through targeted outreach, multilingual reporting interfaces, and partnerships with healthcare systems serving diverse communities. Apply statistical techniques such as random oversampling or the Synthetic Minority Over-sampling Technique (SMOTE) to address class imbalances, and consider transfer learning when data for a subgroup are too sparse to train on directly.
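To make the oversampling idea concrete, here is a minimal, dependency-free sketch of the core SMOTE step: each synthetic case is placed at a random point on the line segment between a minority-class case and one of its k nearest minority neighbors. The two numeric features (age and number of concomitant drugs) and the function name `smote` are illustrative assumptions; production pipelines would normally use a maintained implementation such as the `SMOTE` class in the imbalanced-learn library, and categorical features need a variant such as SMOTE-NC.

```python
import random
from math import dist

def smote(minority, n_synthetic, k=3, seed=None):
    """Generate `n_synthetic` synthetic minority samples.

    Each sample lies at a random point on the segment between a randomly chosen
    minority sample and one of its k nearest minority neighbors (Euclidean distance)."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_synthetic):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: dist(p, base))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # 0 <= gap < 1: how far to move from base toward neighbor
        synthetic.append(tuple(b + gap * (nb - b) for b, nb in zip(base, neighbor)))
    return synthetic

if __name__ == "__main__":
    # Hypothetical minority-subgroup cases as (age, number of concomitant drugs).
    minority = [(78.0, 6.0), (81.0, 9.0), (76.0, 7.0), (88.0, 11.0)]
    for case in smote(minority, n_synthetic=3, k=2, seed=1):
        print(case)
```

Oversampling should be applied only to the training split; synthetic cases that leak into validation data would inflate subgroup performance estimates.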

Implement Fairness Metrics: Beyond overall accuracy, evaluate AI model performance across demographic subgroups using metrics like disparate impact ratios, equalized odds, and calibration by subgroup. Establish minimum acceptable performance thresholds for all populations, not just aggregate performance.
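The subgroup metrics named above are straightforward to compute once predictions are broken out by group. The sketch below assumes binary labels where 1 means a case is truly serious (or a true signal) and the model flags it as such; the helper names, the age-band labels, and the 0.8 minimum true-positive rate in the usage are hypothetical choices, not regulatory thresholds.

```python
from collections import defaultdict
from math import isnan

def subgroup_metrics(y_true, y_pred, groups):
    """Selection rate, true-positive rate (TPR) and false-positive rate (FPR) per subgroup."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth:
            counts[group]["tp" if pred else "fn"] += 1
        else:
            counts[group]["fp" if pred else "tn"] += 1
    metrics = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["fp"] + c["tn"]
        metrics[group] = {
            "selection_rate": (c["tp"] + c["fp"]) / (positives + negatives),
            "tpr": c["tp"] / positives if positives else float("nan"),
            "fpr": c["fp"] / negatives if negatives else float("nan"),
        }
    return metrics

def disparate_impact_ratio(metrics, group, reference):
    """Selection rate of `group` divided by that of `reference` (1.0 = parity)."""
    return metrics[group]["selection_rate"] / metrics[reference]["selection_rate"]

def equalized_odds_gap(metrics):
    """Largest between-group gap in TPR or FPR; 0.0 means equalized odds holds exactly."""
    gaps = []
    for key in ("tpr", "fpr"):
        values = [m[key] for m in metrics.values() if not isnan(m[key])]
        if values:
            gaps.append(max(values) - min(values))
    return max(gaps, default=0.0)

def groups_below_min_tpr(metrics, min_tpr):
    """Subgroups whose sensitivity falls below the agreed minimum."""
    return sorted(g for g, m in metrics.items() if not isnan(m["tpr"]) and m["tpr"] < min_tpr)

if __name__ == "__main__":
    # Hypothetical predictions for two age bands.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["<75"] * 4 + ["75+"] * 4
    m = subgroup_metrics(y_true, y_pred, groups)
    print(disparate_impact_ratio(m, "75+", "<75"))
    print(equalized_odds_gap(m))
    print(groups_below_min_tpr(m, min_tpr=0.8))
```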

Conduct Bias Audits: Regularly test AI systems for bias using standardized protocols. The CIOMS Working Group XIV recommends risk assessments that explicitly evaluate whether AI systems introduce or perpetuate discriminatory outcomes.

Apply Algorithmic Fairness Techniques: Utilize pre-processing methods (reweighting training data), in-processing methods (incorporating fairness constraints into model optimization), or post-processing methods (adjusting decision thresholds by subgroup) to mitigate detected biases.
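As an example of the pre-processing route, the reweighing scheme described by Kamiran and Calders gives each training example the weight w(a, y) = P(A=a) * P(Y=y) / P(A=a, Y=y), where A is the subgroup and Y the label, so that subgroup and label become independent in the weighted data. This minimal sketch assumes every (subgroup, label) combination appears at least once; the subgroup names and labels are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Pre-processing reweighing (after Kamiran and Calders):
    w(a, y) = P(A=a) * P(Y=y) / P(A=a, Y=y).
    Provided every (a, y) combination occurs, A and Y are independent under these weights."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[a] / n) * (label_counts[y] / n) / (joint_counts[(a, y)] / n)
        for a, y in zip(groups, labels)
    ]

def weighted_positive_rate(weights, groups, labels, group):
    """Share of weighted examples in `group` that carry label 1."""
    num = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    den = sum(w for w, g in zip(weights, groups) if g == group)
    return num / den

if __name__ == "__main__":
    # Hypothetical training labels (1 = "related") for two subgroups.
    groups = ["<75", "<75", "<75", "75+", "75+", "75+"]
    labels = [1, 1, 0, 1, 0, 0]
    weights = reweighing_weights(groups, labels)
    print(weights)
    print(weighted_positive_rate(weights, groups, labels, "<75"),
          weighted_positive_rate(weights, groups, labels, "75+"))
```

The weights can then be passed to any learner that accepts per-sample weights, for example through the `sample_weight` argument of scikit-learn’s `LogisticRegression.fit`.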

Human Oversight for High-Stakes Decisions: The EU AI Act requires that high-risk AI systems be subject to appropriate human oversight, with practical models such as human-in-the-loop, human-on-the-loop, and human-in-command. For pharmacovigilance decisions with direct patient safety implications, human experts should validate AI recommendations, particularly for cases involving underrepresented populations or edge cases.
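In practice, a human-in-the-loop policy ends up as a routing rule. The sketch below sends a case to a medical reviewer whenever the model predicts seriousness, is not confident, or is scoring a case from a subgroup the organization has flagged as underrepresented. The `CaseAssessment` fields, the 0.9 confidence threshold, and the subgroup labels are hypothetical; real criteria would come from the task’s own risk assessment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CaseAssessment:
    case_id: str
    predicted_serious: bool
    confidence: float  # model probability for its own prediction, 0.0-1.0
    subgroup: str      # e.g. an age band or region label

def review_route(case, min_confidence=0.9, underrepresented=frozenset()):
    """Return ("human_review", reasons) if any escalation rule fires, else ("auto_accept", [])."""
    reasons = []
    if case.predicted_serious:
        reasons.append("predicted serious")
    if case.confidence < min_confidence:
        reasons.append(f"confidence {case.confidence:.2f} below {min_confidence:.2f}")
    if case.subgroup in underrepresented:
        reasons.append(f"underrepresented subgroup: {case.subgroup}")
    return ("human_review", reasons) if reasons else ("auto_accept", [])

if __name__ == "__main__":
    flagged = frozenset({"75+", "pediatric"})
    cases = [
        CaseAssessment("C-001", False, 0.97, "18-64"),
        CaseAssessment("C-002", False, 0.97, "75+"),
        CaseAssessment("C-003", True, 0.99, "18-64"),
        CaseAssessment("C-004", False, 0.62, "18-64"),
    ]
    for case in cases:
        print(case.case_id, *review_route(case, underrepresented=flagged))
```

Returning the reasons alongside the route keeps the escalation auditable: a reviewer can see why a case reached them.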

Fairness is not a one-time achievement but an ongoing commitment requiring continuous monitoring, iterative refinement, and organizational accountability.

Transparency and accountability form the operational backbone of ethical AI in pharmacovigilance, translating abstract principles into concrete practices that support regulatory compliance, clinical trust, and patient safety.

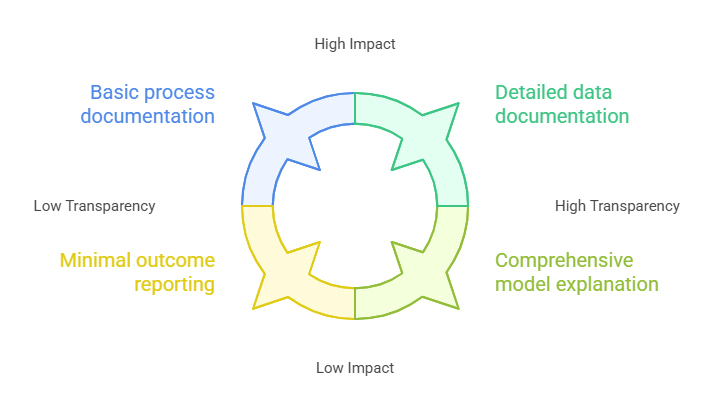

Transparency in pharmacovigilance AI operates at multiple levels:

Model Transparency: Stakeholders (medical reviewers, regulators, patients) must be able to understand how AI systems reach conclusions. The EMA’s Reflection Paper on the use of AI in the medicinal product lifecycle calls for active measures to keep bias out of AI/ML applications and to promote AI trustworthiness, and it states that AI/ML use in the medicinal product lifecycle must always comply with existing legal requirements, take ethics and its underlying principles into account, and respect fundamental rights.

Process Transparency: Organizations must document when and how AI is deployed in pharmacovigilance workflows. This includes specifying which tasks are automated, what human oversight mechanisms apply, and how AI outputs integrate with traditional pharmacovigilance processes. The CIOMS Working Group XIV emphasizes that declaring when and how AI solutions are used is critical for building trust among stakeholders.

Data Transparency: The provenance, quality, and representativeness of training data must be documented. Regulators increasingly require detailed information about data sources, preprocessing steps, inclusion/exclusion criteria, and demographic composition to assess whether AI systems can generalize appropriately to target populations.

Outcome Transparency: AI performance metrics—not just overall accuracy but fairness metrics, false positive/negative rates by subgroup, and edge case handling—should be transparently reported to stakeholders.

Explainability is often hailed as the “gold standard” for ensuring fairness, accountability, and the prevention of bias, yet the EMA’s AI Reflection Paper accepts that with some modeling architectures, including “black box” models, it will not always be possible to explain every aspect of a model’s behavior. Nevertheless, the trajectory is clear: regulatory acceptance increasingly depends on explainability.

Techniques like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) provide post-hoc interpretability for complex models, revealing which features most influenced a given prediction. For a pharmacovigilance AI system flagging a drug-adverse event pair as a potential safety signal, SHAP analysis might reveal the contribution of patient age, concomitant medications, temporal relationships, or specific adverse event characteristics. This enables medical reviewers to check whether the AI’s reasoning aligns with pharmacological plausibility.
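SHAP builds on Shapley values from cooperative game theory. For a model with only a handful of inputs they can be computed exactly, which makes the idea easy to inspect. The sketch below enumerates every feature coalition and treats “absent” features as taking a single baseline value; that is a simplification (the `shap` library instead works with background data and model-specific approximations), and the `signal_score` model and its three features are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(f, x, baseline):
    """Exact Shapley attributions for f at x; features outside a coalition take baseline values.
    Enumerates all 2**(n-1) coalitions per feature, so it is only practical for small n."""
    n = len(x)

    def value(coalition):
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def signal_score(z):
    """Hypothetical signal score from (age, concomitant drug count, onset within 7 days)."""
    age, n_conmeds, onset_within_week = z
    interaction = 0.05 * n_conmeds if age > 75 else 0.0
    return 0.01 * age + 0.2 * n_conmeds + 1.0 * onset_within_week + interaction

if __name__ == "__main__":
    x, baseline = [82, 7, 1], [50, 2, 0]
    phi = shapley_values(signal_score, x, baseline)
    print(dict(zip(["age", "n_conmeds", "onset_within_week"], phi)))
    # Efficiency property: attributions sum to f(x) - f(baseline).
    print(sum(phi), signal_score(x) - signal_score(baseline))
```

Because the attributions sum to the difference between the case’s score and the baseline score, a reviewer can see how much of a flag is driven by, say, the age-polypharmacy interaction rather than by a short time to onset.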

Attention mechanisms in natural language processing models, which are increasingly used to process unstructured adverse event narratives, can offer a degree of built-in explainability by highlighting which portions of text the model weighted most heavily when making a classification. Attention weights are not always faithful explanations of a model’s reasoning, so they are best treated as a diagnostic aid, but they can still help quality assurance teams identify when AI systems latch onto spurious correlations or when data quality issues drive predictions.

The EU AI Act mandates human oversight for high-risk AI systems, but the CIOMS Draft Report operationalizes this requirement with practical frameworks:

Human-in-the-Loop (HITL): AI provides recommendations, but a human expert reviews and approves each decision before action is taken. This model suits high-stakes pharmacovigilance tasks like causality assessment, where AI can accelerate analysis but medical judgment remains essential for final determination.

Human-on-the-Loop (HOTL): AI makes decisions autonomously within defined parameters, but human overseers monitor performance, intervene when necessary, and periodically audit outcomes. This model applies to tasks like adverse event report triage, where AI prioritizes cases for urgent review but human experts spot-check accuracy and investigate anomalies.

Human-in-Command (HIC): Humans retain ultimate authority and can override AI decisions at any time. AI operates as a decision support tool, not a decision-making agent. This model provides maximum accountability while leveraging AI’s capabilities for pattern recognition and efficiency gains.
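Under a human-on-the-loop model, oversight often takes the form of sampling autonomously processed cases for human quality control. One simple way to make that sampling fairness-aware is to stratify it and sample more heavily from strata where the model is known to be weaker. The function name, stratum labels, and rates below are hypothetical.

```python
import math
import random

def spot_check_sample(case_ids_by_stratum, rates, default_rate=0.05, seed=None):
    """Draw a random QC sample from each stratum of autonomously triaged cases.

    `rates` maps stratum -> sampling fraction; strata not listed use `default_rate`.
    Every non-empty stratum contributes at least one case, so small subgroups are never skipped."""
    rng = random.Random(seed)
    sample = {}
    for stratum, ids in case_ids_by_stratum.items():
        rate = rates.get(stratum, default_rate)
        k = min(len(ids), max(1, math.ceil(rate * len(ids)))) if ids else 0
        sample[stratum] = rng.sample(ids, k)
    return sample

if __name__ == "__main__":
    triaged = {
        "18-64": [f"C-{i:04d}" for i in range(400)],
        "75+": [f"E-{i:04d}" for i in range(40)],
    }
    qc = spot_check_sample(triaged, rates={"75+": 0.25}, seed=42)
    print({stratum: len(ids) for stratum, ids in qc.items()})
```

Fixing the seed makes a given QC draw reproducible, which helps when the sample itself has to be documented for an audit.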

The CIOMS Draft Report provides concrete examples of how life sciences companies can implement, monitor, and adapt oversight models over time, ensuring that human agency and accountability are maintained in line with both regulatory and ethical expectations.

The appropriate oversight model varies based on task-specific risk assessments. The FDA’s guidance emphasizes evaluating not only the reliability and credibility of an AI model but also assessing the human element charged with oversight—ensuring reviewers have adequate training, tools, and authority to exercise meaningful oversight rather than rubber-stamping AI outputs.

Comprehensive audit trails serve multiple functions in ethical AI pharmacovigilance:

Regulatory Inspections: When health authorities audit a pharmaceutical company’s pharmacovigilance system, they must be able to trace how AI-generated insights influenced safety decisions. This requires documentation of model versions, training data characteristics, validation results, and human review processes at the time specific decisions were made.

Root Cause Analysis: When AI systems fail—missing a safety signal, generating false positives that divert resources, or exhibiting bias—detailed audit trails enable investigation of what went wrong. Was it a data quality issue? Model drift as real-world data diverged from training distributions? Inadequate human oversight? Without robust documentation, systematic improvement becomes impossible.

Accountability Attribution: Clear audit trails establish who is responsible when errors occur. The current expectation to keep an audit history and detailed record of every change creates challenges, particularly for continuously learning AI systems. However, alternative assurance methodologies can demonstrate that AI is working as intended without creating unmanageable data storage burdens—for instance, through performance monitoring dashboards, automated fairness audits, and risk-based sampling rather than exhaustive logging.

Knowledge Management: As AI systems evolve through updates and retraining, audit trails preserve institutional knowledge about why specific design decisions were made, which populations required special attention, and what validation challenges were encountered—facilitating continuity as teams change and supporting organizational learning.
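One lightweight way to make an audit trail tamper-evident is to chain entries by hash, so that editing any past record invalidates every later one. The sketch below records the elements the regulatory-inspection paragraph above calls for (model version, AI output, human reviewer, final decision); the field names and helpers are hypothetical, and a hash chain only detects alteration, so a production system would also anchor or replicate the latest hash somewhere the log’s writer cannot modify (which also exposes truncation of the newest entries).

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64

def _digest(entry_without_hash):
    # Canonical JSON (sorted keys) so the same content always hashes identically.
    payload = json.dumps(entry_without_hash, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def append_entry(log, *, case_id, model_version, ai_output, reviewer, final_decision):
    """Append a hash-chained audit record for one AI-assisted decision."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "case_id": case_id,
        "model_version": model_version,
        "ai_output": ai_output,
        "reviewer": reviewer,
        "final_decision": final_decision,
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS_HASH,
    }
    entry["entry_hash"] = _digest(entry)
    log.append(entry)
    return entry

def verify_chain(log):
    """True if the chain is internally consistent (no entry altered, reordered, or deleted mid-chain)."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True

if __name__ == "__main__":
    log = []
    append_entry(log, case_id="ICSR-0001", model_version="causality-model 2.3.1",
                 ai_output={"causality": "possible", "score": 0.71},
                 reviewer="reviewer-17", final_decision="probable")
    append_entry(log, case_id="ICSR-0002", model_version="causality-model 2.3.1",
                 ai_output={"causality": "unlikely", "score": 0.12},
                 reviewer="reviewer-04", final_decision="unlikely")
    print(verify_chain(log))
    log[0]["final_decision"] = "unrelated"  # simulate tampering with a past record
    print(verify_chain(log))
```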

Technology alone cannot ensure ethical AI. Organizations must also cultivate cultures and governance structures that sustain it.

The CIOMS Draft Report recommends the establishment of cross-functional governance bodies, assignment of roles and responsibilities throughout the AI lifecycle, and regular review of compliance with guiding principles, providing tools such as a governance framework grid to help organizations document actions, manage change, and ensure traceability.

Transparency and accountability are not mere buzzwords—they are operational requirements that differentiate ethical AI implementations from reckless automation. They transform AI from a potential liability into a trustworthy partner in the mission to protect patient safety.

Compliance and Governance: OECD AI Principles and the EU AI Act

The global regulatory landscape for AI in pharmacovigilance is rapidly maturing, converging around core principles while allowing jurisdictional flexibility in implementation. Two frameworks stand out for their comprehensive scope and influence: the OECD AI Principles and the EU AI Act.

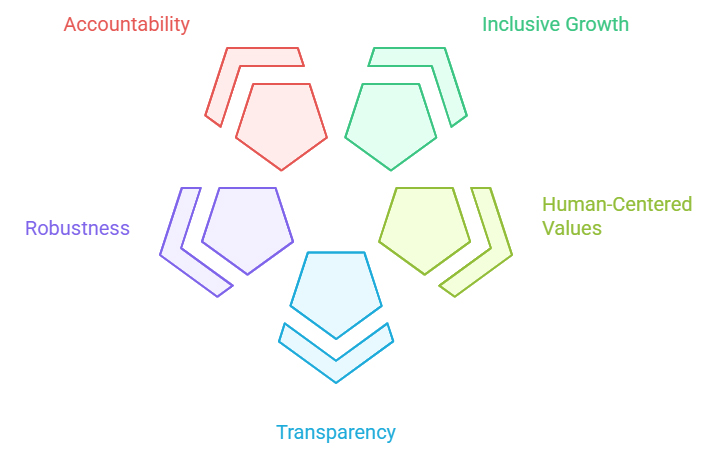

The Organization for Economic Co-operation and Development (OECD) established the first intergovernmental standard for AI in 2019, subsequently updated in 2024 to address general-purpose and generative AI. Now endorsed by 47 jurisdictions, including the European Union, the OECD AI Principles provide a universal blueprint for navigating AI’s complexities in policy frameworks globally.

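The five values-based principles for responsible stewardship of trustworthy AI are: inclusive growth, sustainable development and well-being; respect for the rule of law, human rights and democratic values, including fairness and privacy; transparency and explainability; robustness, security and safety; and accountability.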

The OECD also provides five recommendations for policymakers, including investing in AI research and development, fostering inclusive AI ecosystems, enabling agile governance environments, building human capacity, and advancing international cooperation. In January 2024, the OECD and its health ministers identified an action plan to enable responsible AI in healthcare, including adopting a nimble approach to regulating and overseeing AI in health, establishing public-private and person-provider partnerships, elevating health data governance, and engaging stakeholders.

The EU Artificial Intelligence Act, which entered into force on August 1, 2024, represents the world’s first comprehensive legal framework specifically for AI. The AI Act aims to promote human-centered and trustworthy AI while protecting the health, safety, and fundamental rights of individuals from the potentially harmful effects of AI-enabled systems.

Risk-Based Classification System

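The AI Act categorizes AI systems into four risk levels: unacceptable risk (prohibited practices), high risk (subject to strict requirements), limited risk (subject mainly to transparency obligations), and minimal risk (no additional obligations).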

Requirements for High-Risk Pharmacovigilance AI

Compliance requirements for high-risk systems include establishing a comprehensive risk management framework, ensuring data quality, integrity, and security, maintaining detailed records of the AI system’s functionality and compliance, providing clear information about the AI system’s capabilities and limitations, and implementing measures to ensure appropriate human control and intervention.

Specifically for pharmacovigilance applications:

Risk Management: Organizations must conduct risk assessments throughout the AI lifecycle, identifying potential harms to patient safety, documenting mitigation measures, and establishing monitoring systems to detect emerging risks. The EMA’s Reflection Paper introduces terms such as “high patient risk” for systems affecting patient safety and “high regulatory impact” for cases where the impact on regulatory decision-making is substantial, recommending a risk-based approach to development, deployment, and performance monitoring.

Data Governance: The CIOMS Draft Report operationalizes these goals by advising on the selection and evaluation of training and test datasets to ensure representativeness and implementation of mitigation strategies for identified biases. This includes documenting data provenance, implementing quality control processes, and ensuring that training data adequately represent target populations.
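A first-pass representativeness check can be as simple as comparing each subgroup’s share of the training data with its share of the intended target population. The subgroup labels, population shares, and the 0.8 cut-off below are hypothetical; in practice the reference distribution would come from epidemiological or utilization data for the product’s patient population.

```python
def representation_ratios(train_counts, population_shares):
    """Training-data share divided by target-population share for each subgroup.
    1.0 means proportional representation; values below 1.0 mean underrepresentation."""
    total = sum(train_counts.values())
    return {
        group: (train_counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }

def underrepresented_groups(train_counts, population_shares, min_ratio=0.8):
    """Subgroups whose representation ratio falls below `min_ratio` (a hypothetical cut-off)."""
    ratios = representation_ratios(train_counts, population_shares)
    return sorted(group for group, ratio in ratios.items() if ratio < min_ratio)

if __name__ == "__main__":
    train_counts = {"<65": 7000, "65-74": 2000, "75+": 1000}        # hypothetical ICSR counts
    population_shares = {"<65": 0.60, "65-74": 0.20, "75+": 0.20}  # hypothetical target population
    print(representation_ratios(train_counts, population_shares))
    print(underrepresented_groups(train_counts, population_shares))
```

Subgroups missing from the training data entirely get a ratio of 0.0, so they are always flagged rather than silently ignored.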

Technical Documentation: Detailed records must document model architecture, training procedures, validation results, performance metrics (overall and by subgroup), intended use cases, limitations, and version control. This documentation supports regulatory submissions and inspections.

Human Oversight: The Draft Report expands on EU AI Act requirements by defining practical models of oversight—such as human-in-the-loop, human-on-the-loop, and human-in-command—and mapping these to specific PV tasks.

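Nondiscrimination: The Act requires providers of high-risk systems to examine training, validation, and testing data for biases that could lead to discrimination and to take measures to detect, prevent, and mitigate them. For pharmacovigilance, this translates in practice into fairness audits, bias mitigation strategies, and monitoring for performance parity across demographic groups.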

Timeline for Compliance

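Most AI Act obligations, including those for the high-risk use cases listed in Annex III, apply 24 months after entry into force, from August 2, 2026. Some provisions apply earlier: the prohibitions on unacceptable-risk practices took effect on February 2, 2025, and the rules on general-purpose AI models, notified (conformity assessment) bodies, governance structures, and penalties applied from August 2, 2025. Obligations for high-risk AI systems that are safety components of products covered by EU harmonization legislation, such as medical devices, apply from August 2, 2027. Organizations deploying pharmacovigilance AI must meet these deadlines or face substantial penalties: up to €35 million or 7% of worldwide annual turnover, whichever is higher, for prohibited AI practices, and up to €15 million or 3% for breaches of most other obligations, including those for high-risk systems.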
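Because different obligations phase in on different dates, it can help to encode the timeline and penalty caps so that compliance tooling can answer “what applies on this date?”. The dates below follow Article 113 of Regulation (EU) 2024/1689 and the caps follow Article 99 as adopted; later amendments could change them, and the helper names are hypothetical.

```python
from datetime import date

# Application dates under Article 113 of the EU AI Act (Regulation (EU) 2024/1689), as adopted.
MILESTONES = [
    (date(2025, 2, 2), "prohibited practices and general provisions (incl. AI literacy)"),
    (date(2025, 8, 2), "general-purpose AI models, notified bodies, governance, penalties"),
    (date(2026, 8, 2), "general application, incl. Annex III high-risk systems"),
    (date(2027, 8, 2), "high-risk AI in products under Annex I legislation (e.g. medical devices)"),
]

def obligations_in_force(on):
    """Descriptions of the milestones that have taken effect on the given date."""
    return [label for start, label in MILESTONES if start <= on]

def max_fine_eur(worldwide_turnover_eur, prohibited_practice, sme=False):
    """Upper bound of an administrative fine under Article 99.
    Prohibited practices: EUR 35M or 7% of turnover; most other obligations: EUR 15M or 3%.
    The higher amount applies in general; for SMEs and start-ups, the lower one."""
    fixed, share = (35_000_000, 0.07) if prohibited_practice else (15_000_000, 0.03)
    pick = min if sme else max
    return pick(fixed, share * worldwide_turnover_eur)

if __name__ == "__main__":
    print(obligations_in_force(date(2025, 9, 1)))
    print(max_fine_eur(2_000_000_000, prohibited_practice=False))
```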

While the EU AI Act provides the most comprehensive legal framework, similar principles are emerging globally:

FDA Approach: The FDA’s January 2025 draft guidance on AI credibility assessment aligns with EU AI Act principles, emphasizing risk-based evaluation, transparency, and human oversight. The FDA’s Good Machine Learning Practice (GMLP) principles, developed jointly with Health Canada and UK MHRA, provide a complementary technical framework.

ISO/IEC Standards: Relevant international standards include ISO/IEC 42001 (published in 2023) for AI management systems and ISO/IEC TS 6254 on explainability objectives and approaches, while sector-specific standards under development will inform future regulatory guidance.

CIOMS Framework: The Council for International Organizations of Medical Sciences Working Group XIV Draft Report translates global AI requirements—such as those in the EU AI Act—into practical guidance for pharmacovigilance, helping organizations implement AI systems that are legally compliant, scientifically robust, and ethically sound while supporting harmonization across regions.

Organizations seeking to deploy ethical AI in pharmacovigilance should align their programs with these overlapping frameworks.

The convergence of OECD principles, EU AI Act requirements, FDA guidance, and industry frameworks creates a coherent global direction for ethical AI in pharmacovigilance. Organizations that proactively align with these standards position themselves as leaders in responsible innovation while mitigating compliance risks.

Consider a realistic scenario that illuminates the ethical complexities of AI in pharmacovigilance:

A multinational pharmaceutical company deploys an AI system to automate causality assessments—determining whether reported adverse events are attributable to their marketed drugs. The system uses machine learning trained on 15 years of historical Individual Case Safety Reports (ICSRs), achieving 92% agreement with expert medical reviewers in validation testing. After six months of production use, the AI dramatically accelerates case processing, reducing review time from an average of 45 minutes to 8 minutes per case while maintaining high overall accuracy.

However, a senior medical reviewer notices an unusual pattern: cases involving elderly patients (age 75+) with complex polypharmacy regimens seem disproportionately classified as “unrelated” or “unlikely related” to the company’s drugs, even when clinical narratives suggest plausible causality. Further investigation reveals that the AI system systematically underestimates causality likelihood for this demographic compared to younger patients with simpler medication histories.
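A reviewer’s observation like this can be checked quickly with a two-proportion z-test: compare how often the AI’s causality rating falls below the expert reviewer’s rating in the 75+ polypharmacy subgroup versus all other cases. The counts in the usage example are hypothetical, the test assumes cases are independent, and when many subgroups are screened at once the p-values should be corrected for multiple comparisons.

```python
from math import erfc, sqrt

def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for p1 = x1/n1 versus p2 = x2/n2 using a pooled standard error.
    Returns (z, p_value)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # both proportions are 0 or both are 1: no evidence of a difference
    z = (p1 - p2) / se
    return z, erfc(abs(z) / sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))

if __name__ == "__main__":
    # Hypothetical counts: cases where the AI rated causality lower than the expert reviewer.
    z, p = two_proportion_z_test(x1=48, n1=160, x2=95, n2=1240)
    print(f"z = {z:.2f}, two-sided p = {p:.2g}")
```

A significant result only shows that the disagreement rates differ; establishing why still requires the kind of case-level review the scenario describes.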

Several competing tensions emerge:

Efficiency vs. Accuracy: The AI delivers enormous efficiency gains, enabling the pharmacovigilance team to process 70% more cases with existing resources. Reverting to manual review would create processing backlogs, delaying signal detection for the broader population. However, the accuracy disparity for elderly polypharmacy patients is serious.