In the evolving landscape of drug safety, the quality of pharmacovigilance data has become more critical than ever. As regulatory agencies worldwide tighten their expectations and pharmaceutical companies face increasing volumes of adverse event reports from diverse sources, the margin for error continues to shrink.

Poor-quality data in pharmacovigilance can lead to missed safety signals, delayed regulatory responses, and, ultimately, compromised patient safety. With the integration of artificial intelligence, real-world evidence, and global harmonization efforts, 2025 marks a pivotal year in which data accuracy and completeness are not just regulatory requirements but strategic imperatives for pharmaceutical organizations.

The stakes have never been higher. A single incomplete case report or inaccurate data point can cascade into significant compliance issues, regulatory sanctions, and public health risks.

Data quality in pharmacovigilance encompasses four fundamental dimensions that determine the reliability and utility of safety information. Understanding these dimensions is essential for building robust drug safety systems that meet global regulatory standards.

Accuracy refers to the correctness and precision of data elements within adverse event reports. This includes proper medical coding, correct dosing information, accurate patient demographics, and precise chronological sequences of events.

Completeness ensures that all required data elements are present and sufficiently detailed to support regulatory decision-making. Complete case reports contain adequate information about the patient, product, adverse event, and reporter to enable proper causality assessment.
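At the level of a single report, completeness starts with the four minimum criteria that ICH E2D sets for a valid individual case safety report: an identifiable patient, an identifiable reporter, a suspect product, and an adverse event. The sketch below checks those criteria. The `Case` fields and the rule that any one patient descriptor is enough are simplifying assumptions for illustration, not the E2B(R3) data model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Case:
    # Hypothetical, simplified case record; real ICSRs are structured per ICH E2B(R3).
    patient_initials: Optional[str] = None
    patient_age: Optional[int] = None
    patient_sex: Optional[str] = None
    reporter_name: Optional[str] = None
    reporter_contact: Optional[str] = None
    suspect_products: List[str] = field(default_factory=list)
    events: List[str] = field(default_factory=list)


def missing_minimum_criteria(case: Case) -> List[str]:
    """Return the unmet minimum criteria; an empty list means the case is valid."""
    missing = []
    # Assumption: any single patient descriptor makes the patient identifiable.
    # `is not None` keeps an age of 0 (an infant) from being treated as missing.
    if not (case.patient_initials or case.patient_age is not None or case.patient_sex):
        missing.append("identifiable patient")
    if not (case.reporter_name or case.reporter_contact):
        missing.append("identifiable reporter")
    if not case.suspect_products:
        missing.append("suspect product")
    if not case.events:
        missing.append("adverse event")
    return missing


report = Case(patient_age=54, suspect_products=["drug X"], events=["rash"])
print(missing_minimum_criteria(report))  # ['identifiable reporter']
```

A case that fails this check is a candidate for follow-up with the reporter rather than a finished record.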

Timeliness involves capturing and reporting safety information within prescribed regulatory timeframes. This dimension has become increasingly complex as reporting windows vary globally and real-world data sources provide continuous streams of information.
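Timeliness checks usually count from the date the organization first received the minimum information for a valid case, often called Day 0. The sketch below computes a due date and how many days late a submission is. The 15-calendar-day window in the example reflects the common ICH E2D expedited timeline for serious, unexpected reactions; it is passed as a parameter because, as noted above, windows vary by jurisdiction and report type. The function names are hypothetical.

```python
from datetime import date, timedelta
from typing import Optional


def due_date(day_zero: date, window_days: int) -> date:
    """Last calendar day on which a report counts as on time.

    Assumes the window is counted in calendar days from Day 0 (first receipt
    of minimum information); actual rules depend on the jurisdiction.
    """
    return day_zero + timedelta(days=window_days)


def days_late(day_zero: date, submitted: Optional[date], window_days: int,
              today: date) -> int:
    """Days past the deadline (0 if on time); open cases are measured against `today`."""
    reference = submitted if submitted is not None else today
    return max(0, (reference - due_date(day_zero, window_days)).days)


day_zero = date(2025, 3, 3)
print(due_date(day_zero, 15))                                         # 2025-03-18
print(days_late(day_zero, date(2025, 3, 20), 15, date(2025, 3, 25)))  # 2
```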

Consistency maintains uniform data standards across different sources, systems, and geographical regions. Consistent data enables meaningful aggregation and analysis across diverse pharmacovigilance databases.
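A common consistency problem is that sources write the same date differently: `03/04/2025` means 3 April in much of Europe and 4 March in the United States. A minimal sketch, assuming each source system declares its own date format (the source names and formats below are hypothetical), normalizes every date to ISO 8601 before aggregation and rejects values that do not match the declared format instead of guessing.

```python
from datetime import datetime

# Hypothetical per-source date conventions; a real system would load these from configuration.
SOURCE_DATE_FORMATS = {
    "eu_call_centre": "%d/%m/%Y",
    "us_call_centre": "%m/%d/%Y",
    "partner_feed": "%Y%m%d",
}


def normalize_date(raw: str, source: str) -> str:
    """Convert a source-specific date string to ISO 8601 (YYYY-MM-DD).

    Raises KeyError for an unknown source and ValueError for a value that does
    not match the source's declared format, so bad data is surfaced, not guessed.
    """
    fmt = SOURCE_DATE_FORMATS[source]
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


print(normalize_date("03/04/2025", "eu_call_centre"))  # 2025-04-03
print(normalize_date("03/04/2025", "us_call_centre"))  # 2025-03-04
print(normalize_date("20250304", "partner_feed"))      # 2025-03-04
```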

The World Health Organization defines pharmacovigilance data quality as information that is “fit for purpose” and supports effective signal detection and risk management activities. The WHO emphasizes that quality data should enable healthcare professionals and regulators to make informed decisions about drug safety.

The International Council for Harmonisation (ICH) guidelines, particularly ICH E2B(R3), define the data elements and electronic message format for individual case safety reports (ICSRs). By specifying which data elements must be captured and how they are structured, these guidelines support global interoperability and regulatory compliance.

Both organizations stress that data quality is not merely a technical requirement but a patient safety imperative that underpins the entire pharmacovigilance ecosystem.

High-quality pharmacovigilance data serves as the foundation for multiple critical functions within drug safety operations. The impact of data quality extends far beyond regulatory compliance, influencing every aspect of risk management and patient protection.

Signal Detection relies entirely on accurate and complete data to identify potential safety concerns. Incomplete adverse event reports can mask important safety signals, while inaccurate data can generate false signals that waste resources and delay identification of real risks.
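To make this concrete, consider the proportional reporting ratio (PRR), a widely used disproportionality statistic for spontaneous reports, chosen here as an illustration since the text does not name a specific method. From a 2×2 table of report counts, PRR = [a/(a+b)] / [c/(c+d)], where a counts reports of the event of interest with the drug of interest, b other events with that drug, c the event of interest with all other drugs, and d other events with other drugs. The counts below are invented solely to show how miscoded reports can mask a signal.

```python
def prr(a: int, b: int, c: int, d: int) -> float:
    """Proportional reporting ratio from a 2x2 table of report counts.

    a: drug of interest, event of interest    b: drug of interest, other events
    c: other drugs, event of interest         d: other drugs, other events
    """
    if a + b == 0 or c == 0:
        raise ValueError("PRR is undefined for these counts")
    return (a / (a + b)) / (c / (c + d))


# Invented counts for illustration only.
print(round(prr(a=20, b=980, c=100, d=49900), 2))  # 10.0
# Same data, but 12 of the 20 event reports were coded to a different term
# (moved from a to b), so the apparent disproportionality shrinks.
print(round(prr(a=8, b=992, c=100, d=49900), 2))   # 4.0
```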

Risk Management decisions depend on reliable data to assess the benefit-risk profile of pharmaceutical products. Poor-quality data can lead to inappropriate risk mitigation strategies, unnecessary market withdrawals, or inadequate safety measures.

Regulatory Compliance requires adherence to strict data quality standards across global jurisdictions. Regulators increasingly scrutinize data quality during inspections, and deficiencies can result in significant penalties and operational restrictions.

Patient Safety represents the ultimate stakeholder in data quality initiatives. Accurate and complete safety data enables healthcare providers to make informed prescribing decisions and patients to understand potential risks.

The impact of inadequate pharmacovigilance data quality therefore extends across both operational and strategic dimensions.

The modern pharmacovigilance environment presents unprecedented challenges for maintaining data quality standards. Organizations must navigate an increasingly complex landscape of data sources, regulatory requirements, and technological capabilities.

Multiple Data Sources create significant complexity as safety information flows from diverse channels including electronic health records, social media platforms, patient registries, clinical trials, and spontaneous reporting systems. Each source presents unique data quality challenges and requires specialized processing capabilities.

Global Reporting Timelines vary significantly across jurisdictions, creating operational complexity for multinational pharmaceutical companies. Managing different expedited reporting requirements while maintaining data quality standards requires sophisticated systems and processes.

Data Silos and System Incompatibility prevent seamless data integration and create opportunities for quality degradation. Legacy pharmacovigilance systems often cannot communicate effectively with modern data sources, requiring manual intervention that introduces errors.

The proliferation of real-world evidence sources has sharply increased data volumes while also raising quality expectations. Organizations struggle to process growing amounts of unstructured data while maintaining the accuracy and completeness required for regulatory compliance.

Language barriers and cultural differences in adverse event reporting create additional quality challenges as global pharmacovigilance operations expand into new markets with different medical terminology and reporting practices.

Regulatory agencies worldwide have significantly elevated their expectations for pharmacovigilance data quality, implementing new requirements and enforcement mechanisms that directly impact pharmaceutical operations.

The FDA has strengthened its focus on data integrity through updated inspection protocols and enhanced electronic submission requirements. The agency now expects pharmaceutical companies to demonstrate robust data governance frameworks and implement automated quality controls throughout their pharmacovigilance systems.

The European Medicines Agency continues to advance its EudraVigilance modernization efforts, requiring higher data quality standards for all adverse event submissions. The EMA has implemented enhanced validation rules and expects sponsors to provide more complete and accurate case narratives.

ICH guidelines have evolved to address emerging data sources and technological capabilities. Recent updates emphasize the importance of structured data entry, automated quality checks, and comprehensive data validation procedures.

These regulatory developments reflect a broader shift toward risk-based approaches to pharmacovigilance oversight, where data quality serves as a key indicator of organizational capability and regulatory compliance maturity.

Artificial intelligence and machine learning technologies are reshaping pharmacovigilance data quality management, offering new capabilities for automated quality control and enhancement.

AI-Driven Case Processing enables automated extraction and standardization of adverse event information from diverse sources. Machine learning algorithms can identify and correct common data quality issues, flag incomplete reports, and suggest appropriate medical coding based on narrative descriptions.

Natural Language Processing transforms unstructured text from case narratives, social media posts, and electronic health records into structured, analyzable data. Advanced NLP systems can extract key safety information, identify relevant medical concepts, and ensure consistent terminology across different data sources.

Automated Data Validation implements real-time quality checks that identify and prevent data quality issues before they impact downstream processes. These systems can validate data completeness, check for logical consistency, and ensure compliance with regulatory formatting requirements.
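The logical-consistency part of such validation can be expressed as small, independent rules run on each case at data entry. The rules, field names, and the 0 to 120 year age range below are hypothetical examples, not any regulator's published validation rules: no date may lie in the future, the event cannot start before birth, and a reported age must be plausible. Event onset before the first dose is raised as a warning rather than an error, because it can be genuine (for example, a pre-existing condition) and needs human review.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class CaseDates:
    # Hypothetical subset of case fields used by the consistency rules.
    birth_date: Optional[date]
    first_dose: Optional[date]
    event_onset: Optional[date]
    age_years: Optional[int]


def check_consistency(case: CaseDates, today: date) -> List[Tuple[str, str]]:
    """Return (severity, message) pairs; an empty list means no issues found."""
    issues = []
    for name in ("birth_date", "first_dose", "event_onset"):
        value = getattr(case, name)
        if value is not None and value > today:
            issues.append(("error", f"{name} is in the future"))
    if case.birth_date and case.event_onset and case.event_onset < case.birth_date:
        issues.append(("error", "event_onset precedes birth_date"))
    # Assumed plausibility range for age in years.
    if case.age_years is not None and not 0 <= case.age_years <= 120:
        issues.append(("error", "age_years outside plausible range"))
    if case.first_dose and case.event_onset and case.event_onset < case.first_dose:
        issues.append(("warning", "event_onset precedes first_dose; confirm with reporter"))
    return issues


case = CaseDates(birth_date=date(1970, 5, 1), first_dose=date(2025, 2, 10),
                 event_onset=date(2025, 2, 1), age_years=54)
print(check_consistency(case, today=date(2025, 3, 1)))
# [('warning', 'event_onset precedes first_dose; confirm with reporter')]
```

Keeping each rule separate makes it easy to add, retire, or re-grade a check without touching the others.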

Predictive analytics capabilities enable proactive identification of potential data quality issues, allowing organizations to implement corrective measures before problems impact regulatory compliance or patient safety.

Establishing a comprehensive data quality framework requires systematic attention to governance, processes, technology, and human factors. Successful organizations implement integrated approaches that address all dimensions of data quality management.

Data Governance provides the foundation for sustained data quality through clear roles, responsibilities, and accountability mechanisms. Effective governance structures include executive sponsorship, dedicated data quality teams, and regular performance monitoring.

Standard Operating Procedures ensure consistent application of data quality standards across all pharmacovigilance activities. SOPs should address data collection, validation, processing, and reporting requirements with specific quality metrics and acceptance criteria.

Training and Education programs develop organizational capabilities for maintaining high data quality standards. Regular training ensures that all stakeholders understand their roles in data quality management and stay current with evolving requirements.

Quality Audits provide systematic assessment of data quality performance and identification of improvement opportunities. Both internal and external audits should evaluate data quality processes, systems, and outcomes.

Continuous Improvement processes enable organizations to adapt to changing requirements and leverage new technologies for enhanced data quality. Regular reviews of data quality metrics and feedback mechanisms drive ongoing optimization.

When outsourcing pharmacovigilance activities, maintaining data quality standards requires careful vendor selection, comprehensive oversight, and ongoing performance monitoring. Organizations must ensure that external partners meet the same quality standards applied to internal operations.

Vendor Selection Criteria should include demonstrated data quality capabilities, regulatory compliance history, technology infrastructure, and quality management systems. Prospective vendors should provide evidence of their data quality metrics and improvement initiatives.

Contract Requirements must specify detailed data quality standards, performance metrics, and accountability mechanisms. Contracts should include provisions for quality audits, corrective action procedures, and performance-based incentives.

Ongoing Oversight involves regular monitoring of vendor performance against established quality metrics, periodic audits of vendor operations, and collaborative improvement initiatives. Organizations should maintain direct visibility into vendor data quality processes and outcomes.
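Monitoring a vendor against agreed metrics can be as simple as aggregating submission records per vendor and comparing each rate with the contractual target. In the sketch below, the record layout, vendor names, and the 95% on-time target are hypothetical placeholders for whatever the contract actually specifies.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical contractual target: at least 95% of reports submitted on time.
ON_TIME_TARGET = 0.95


def on_time_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Map each vendor to its share of on-time submissions.

    Each record is a (vendor_name, submitted_on_time) pair.
    """
    totals: Dict[str, int] = defaultdict(int)
    on_time: Dict[str, int] = defaultdict(int)
    for vendor, was_on_time in records:
        totals[vendor] += 1
        on_time[vendor] += int(was_on_time)
    return {vendor: on_time[vendor] / totals[vendor] for vendor in totals}


def below_target(rates: Dict[str, float], target: float = ON_TIME_TARGET) -> List[str]:
    """Vendors whose on-time rate falls short of the target, sorted by name."""
    return sorted(vendor for vendor, rate in rates.items() if rate < target)


records = ([("vendor_a", True)] * 97 + [("vendor_a", False)] * 3
           + [("vendor_b", True)] * 90 + [("vendor_b", False)] * 10)
rates = on_time_rates(records)
print(rates)                # {'vendor_a': 0.97, 'vendor_b': 0.9}
print(below_target(rates))  # ['vendor_b']
```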

Successful outsourcing relationships require partnership approaches where both parties share accountability for data quality outcomes and work collaboratively to address challenges and implement improvements.

As the pharmacovigilance landscape continues to evolve, organizations with superior data quality capabilities will gain significant competitive advantages in regulatory compliance, operational efficiency, and patient safety outcomes.

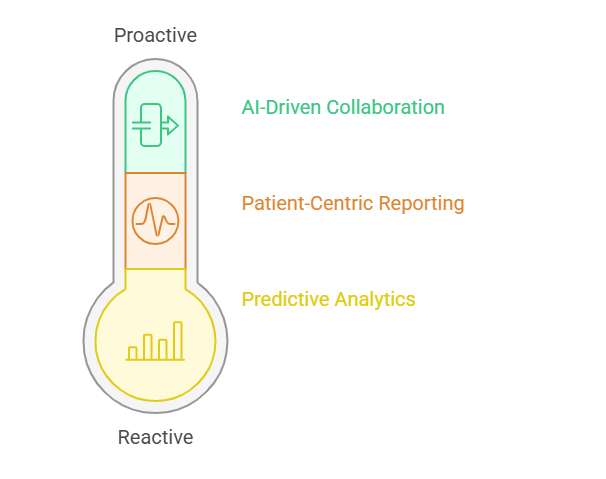

Predictive Analytics capabilities will enable organizations to anticipate safety signals before they become apparent through traditional analysis methods. High-quality historical data provides the foundation for developing accurate predictive models that can identify emerging risks.

Patient-Centric Reporting initiatives will require enhanced data quality to support personalized risk communication and shared decision-making. Organizations must capture more detailed patient information and ensure accuracy to enable meaningful individualized safety assessments.

AI-Driven Global Collaboration will facilitate real-time sharing of safety information across organizations and regulatory boundaries. High data quality standards will be essential for enabling automated analysis and decision-making in collaborative safety monitoring systems.

The organizations that invest in comprehensive data quality frameworks today will be positioned to leverage emerging technologies and regulatory initiatives that depend on reliable, accurate, and complete safety information.

Data quality in pharmacovigilance represents far more than a regulatory requirement in 2025. It serves as the foundation for effective drug safety monitoring, regulatory compliance, and patient protection. Organizations that prioritize data accuracy and completeness will not only meet current regulatory expectations but position themselves for success in an increasingly data-driven pharmaceutical environment. The investment in robust data quality frameworks today will yield dividends in improved safety outcomes, operational efficiency, and competitive advantage for years to come.

Why is data accuracy so critical in pharmacovigilance?

Data accuracy ensures that safety signals are detected promptly and correctly, enabling appropriate risk management decisions. Inaccurate data can mask real safety concerns or generate false alarms that waste resources and delay identification of genuine risks to patient safety.

How do regulators evaluate PV data quality?

Regulators assess data quality through inspections, submission reviews, and performance metrics analysis. They examine data completeness, accuracy, timeliness, and consistency while evaluating organizational processes, systems, and quality control mechanisms to ensure compliance with established standards.

What are common sources of poor-quality data in PV?

Common sources include incomplete spontaneous reports, inconsistent medical coding, delayed data entry, system integration failures, and inadequate training. Multiple data sources with different formats and quality standards also contribute to data quality challenges in modern pharmacovigilance operations.

How can AI help improve data accuracy?

AI enhances data accuracy through automated validation, intelligent data extraction from unstructured sources, predictive quality scoring, and real-time error detection. Machine learning algorithms can identify patterns in data quality issues and suggest corrections, while natural language processing improves terminology consistency across sources.

What are best practices for maintaining data completeness?

Best practices include implementing mandatory field validations, providing clear data entry guidelines, conducting regular completeness audits, establishing follow-up procedures for incomplete reports, and training staff on regulatory requirements. Automated prompts and quality dashboards also support completeness monitoring.
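A completeness dashboard typically reports, for each field, the share of cases in which that field is populated. The minimal sketch below works over plain dictionaries. The field names are hypothetical, and treating `None`, empty strings, empty lists, and absent keys as missing is an assumption; a placeholder such as "unknown" still counts as populated here.

```python
from typing import Dict, Iterable, List, Mapping

# Values treated as "not populated"; whether placeholders such as "unknown"
# should also count as missing is a policy decision left open here.
EMPTY_VALUES = (None, "", [])


def is_populated(value: object) -> bool:
    return value not in EMPTY_VALUES


def field_completeness(cases: Iterable[Mapping[str, object]],
                       fields: List[str]) -> Dict[str, float]:
    """Share of cases in which each field is populated; absent keys count as missing."""
    batch = list(cases)
    if not batch:
        return {name: 0.0 for name in fields}
    return {name: sum(is_populated(case.get(name)) for case in batch) / len(batch)
            for name in fields}


batch = [
    {"patient_age": 54, "dose": "10 mg", "outcome": "recovered"},
    {"patient_age": None, "dose": "", "outcome": "recovering"},
    {"patient_age": 0, "outcome": None},
    {"patient_age": 71, "dose": "5 mg", "outcome": "unknown"},
]
print(field_completeness(batch, ["patient_age", "dose", "outcome"]))
# {'patient_age': 0.75, 'dose': 0.5, 'outcome': 0.75}
```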