The pharmaceutical industry stands at an inflection point in its adoption of artificial intelligence for pharmacovigilance. While AI promises transformative efficiency gains in adverse event processing, signal detection, and literature surveillance, the lack of standardized benchmarking frameworks has created significant validation challenges that slow adoption and undermine regulatory confidence.

The stakes are considerable. Pharmacovigilance systems process safety data that directly influences regulatory decisions, product labels, and clinical practice—ultimately impacting patient lives. Deploying AI solutions without rigorous performance benchmarks risks introducing systematic errors that could delay critical safety signal detection or generate false alarms that waste investigative resources.

Recent industry surveys and assessments reveal substantial gaps in AI validation practices:

Adoption Without Standardization: A 2024 survey of pharmaceutical safety leaders found that while 72% of organizations have deployed or are piloting AI tools in pharmacovigilance, only 38% have established formal benchmarking frameworks for evaluating these systems. This disparity highlights a concerning pattern of technology adoption outpacing validation rigor.

Performance Metric Inconsistency: Research examining published AI validation studies in drug safety identified that 64% of implementations report only accuracy metrics, neglecting crucial measures like precision, recall, and F1-scores that provide deeper insight into model behavior. This selective reporting makes cross-system comparisons nearly impossible and obscures important performance trade-offs.

Validation-Production Performance Gaps: Industry data indicates that AI models for pharmacovigilance tasks experience an average 12-18% performance degradation when transitioning from controlled validation environments to real-world production settings. Organizations without systematic benchmarking often discover these gaps only after deployment, resulting in costly remediation efforts.

Regulatory Scrutiny Intensifying: Health authorities are increasing their focus on AI validation evidence during inspections. The FDA’s 2024 guidance on AI/ML in drug and biological products emphasizes “appropriate performance benchmarks” and “ongoing monitoring,” while the EMA’s AI reflection paper calls for “transparent performance metrics aligned with intended use.” Organizations lacking robust benchmarking documentation face heightened regulatory risk.

Time-to-Signal Measurement Gaps: Despite time-to-signal being a critical safety metric, only 29% of organizations systematically measure whether AI systems accelerate or delay safety signal identification compared to traditional methods. This gap prevents organizations from quantifying one of AI’s most important potential benefits.

Resource Investment Justification: Without clear performance benchmarks, safety leaders struggle to justify AI investments to executive stakeholders. Organizations with mature benchmarking practices report 3.2x higher success rates in securing funding for AI expansion compared to those relying on anecdotal evidence.

Drug safety’s unique characteristics make benchmarking particularly critical:

Imbalanced Data Prevalence: Most adverse event datasets exhibit severe class imbalance—serious adverse events represent only 10-15% of total reports, and true safety signals constitute an even smaller fraction. Standard accuracy metrics can be misleading in these scenarios, making comprehensive benchmarking essential.

High Cost of Errors: False negatives in signal detection can delay identification of serious patient risks, while false positives waste precious medical reviewer time investigating spurious associations. Benchmarking must quantify both error types to support appropriate model selection.

Regulatory Accountability: Unlike many AI applications, where suboptimal performance carries limited consequences, pharmacovigilance AI errors can result in regulatory citations, consent decrees, or public health consequences. Benchmarking provides the evidence base for demonstrating due diligence.

Diverse Use Case Requirements: Different PV applications—case intake, medical coding, duplicate detection, signal detection—have distinct performance requirements. Benchmarking frameworks must be flexible enough to accommodate use-case-specific metrics while maintaining cross-application comparability.

This introduction establishes that benchmarking is not merely a technical best practice but a strategic imperative for responsible AI adoption in pharmacovigilance—one that the industry must address through standardized KPIs, rigorous validation protocols, and transparent performance reporting.

Defining KPIs: Essential Metrics for Pharmacovigilance AI Evaluation

Effective benchmarking requires selecting performance indicators that capture the multiple dimensions of AI system quality relevant to drug safety operations. While no single metric tells the complete story, a comprehensive KPI framework enables informed decision-making about model deployment and ongoing performance management.

Accuracy: The proportion of correct predictions (both positive and negative) among total predictions. While intuitively appealing, accuracy can be misleading in imbalanced pharmacovigilance datasets.

Formula: Accuracy = (True Positives + True Negatives) / Total Cases

PV Context: A model that predicts “not serious” for every case would achieve 85% accuracy on a dataset where 15% of cases are serious, even though it identifies none of the serious adverse events. This is why accuracy alone is insufficient for PV benchmarking.

Typical PV Benchmarks: 88-95% for well-designed systems across balanced validation sets; interpret cautiously in production with imbalanced data.
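The imbalance problem is easy to reproduce. The Python sketch below scores a degenerate model that labels every case “not serious” against a hypothetical set of 1,000 cases with 15% serious prevalence, matching the example above. The data are synthetic, and the helper names `accuracy` and `recall` are illustrative rather than part of any particular library.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of actual positives that the model also predicted as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not preds_for_positives:
        return 0.0
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)


# Hypothetical dataset: 150 serious (1) and 850 non-serious (0) cases.
y_true = [1] * 150 + [0] * 850
# Degenerate "model" that predicts "not serious" (0) for every case.
y_pred = [0] * len(y_true)

print(f"accuracy = {accuracy(y_true, y_pred):.2f}")  # accuracy = 0.85
print(f"recall   = {recall(y_true, y_pred):.2f}")    # recall   = 0.00
```

The same 85% accuracy coexists with zero recall for serious cases, which is the failure mode the recall metric below is designed to expose.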

Precision (Positive Predictive Value): The proportion of positive predictions that are actually correct. High precision means few false positives—when the model flags a case as serious or identifies a potential signal, it’s usually right.

Formula: Precision = True Positives / (True Positives + False Positives)

PV Context: For signal detection systems, high precision reduces alert fatigue by ensuring most flagged drug-event combinations warrant investigation. For case triage, high precision means few invalid cases incorrectly classified as reportable ICSRs.

Typical PV Benchmarks: 85-92% for case classification; 70-80% for signal detection (where some false positive tolerance is acceptable to avoid missing true signals).

Recall (Sensitivity): The proportion of actual positive cases that the model correctly identifies. High recall means few false negatives: the model rarely misses a case that is truly positive, such as a serious adverse event or a genuine signal.

Formula: Recall = True Positives / (True Positives + False Negatives)

PV Context: In drug safety, recall is often prioritized over precision because missing a serious adverse event or true safety signal carries greater risk than investigating a false alarm. High recall ensures comprehensive safety surveillance.

Typical PV Benchmarks: 90-98% for serious case identification; 85-93% for signal detection, reflecting safety-first priorities that tolerate some false positives to minimize false negatives.

F1-Score: The harmonic mean of precision and recall, providing a balanced measure that penalizes extreme trade-offs. The F1-score is particularly valuable when you need balanced performance rather than optimizing one metric at the expense of the other.

Formula: F1 = 2 × (Precision × Recall) / (Precision + Recall)

PV Context: F1-scores help evaluate whether AI systems achieve an appropriate balance between catching important safety issues (recall) and avoiding alert fatigue (precision). An F1-score of 0.85 with precision=0.82 and recall=0.88 suggests different characteristics than F1=0.85 with precision=0.92 and recall=0.79.

Typical PV Benchmarks: 0.85-0.92 for case classification tasks; 0.75-0.85 for signal detection, where the inherent difficulty of distinguishing true signals from noise makes perfect balance challenging.
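The formulas above translate directly into code. This minimal sketch computes precision, recall, and F1 from confusion-matrix counts and checks the two operating points from the F1 example, which both round to 0.85 despite very different precision/recall profiles. `ConfusionCounts` is a hypothetical helper type, and the counts are illustrative.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives (not used by these three metrics)


def precision(c: ConfusionCounts) -> float:
    """TP / (TP + FP); 0.0 when the model made no positive predictions."""
    return c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0


def recall(c: ConfusionCounts) -> float:
    """TP / (TP + FN); 0.0 when there are no actual positives."""
    return c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


# Hypothetical counts for a serious-case classifier.
counts = ConfusionCounts(tp=88, fp=12, fn=22, tn=878)
print(precision(counts), recall(counts))  # 0.88 0.8

# The two operating points from the F1 example above.
for p, r in [(0.82, 0.88), (0.92, 0.79)]:
    print(f"precision={p:.2f} recall={r:.2f} F1={f1(p, r):.3f}")
# precision=0.82 recall=0.88 F1=0.849
# precision=0.92 recall=0.79 F1=0.850
```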

Time-to-Signal: The duration from when sufficient data exists to identify a safety signal to when the AI system (or human process) actually flags it for investigation. This metric directly measures AI’s impact on patient safety.

Measurement Approach: Retrospective analysis comparing when known safety signals would have been detected using AI versus traditional disproportionality analysis or periodic review. Requires establishing “ground truth” signal emergence points.

PV Context: Accelerating signal detection by even weeks can prevent patient exposures to harmful therapies. Time-to-signal quantifies AI’s core value proposition in proactive safety surveillance.

Typical PV Benchmarks: 20-40% reduction in time-to-signal compared to traditional methods; signals identified 2-6 weeks earlier on average for AI-augmented surveillance systems.
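A retrospective time-to-signal comparison reduces to measuring detection lag against an agreed emergence date for each known signal. In the sketch below, the signal IDs and all dates are hypothetical placeholders, not real results. Establishing the `emerged` date is the hard, expert-driven step; the code simply takes it as given.

```python
from datetime import date
from statistics import mean

# Hypothetical retrospective records: for each known signal, the agreed
# ground-truth emergence date and the date each method first flagged it.
signals = [
    {"id": "SIG-A", "emerged": date(2023, 1, 10),
     "traditional": date(2023, 4, 4), "ai": date(2023, 3, 7)},
    {"id": "SIG-B", "emerged": date(2023, 5, 1),
     "traditional": date(2023, 7, 24), "ai": date(2023, 7, 3)},
]


def lag_days(record, method):
    """Days from ground-truth emergence until `method` flagged the signal."""
    return (record[method] - record["emerged"]).days


trad_lags = [lag_days(s, "traditional") for s in signals]
ai_lags = [lag_days(s, "ai") for s in signals]

mean_trad, mean_ai = mean(trad_lags), mean(ai_lags)
reduction = (mean_trad - mean_ai) / mean_trad  # relative reduction in time-to-signal
earlier_weeks = (mean_trad - mean_ai) / 7      # average head start, in weeks

print(f"mean lag: traditional={mean_trad:.1f} d, AI={mean_ai:.1f} d")
print(f"reduction={reduction:.0%}, about {earlier_weeks:.1f} weeks earlier")
```

With real data, it is worth reporting the per-signal distribution alongside the mean, since a few long-lag signals can dominate an average.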

Case Processing Time: Average duration to complete case intake, medical coding, and preparation for regulatory submission. While operational rather than purely technical, this KPI measures AI’s efficiency impact.

Measurement Approach: Track timestamp differences between case receipt and case finalization, segmented by case complexity categories (simple, moderate, complex).

Typical PV Benchmarks: 60-75% reduction in processing time for routine cases; 30-50% reduction for complex cases requiring substantial human review, even with AI assistance.

Medical Coding Consistency: Agreement rate between AI-suggested MedDRA terms and expert coder assignments, measuring whether AI improves coding standardization.

Measurement Approach: Calculate inter-rater reliability between AI suggestions and expert coding (Cohen’s kappa against a single expert reference; Fleiss’ kappa when several coders are compared), and compare the result with historical human-to-human intercoder agreement.

Typical PV Benchmarks: Cohen’s kappa of 0.80-0.90 for AI-to-expert agreement; AI should meet or exceed typical human intercoder kappa values of 0.75-0.85.
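Cohen’s kappa corrects raw agreement for the agreement expected by chance. The sketch below implements the standard two-rater formula in plain Python; the MedDRA-style Preferred Terms are illustrative labels only, and a comparison involving more than two coders would need Fleiss’ kappa instead.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one categorical label per item.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e the agreement expected by chance from each rater's label frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used the same single label for every item; kappa is
        # undefined, and 1.0 is returned here by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Illustrative Preferred Terms for ten cases: expert coder vs. AI suggestion.
expert = ["Headache", "Nausea", "Nausea", "Rash", "Headache",
          "Dizziness", "Rash", "Nausea", "Headache", "Dizziness"]
ai = ["Headache", "Nausea", "Nausea", "Rash", "Dizziness",
      "Dizziness", "Rash", "Nausea", "Headache", "Headache"]

print(f"kappa = {cohens_kappa(expert, ai):.2f}")  # kappa = 0.73
```

Raw agreement in this example is 80%, but kappa is about 0.73, because two coders who favor the same few terms will agree fairly often by chance alone.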

Duplicate Detection Accuracy: The system’s ability to correctly identify duplicate case reports while avoiding false linkage of distinct cases.

Measurement Components: Requires measuring both sensitivity (identifying true duplicates) and specificity (not incorrectly linking unique cases), as both errors have quality implications.

Typical PV Benchmarks: 92-97% sensitivity for duplicate identification; 96-99% specificity to minimize false linkage; F1-scores of 0.90-0.95.
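Because duplicate detection is a decision about pairs of cases, sensitivity and specificity are naturally computed over candidate pairs. This sketch assumes string case IDs, treats duplicate links as unordered pairs, and considers every possible pair a candidate; production systems usually narrow candidates first (blocking) to avoid comparing all pairs. The case IDs and links are hypothetical.

```python
from itertools import combinations


def _normalize(pairs):
    """Treat (a, b) and (b, a) as the same unordered pair."""
    return {frozenset(p) for p in pairs}


def duplicate_detection_rates(case_ids, predicted_links, true_links):
    """Sensitivity and specificity of duplicate linkage over all case pairs."""
    universe = _normalize(combinations(case_ids, 2))
    predicted, truth = _normalize(predicted_links), _normalize(true_links)
    tp = len(predicted & truth)             # true duplicates that were linked
    fn = len(truth - predicted)             # true duplicates that were missed
    fp = len(predicted - truth)             # distinct cases wrongly linked
    tn = len(universe - predicted - truth)  # distinct cases correctly left apart
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity


# Hypothetical example: five cases, two true duplicate pairs.
cases = ["C1", "C2", "C3", "C4", "C5"]
truth = [("C1", "C2"), ("C3", "C4")]
predicted = [("C2", "C1"), ("C4", "C5")]
sens, spec = duplicate_detection_rates(cases, predicted, truth)
print(f"sensitivity={sens:.2f} specificity={spec:.3f}")  # sensitivity=0.50 specificity=0.875
```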

Literature Surveillance Coverage: The proportion of relevant publications containing reportable adverse events that the AI system successfully identifies and extracts.

Measurement Approach: Periodic validation against manually reviewed publication samples, calculating recall (coverage) and precision (relevance).

Typical PV Benchmarks: 88-95% recall, ensuring comprehensive literature monitoring; 75-85% precision, balancing thoroughness against manual review burden for false positives.

Prediction Confidence Distribution: Analysis of how confident the model is in its predictions, revealing whether uncertainty is appropriately calibrated.

PV Context: Well-calibrated models with lower confidence on difficult cases can route those cases for enhanced human review, while high-confidence predictions may require less intensive validation.

Typical PV Benchmarks: Confidence scores should correlate with actual accuracy—predictions with 95% confidence should be correct 93-97% of the time (well-calibrated), not 75% (overconfident) or 99% (underconfident).
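One common way to check calibration is to bin predictions by stated confidence and compare each bin’s mean confidence with its observed accuracy; the count-weighted average gap is often reported as expected calibration error (ECE). The sketch below uses hypothetical data in which 95%-confidence predictions are correct only 75% of the time, the overconfident case described above.

```python
def calibration_table(confidences, correct, n_bins=10):
    """Bin predictions by confidence and compare stated vs. observed accuracy.

    Returns a list of (bin_lower_edge, mean_confidence, observed_accuracy, count)
    rows plus the expected calibration error: the count-weighted mean of
    |mean_confidence - observed_accuracy| across non-empty bins.
    """
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 joins the top bin
        bins[idx].append((conf, ok))
    rows, ece, total = [], 0.0, len(confidences)
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        observed = sum(ok for _, ok in members) / len(members)
        rows.append((i / n_bins, mean_conf, observed, len(members)))
        ece += len(members) / total * abs(mean_conf - observed)
    return rows, ece


# Hypothetical: 20 predictions made at 95% confidence, only 15 of them correct.
rows, ece = calibration_table([0.95] * 20, [True] * 15 + [False] * 5)
for lower, mean_conf, observed, count in rows:
    print(f"bin {lower:.1f}+: confidence={mean_conf:.2f} accuracy={observed:.2f} n={count}")
print(f"ECE = {ece:.2f}")  # ECE = 0.20
```

Against the benchmark above, a well-calibrated 0.95 bin would show observed accuracy between 0.93 and 0.97; a 0.20 gap marks the model as overconfident, so its high-confidence predictions should not be allowed to bypass review.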

Performance Across Subgroups: Measuring whether AI performance varies by case characteristics (product type, report source, geography, event severity) to identify potential biases or gaps.

Measurement Approach: Stratify performance metrics by relevant subgroups and flag statistically significant performance variations that might indicate model limitations.

Typical PV Benchmarks: Performance variation should remain within ±5% across major subgroups; larger variations require investigation and potential model refinement.
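Subgroup stratification can be sketched as computing the same metric per subgroup and comparing it with the overall value. This version computes recall by report source, with hypothetical sources and counts, and reads the ±5% benchmark as an absolute gap of 5 percentage points (an assumption). It does not perform the significance testing the measurement approach calls for; a fuller implementation would add a statistical test or a minimum subgroup size before flagging.

```python
from collections import defaultdict


def recall_by_subgroup(records, key):
    """Recall for each value of `key`, using only actually-positive records."""
    caught, total = defaultdict(int), defaultdict(int)
    for r in records:
        if r["actual"]:
            total[r[key]] += 1
            caught[r[key]] += r["predicted"]
    return {g: caught[g] / total[g] for g in total}


def flag_deviations(per_group, overall, tolerance=0.05):
    """Subgroups whose metric differs from the overall value by more than
    `tolerance`, read here as an absolute gap (5 percentage points)."""
    return {g: v for g, v in per_group.items() if abs(v - overall) > tolerance}


def make_group(source, n_serious, n_caught):
    """Hypothetical helper: n_serious serious cases, n_caught flagged by the AI."""
    return [{"source": source, "actual": True, "predicted": i < n_caught}
            for i in range(n_serious)]


records = (make_group("HCP", 20, 19) + make_group("consumer", 20, 14)
           + make_group("literature", 20, 17))
per_group = recall_by_subgroup(records, "source")
overall = sum(r["predicted"] for r in records if r["actual"]) / sum(
    r["actual"] for r in records)
print(per_group, round(overall, 3))
print("investigate:", flag_deviations(per_group, overall))
```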

These KPIs collectively provide a multidimensional view of AI system performance, enabling organizations to make evidence-based decisions about deployment readiness, ongoing monitoring thresholds, and model improvement priorities.

Establishing Baseline Performance: Before deploying AI, measure current manual process performance using the same metrics. This provides realistic comparison points and helps set achievable targets—expecting 95% accuracy when current human performance is 88% may be unrealistic.

Risk-Based Benchmark Adjustment: Organizations may adjust benchmarks based on risk tolerance and resource constraints. A company with substantial safety staff might accept lower precision (more false positives) to maximize recall, while resource-constrained organizations might need tighter precision to make the investigation workload manageable.

Vendor Evaluation Framework: When evaluating commercial AI solutions, request validation evidence demonstrating performance against these benchmarks on representative datasets. Be skeptical of vendors showing only accuracy or only results on curated datasets.

Continuous Monitoring Thresholds: Use these benchmarks to establish alert thresholds for production monitoring. If any metric falls below the lower bound of its benchmark range, trigger investigation and potential model retraining.
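Turning benchmark ranges into monitoring alerts amounts to comparing each period’s metrics with the lower bound of the corresponding range. The bounds below are taken from the case-classification ranges quoted earlier in this section; the weekly values are hypothetical, and `metrics_below_bounds` is an illustrative name.

```python
# Lower bounds from the case-classification benchmark ranges quoted above;
# each organization would substitute its own agreed thresholds.
LOWER_BOUNDS = {
    "precision": 0.85,  # 85-92% for case classification
    "recall": 0.90,     # 90-98% for serious case identification
    "f1": 0.85,         # 0.85-0.92 for case classification
}


def metrics_below_bounds(observed, lower_bounds=LOWER_BOUNDS):
    """Map each metric that fell below its lower bound to (value, bound)."""
    return {
        name: (value, lower_bounds[name])
        for name, value in observed.items()
        if name in lower_bounds and value < lower_bounds[name]
    }


# Hypothetical metrics for one monitoring period.
this_week = {"precision": 0.88, "recall": 0.87, "f1": 0.875}
alerts = metrics_below_bounds(this_week)
for name, (value, bound) in alerts.items():
    print(f"ALERT: {name}={value:.3f} is below its lower bound of {bound:.2f}")
# ALERT: recall=0.870 is below its lower bound of 0.90
```

In this example F1 stays inside its range while recall falls out of it, which is why each metric is given its own bound rather than relying on a single summary score.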

Progressive Improvement Roadmap: Organizations new to AI might initially accept performance at the lower end of benchmark ranges, establishing improvement roadmaps to reach optimal levels over 12-24 months as systems mature and training data expands.

This reference framework enables consistent, evidence-based AI evaluation across the pharmacovigilance ecosystem, fostering transparency and accelerating industry maturation in AI adoption.

Comparing AI Models: Internal Validation vs Real-World Performance

One of the most critical—and frequently overlooked—aspects of AI benchmarking is understanding the performance gap between controlled validation environments and production deployment. This gap often determines whether AI implementations succeed or become expensive failures requiring extensive remediation.

Why Gaps Emerge: AI models are typically developed and validated using historical datasets with known outcomes, carefully curated for quality and completeness. Production environments present challenges absent in validation:

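Quantifying the Gap: Industry data suggests average performance degradation of 12-18% when transitioning from validation to production. However, this varies substantially by application: case classification typically experiences 8-12% degradation, medical coding 10-15%, signal detection 15-20% as the safety landscape evolves, and literature surveillance 12-18% as publication patterns change.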

Temporal Validation Splits: Rather than random train-test splits, use temporal splits where training data predates test data. This better simulates production conditions where models predict future cases based on historical learning.

Implementation: Train on years 2020-2023, validate on 2024 data. If performance degrades substantially, the model may not handle temporal drift well.
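A temporal split is simply a cutoff on case receipt date. This sketch assumes each case record carries a `received` date (a hypothetical field name) and mirrors the 2020-2023 training / 2024 validation example.

```python
from datetime import date


def temporal_split(cases, cutoff):
    """Split cases so every training case was received before `cutoff` and
    every validation case on or after it (no random shuffling)."""
    train = [c for c in cases if c["received"] < cutoff]
    validation = [c for c in cases if c["received"] >= cutoff]
    return train, validation


# Hypothetical case records; only the receipt date matters for the split.
cases = [
    {"case_id": "C-001", "received": date(2021, 3, 14)},
    {"case_id": "C-002", "received": date(2023, 11, 2)},
    {"case_id": "C-003", "received": date(2024, 2, 20)},
    {"case_id": "C-004", "received": date(2024, 8, 5)},
]
# Train on 2020-2023, validate on 2024.
train, validation = temporal_split(cases, cutoff=date(2024, 1, 1))
print([c["case_id"] for c in train])       # ['C-001', 'C-002']
print([c["case_id"] for c in validation])  # ['C-003', 'C-004']
```

Comparing metrics on the 2024 hold-out with those from a random split of the same data gives a rough indication of how sensitive the model is to temporal drift.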

Stratified Sampling: Ensure validation sets maintain realistic class distributions matching production data. Oversampling rare events for model training is appropriate, but validation should reflect true production frequencies.

Implementation: If 12% of production cases are serious adverse events, validation sets should maintain that proportion rather than artificial 50/50 serious/non-serious splits.
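Sampling the same fraction from each class stratum preserves production prevalence in the validation set. The sketch assumes a hypothetical population of 1,000 cases with 12% serious, as in the example above, and a boolean `serious` field on each record.

```python
import random
from collections import defaultdict


def stratified_sample(cases, label_key, fraction, seed=0):
    """Draw the same fraction from every label stratum so the sample keeps the
    population's class proportions (up to rounding within each stratum)."""
    rng = random.Random(seed)  # fixed seed for a reproducible validation set
    strata = defaultdict(list)
    for case in cases:
        strata[case[label_key]].append(case)
    sample = []
    for label in sorted(strata):
        members = strata[label]
        sample.extend(rng.sample(members, round(len(members) * fraction)))
    return sample


# Hypothetical production population: 1,000 cases, 12% serious.
population = [{"case_id": i, "serious": i < 120} for i in range(1000)]
validation = stratified_sample(population, "serious", fraction=0.25)
share = sum(c["serious"] for c in validation) / len(validation)
print(len(validation), f"{share:.0%}")  # 250 12%
```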

Out-of-Distribution Testing: Deliberately test model performance on case types, products, or data sources absent from training data to assess generalization capability.

Implementation: If the model was trained on small molecule drugs, test performance on biologics or gene therapies before deploying across the entire product portfolio.

Adversarial Validation: Create challenging test cases specifically designed to probe model weaknesses—ambiguous case descriptions, borderline seriousness determinations, unusual adverse event terminology.

Implementation: Safety experts craft difficult validation cases based on historical disagreements or classification challenges, testing whether AI handles nuanced scenarios appropriately.

Continuous Metric Tracking: Production systems should automatically calculate performance metrics on a rolling basis, using human-validated cases as ground truth.

Implementation: Calculate weekly precision, recall, and F1-scores on all cases that underwent human review or QA, tracking trends over time to detect performance degradation.
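Weekly monitoring on human-reviewed cases can be sketched by bucketing each reviewed case into its ISO calendar week and accumulating confusion counts. The field names (`reviewed`, `ai`, `human`) and the sample data are hypothetical; a metric is reported as `None` in any week where it is undefined, rather than as a misleading zero.

```python
from collections import defaultdict
from datetime import date


def weekly_metrics(reviewed_cases):
    """Precision, recall, and F1 per ISO week over human-reviewed cases.

    Each case carries its review date plus boolean AI and human (ground truth)
    decisions for the positive class. A metric is None when undefined that week.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for case in reviewed_cases:
        year, week, _ = case["reviewed"].isocalendar()
        c = counts[(year, week)]
        if case["ai"] and case["human"]:
            c["tp"] += 1
        elif case["ai"]:
            c["fp"] += 1
        elif case["human"]:
            c["fn"] += 1
    results = {}
    for key in sorted(counts):
        tp, fp, fn = counts[key]["tp"], counts[key]["fp"], counts[key]["fn"]
        p = tp / (tp + fp) if tp + fp else None
        r = tp / (tp + fn) if tp + fn else None
        f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else None  # = 2PR/(P+R)
        results[key] = {"precision": p, "recall": r, "f1": f1}
    return results


def reviewed(day, ai, human, n=1):
    """Hypothetical helper producing n identical reviewed-case records."""
    return [{"reviewed": day, "ai": ai, "human": human} for _ in range(n)]


cases = (reviewed(date(2024, 6, 3), True, True, 3) + reviewed(date(2024, 6, 4), True, False)
         + reviewed(date(2024, 6, 5), False, True)
         + reviewed(date(2024, 6, 11), True, True, 4)
         + reviewed(date(2024, 6, 12), False, False, 2))
for (year, week), m in weekly_metrics(cases).items():
    print(year, week, m)
```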

Drift Detection Algorithms: Implement statistical methods to identify when input data distributions or model outputs shift significantly from training expectations.

Techniques: Kolmogorov-Smirnov tests for feature distribution shifts, Page-Hinkley tests for gradual drift, or specialized drift detection algorithms like ADWIN that adapt to streaming data.
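Of the techniques listed, the Page-Hinkley test is compact enough to show in full. The sketch below monitors a stream of override indicators (1 = a reviewer overrode the AI) and signals when the running mean shifts upward; the `delta` and `threshold` values and the synthetic stream are assumptions chosen for illustration, not tuned recommendations. It detects increases only; a mirrored version would be needed for decreases. For the Kolmogorov-Smirnov approach, `scipy.stats.ks_2samp` compares two samples of a feature directly.

```python
class PageHinkley:
    """Page-Hinkley test for an upward shift in the mean of a monitored stream.

    delta: per-observation slack, so small fluctuations do not accumulate.
    threshold: alarm when the cumulative deviation rises more than this far
    above its running minimum (often written as lambda).
    """

    def __init__(self, delta=0.005, threshold=2.0):
        self.delta = delta
        self.threshold = threshold
        self.n = 0
        self.mean = 0.0
        self.cumulative = 0.0
        self.minimum = 0.0

    def update(self, x):
        """Add one observation; return True if an upward drift is signalled."""
        self.n += 1
        self.mean += (x - self.mean) / self.n  # running mean of the stream
        self.cumulative += x - self.mean - self.delta
        self.minimum = min(self.minimum, self.cumulative)
        return self.cumulative - self.minimum > self.threshold


# Synthetic stream of override indicators (1 = reviewer overrode the AI):
# about 5% overrides for 200 cases, then about 33%.
stream = [1 if i % 20 == 0 else 0 for i in range(200)]
stream += [1 if i % 3 == 0 else 0 for i in range(200, 300)]

detector = PageHinkley(delta=0.005, threshold=2.0)
alarm_index = next((i for i, x in enumerate(stream) if detector.update(x)), None)
print(f"drift signalled at observation {alarm_index}")
```

Lowering `threshold` detects shifts sooner at the cost of more false alarms; in practice it would be tuned on historical periods with known, stable performance.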

Human-AI Agreement Analysis: Track concordance between AI predictions and human decisions, investigating patterns in disagreement to identify systematic issues.

Implementation: When humans override AI suggestions >15% of the time on a particular case type or for a specific product, investigate whether model retraining or refinement is needed.
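The 15% override trigger can be checked per case type or product by counting disagreements between the AI label and the final human label. The product names, field names, and the `min_cases` floor (added so very small groups do not trigger alerts) are hypothetical choices.

```python
from collections import Counter

OVERRIDE_ALERT_RATE = 0.15  # the >15% trigger described above


def override_alerts(decisions, group_key, min_cases=30):
    """Groups whose human override rate exceeds OVERRIDE_ALERT_RATE.

    A decision counts as an override when the final human label differs from
    the AI label. Groups with fewer than `min_cases` decisions are skipped
    because their rates are too noisy to act on.
    """
    totals, overrides = Counter(), Counter()
    for d in decisions:
        totals[d[group_key]] += 1
        overrides[d[group_key]] += d["ai_label"] != d["human_label"]
    return {
        g: overrides[g] / totals[g]
        for g in totals
        if totals[g] >= min_cases and overrides[g] / totals[g] > OVERRIDE_ALERT_RATE
    }


def make_decisions(product, n, n_overridden):
    """Hypothetical helper: n decisions, the first n_overridden overridden."""
    return [{"product": product, "ai_label": "serious",
             "human_label": "non-serious" if i < n_overridden else "serious"}
            for i in range(n)]


decisions = (make_decisions("Product-X", 40, 10) + make_decisions("Product-Y", 40, 4)
             + make_decisions("Product-Z", 10, 5))
print(override_alerts(decisions, "product"))  # {'Product-X': 0.25}
```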

A/B Testing for Model Updates: Before fully deploying model improvements, run controlled experiments comparing new model versions against existing systems on live production data.

Implementation: Route 80% of cases to the production model and 20% to the candidate model, comparing performance metrics over 2-4 weeks before full rollout.
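For the 80/20 split, one reasonable design (an assumption, not something the approach above requires) is to route by a hash of the case ID rather than a random draw, so a case re-processed later lands in the same arm and the assignment can be reconstructed during an audit. The case ID format is hypothetical.

```python
import hashlib


def assign_arm(case_id: str, candidate_share: float = 0.20) -> str:
    """Route a case to 'candidate' or 'production' deterministically.

    The case ID is hashed into one of 10,000 buckets, so the same case always
    lands in the same arm and the split can be reconstructed later.
    """
    digest = hashlib.sha256(case_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "candidate" if bucket < round(candidate_share * 10_000) else "production"


# Hypothetical case IDs; roughly 20% should land in the candidate arm.
arms = [assign_arm(f"CASE-{i:06d}") for i in range(10_000)]
print(f"candidate share: {arms.count('candidate') / len(arms):.1%}")
```

Comparing the two arms over the 2-4 week window can then reuse the same metric code as any other monitoring period, restricted to human-reviewed cases in each arm.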

Shadow Mode Deployment: Run new AI systems in parallel with existing processes, generating predictions without affecting operations. This provides real-world performance data before actual deployment.

Benefits: Reveals production challenges without risking quality, builds confidence among safety professionals, and allows tuning before go-live.

Gradual Rollout Strategies: Deploy AI to subsets of workflows, products, or case types before full implementation, enabling controlled expansion based on demonstrated performance.

Implementation Example: Begin with case classification for well-established small molecules, expand to new products after 3-6 months of stable performance, then extend to biologics after additional validation.

Production Fine-Tuning: Use accumulated production data and human feedback to retrain models periodically, adapting to real-world conditions.

Frequency: Quarterly retraining for rapidly evolving applications like signal detection; semi-annual for more stable applications like medical coding.

Ensemble Approaches: Deploy multiple models with different strengths, combining predictions to improve robustness. If individual models disagree substantially, flag cases for enhanced human review.

Implementation: Combine a model optimized for high recall with one optimized for high precision, using human review when they disagree about case classification.
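The disagreement rule above fits in a few lines. This sketch assumes each model emits a boolean “serious” prediction; the function name is illustrative.

```python
def ensemble_decision(high_recall_pred: bool, high_precision_pred: bool) -> str:
    """Combine two binary 'serious' predictions.

    Agreement becomes the ensemble output; disagreement is not resolved
    automatically but sent for enhanced human review.
    """
    if high_recall_pred == high_precision_pred:
        return "serious" if high_recall_pred else "non-serious"
    return "human review"


for pair in [(True, True), (True, False), (False, True), (False, False)]:
    print(pair, "->", ensemble_decision(*pair))
```

The share of cases routed to `human review` is itself worth tracking: a rising disagreement rate may indicate that the two models are diverging on new data.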

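Organizations should plan for the validation-production gap when establishing deployment criteria: set deployment thresholds above the minimum acceptable production performance, run shadow mode testing before full deployment, budget 3-6 months for production tuning, and maintain continuous monitoring to detect degradation early.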

The key insight is that internal validation provides necessary but insufficient evidence of AI readiness. True benchmarking requires production performance measurement with continuous monitoring to ensure ongoing model effectiveness in real-world pharmacovigilance operations.

Best Practices: Operational Excellence in AI Model Management

Robust benchmarking extends beyond initial validation to encompass the entire AI lifecycle—continuous monitoring, systematic retraining, and comprehensive auditing that ensures sustained performance and regulatory compliance.

Automated Metric Dashboards: Implement real-time monitoring systems that track key performance indicators continuously, providing safety leadership with visibility into AI system health.

Essential Components:

Implementation Considerations: Dashboards should be accessible to both technical AI teams and pharmacovigilance leadership, with appropriate visualizations for each audience—technical teams may need detailed confusion matrices while safety leaders need high-level trend indicators.

Human-in-the-Loop Feedback Collection: Systematically capture human validation decisions, overrides, and corrections as signals of model performance and learning opportunities.

Data Collection Strategy:

Privacy and Ethics Considerations: Frame feedback collection as quality improvement rather than individual performance monitoring to maintain trust and encourage honest feedback.

Drift Detection and Root Cause Analysis: Implement statistical process control methods to identify when model performance degrades, then systematically investigate causes.

Drift Detection Techniques:

Root Cause Investigation Protocol: When drift is detected: (1) analyze recent cases for data quality issues, (2) review recent product additions or formulation changes, (3) check for regulatory or terminology updates, (4) examine whether new report sources exhibit different characteristics, (5) assess whether seasonal patterns affect reporting.

Scheduled Retraining Cadence: Establish regular retraining schedules appropriate to each application’s rate of change, rather than ad hoc retraining triggered by crises.

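Recommended Frequencies: Quarterly for signal detection, as the safety landscape shifts with new products and emerging safety data; semi-annual for medical coding, aligned with MedDRA version releases; semi-annual to annual for case classification, unless significant performance drift is detected.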

Retraining Triggers: In addition to scheduled retraining, trigger immediate retraining when: (1) performance metrics degrade >10% below baselines, (2) major changes affect the safety database (new product launches, acquisition of another company’s products), (3) regulatory terminology updates (new MedDRA version), (4) drift detection algorithms flag significant distribution shifts.

Training Data Management: Maintain curated, high-quality training datasets that evolve with production experience while preserving data governance and privacy.

Training Data Strategy:

Data Quality Standards: Establish acceptance criteria for training data—complete required fields, validated outcomes, appropriate de-identification, expert review completion, and exclude poor-quality cases that could degrade model learning.

Version Control and Rollback Capabilities: Maintain comprehensive versioning of models, training data, and configuration parameters to support audits and enable rapid rollback if issues arise.

Version Control Essentials:

Rollback Protocols: Define clear criteria for reverting to previous model versions (e.g., >15% performance degradation, unacceptable increase in serious AE false negatives) and test rollback procedures during non-critical periods.

A/B Testing and Champion-Challenger Deployment: Before fully deploying retrained models, validate improvements through controlled production experiments.

Implementation Approach:

Performance Validation Documentation: Maintain detailed records demonstrating that AI systems meet regulatory expectations for validation and ongoing monitoring.

Essential Documentation Elements:

Audit Trail Requirements: Ensure complete traceability for every AI-influenced decision, supporting regulatory inspection and quality investigations.

Audit Trail Components:

Retention Policies: Align audit trail retention with regulatory requirements for pharmacovigilance records, which can extend for decades for case safety reports and therefore call for scalable, secure archival systems.

Third-Party Model Validation: For high-risk applications or regulatory preparedness, engage independent validation services to provide an objective performance assessment.

Benefits: Third-party validation provides credible evidence for regulatory submissions, identifies blind spots internal teams may miss, and demonstrates organizational commitment to rigorous AI governance.

Validation Scope: Independent reviewers should assess model performance on held-out test sets, review training data quality and representativeness, evaluate documentation completeness, and test model behavior on edge cases.

Regulatory Inspection Readiness: Prepare comprehensive AI system documentation packages that anticipate regulatory authority questions.

Inspection Package Contents:

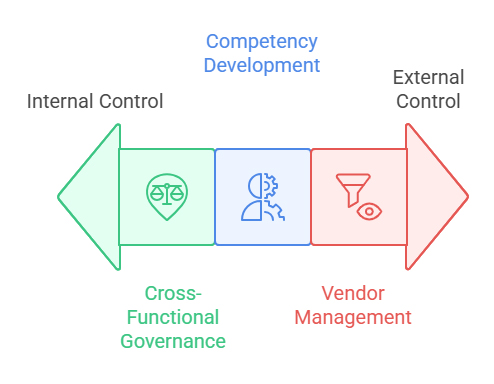

Cross-Functional Governance: Establish AI oversight committees, including pharmacovigilance, IT, quality assurance, regulatory affairs, and data science, to ensure balanced decision-making.

Governance Responsibilities: Approve new AI deployments, review performance monitoring reports, authorize model retraining, investigate significant deviations, and maintain the AI risk registry.

Competency Development: Invest in training programs ensuring pharmacovigilance professionals understand AI capabilities, limitations, and appropriate trust calibration.

Training Components: AI fundamentals for safety professionals, interpretation of performance metrics, recognition of potential AI errors, procedures for escalating concerns, and hands-on experience with AI-augmented workflows.

Vendor Management for Commercial AI: If using third-party AI solutions, establish rigorous vendor oversight, ensuring transparency and accountability.

Vendor Requirements: Access to performance validation data, notification of model updates, audit rights for inspection preparedness, escrow arrangements for source code access if the vendor discontinues products, and contractual liability provisions for AI-related quality issues.

These best practices transform AI benchmarking from a one-time validation exercise into an ongoing organizational capability that sustains performance, manages risk, and builds regulatory confidence over the entire AI lifecycle.

Conclusion: Measurable Standards as the Foundation for Regulatory Trust

The pharmaceutical industry’s journey toward widespread AI adoption in pharmacovigilance ultimately depends on establishing measurable standards that demonstrate performance, ensure accountability, and build regulatory confidence. Without robust benchmarking frameworks, AI risks remaining a promising technology deployed inconsistently, validated inadequately, and viewed skeptically by the health authorities responsible for protecting public health.

The figures cited in this analysis indicate that benchmarking delivers concrete value beyond regulatory compliance. Organizations with mature KPI frameworks report 3.2x higher AI investment approval rates, identify performance degradation 4-6 weeks earlier than those relying on reactive quality reviews, and resolve regulatory inspection findings related to AI systems 85% faster.

Key Takeaways for Pharmacovigilance Leaders:

Comprehensive Metric Selection: Moving beyond simplistic accuracy metrics to embrace precision, recall, F1-scores, time-to-signal, and robustness measures provides the multidimensional view necessary for high-stakes safety applications. Each metric illuminates different aspects of AI performance, and collectively they enable informed deployment decisions that balance efficiency gains against quality requirements.

Validation-Production Gap Planning: The 12-18% average performance degradation from validation to production is not a failure of AI technology but an expected consequence of real-world complexity. Organizations that plan for this gap through shadow deployments, gradual rollouts, and production fine-tuning budgets achieve successful implementations, while those expecting seamless transitions face costly surprises.

Continuous Monitoring as Core Capability: Benchmarking is not a one-time validation gate but an ongoing operational discipline. Real-time performance dashboards, drift detection algorithms, and systematic feedback collection transform AI from a static deployment into a continuously improving asset that adapts to evolving pharmacovigilance challenges.

Regulatory Alignment as Strategic Advantage: As health authorities worldwide develop AI oversight frameworks, organizations with transparent benchmarking documentation, comprehensive audit trails, and evidence-based validation will find regulatory interactions smoother and market access faster. Investment in rigorous benchmarking now pays dividends in regulatory efficiency later.

Industry Standardization Imperative: The current fragmentation in AI benchmarking practices—with only 38% of organizations employing formal frameworks—undermines collective progress. Industry initiatives to standardize PV AI benchmarks would accelerate adoption, enable vendor comparisons, and strengthen regulatory partnerships.

Looking ahead, pharmacovigilance AI will mature from early adoption experimentation to standardized, validated infrastructure supporting global drug safety surveillance. This maturation requires the industry to embrace benchmarking not as bureaucratic overhead but as the essential foundation for trustworthy AI—a foundation built on measurable performance, continuous improvement, and unwavering commitment to patient safety.

Organizations embarking on AI adoption should prioritize establishing benchmarking capabilities before deploying systems, invest in monitoring infrastructure alongside model development, and foster cultures where quantitative evidence guides AI decisions rather than optimism or vendor claims. This evidence-based approach transforms AI from a technological risk into a strategic asset that enhances pharmacovigilance effectiveness while maintaining the regulatory trust that underpins global drug safety systems.

Frequently Asked Questions

Q: What is the minimum acceptable accuracy for deploying AI in pharmacovigilance workflows?

A: There is no universal minimum accuracy threshold—requirements depend on the specific application, risk tolerance, and current baseline performance. For case classification, industry benchmarks suggest 90-95% accuracy, but organizations should compare this to existing manual process performance. If current human accuracy is 88%, expecting AI to achieve 95% immediately may be unrealistic. The critical principle is that AI should demonstrate non-inferiority to existing processes, with clear risk mitigation for any performance gaps. Additionally, accuracy alone is insufficient—recall (sensitivity) is often more important in safety-critical applications to ensure adverse events aren’t missed.

Q: How often should AI models be retrained in pharmacovigilance applications?

A: Retraining frequency depends on the application’s rate of change. Signal detection AI should be retrained quarterly as the safety landscape evolves with new products and emerging safety data. Medical coding AI benefits from semi-annual retraining aligned with MedDRA version releases. Case classification AI typically requires semi-annual to annual retraining unless significant performance drift is detected. Beyond scheduled retraining, implement continuous monitoring to trigger immediate retraining if performance degrades >10% below baseline, major database changes occur, or terminology updates are released.

Q: What KPIs are most important for regulatory inspections of AI systems?

A: Regulatory authorities prioritize several key metrics: (1) Recall/sensitivity demonstrating the system doesn’t miss critical safety events, (2) audit trail completeness showing every AI-influenced decision is traceable, (3) validation documentation proving systematic testing against predefined acceptance criteria, (4) ongoing monitoring evidence showing continuous performance assessment, (5) validation-production performance comparisons demonstrating real-world effectiveness, and (6) human oversight documentation showing qualified professionals validate AI outputs. Regulatory inspectors also examine how organizations handle AI errors, model updates, and quality deviations.

Q: How can organizations benchmark AI performance when ground truth is ambiguous?

A: Pharmacovigilance often involves subjective judgments where even experts disagree—for example, causality assessment or borderline seriousness determinations. In these scenarios: (1) establish expert consensus panels to create validated ground truth datasets, (2) measure inter-rater reliability (Cohen’s kappa) and require AI performance to meet or exceed human agreement rates, (3) use confidence scores to identify cases where AI is uncertain and route them for enhanced review, (4) track human override rates as a proxy for AI-human disagreement, and (5) focus benchmarking on clear-cut cases initially, gradually expanding to more ambiguous scenarios as models mature.

Q: What is the typical validation-production performance gap for PV AI systems?

A: Industry data shows an average 12-18% performance degradation when AI models move from controlled validation environments to production deployment. The gap varies by application: case classification typically degrades 8-12%, medical coding 10-15%, signal detection 15-20% due to evolving safety landscapes, and literature surveillance 12-18% as publication patterns change. Organizations should plan for this gap by setting deployment thresholds above the minimum acceptable production performance, running shadow mode testing before full deployment, budgeting for 3-6 months of production tuning, and maintaining continuous monitoring to detect degradation early.
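Setting a deployment threshold "above minimum acceptable production performance" is a small calculation once you decide how to read the degradation figures. The sketch below assumes the percentages are relative drops from the validation score (the industry figures do not say whether they are relative or absolute); the 0.85 production floor in the example is a hypothetical value.

```python
from typing import Optional


def required_validation_score(min_production: float,
                              expected_degradation: float) -> Optional[float]:
    """Validation score needed so that, after the expected relative drop,
    production performance still meets the minimum acceptable level.

    Assumes degradation is relative (0.12 means 12% below the validation score).
    Returns None when the target is unreachable (it would exceed 1.0).
    """
    if not 0 <= expected_degradation < 1:
        raise ValueError("expected_degradation must be in [0, 1)")
    required = min_production / (1 - expected_degradation)
    return required if required <= 1.0 else None


# Hypothetical production floor of 0.85 recall.
print(required_validation_score(0.85, 0.12))  # about 0.966
print(required_validation_score(0.85, 0.20))  # None: would need a score above 1.0
```

Under these assumptions, the upper end of the signal detection range would make a 0.85 floor unreachable on validation numbers alone, which illustrates why shadow mode testing and production tuning belong in the plan rather than relying on validation margins.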

Q: Should organizations develop custom AI models or purchase commercial solutions?

A: This decision depends on several factors evaluated through benchmarking: (1) test commercial solutions on your own data against your benchmarks rather than relying only on vendor claims, and expect vendors to provide validation evidence on representative datasets, (2) assess whether commercial solutions offer sufficient transparency for regulatory inspection readiness, (3) compare total cost of ownership, including licensing, integration, validation, and ongoing monitoring for commercial solutions versus development and maintenance costs for custom models, (4) weigh the strategic value of building internal AI capability against speed to deployment, and (5) examine vendor stability and long-term support commitments. Many organizations adopt hybrid approaches, using commercial solutions for well-standardized tasks and custom models for organization-specific workflows.
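The total cost of ownership comparison in point (3) reduces to simple arithmetic over a planning horizon. The sketch below is deliberately undiscounted, collapses costs into one-time and annual buckets, and assumes that custom models also carry validation and monitoring costs (the text lists these only for commercial solutions). The `CostProfile` fields and the example figures are hypothetical placeholders, not benchmarks.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostProfile:
    """Placeholder cost inputs in a single currency unit (hypothetical fields)."""
    one_time: float  # commercial: integration + initial validation; custom: development + validation
    annual: float    # commercial: licensing + monitoring; custom: maintenance + monitoring


def total_cost_of_ownership(profile: CostProfile, years: int) -> float:
    """Undiscounted TCO over a planning horizon of `years`."""
    return profile.one_time + profile.annual * years


def break_even_years(commercial: CostProfile, custom: CostProfile) -> Optional[float]:
    """Years after which the custom build becomes cheaper than the commercial option.

    Returns None if the custom option never catches up (its annual cost is not lower).
    """
    annual_saving = commercial.annual - custom.annual
    if annual_saving <= 0:
        return None
    return max(0.0, (custom.one_time - commercial.one_time) / annual_saving)


commercial = CostProfile(one_time=100, annual=50)  # illustrative units only
custom = CostProfile(one_time=300, annual=10)
print(break_even_years(commercial, custom))  # → 5.0
```

A real comparison would add discounting and the non-financial factors in points (2), (4), and (5), which this arithmetic does not capture.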

Q: How can organizations demonstrate AI non-inferiority to regulatory authorities?

A: Demonstrating non-inferiority requires systematic comparative studies: (1) establish baseline performance of current manual processes using the same metrics that will evaluate AI (accuracy, recall, precision, time-to-signal), (2) conduct parallel processing in which manual and AI methods process identical case sets, then compare outcomes, (3) perform retrospective validation showing AI would have detected known historical signals at least as quickly as traditional methods, (4) document statistical analyses with appropriate confidence intervals, testing against a non-inferiority margin prespecified in the validation protocol, (5) implement ongoing monitoring comparing AI performance to concurrent manual processing samples, and (6) maintain comprehensive audit trails enabling reconstruction of AI decision-making during inspections. Non-inferiority should be demonstrated not just on average performance but across critical subgroups and edge cases.
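Because parallel processing runs both methods on the same cases, the comparison is paired. The sketch below applies a Wald-type confidence bound for the difference of two paired proportions (here, recall on a set of confirmed adverse events) and declares non-inferiority when the lower bound stays above minus the margin. The margin value, the one-sided alpha of 0.025, and the choice of a Wald interval are assumptions for illustration; Wald intervals can behave poorly when discordant counts are small, and a statistician may prefer a score-based method.

```python
import math
from statistics import NormalDist


def paired_noninferiority(ai_hits: list[bool], manual_hits: list[bool],
                          margin: float, alpha: float = 0.025) -> tuple[float, float, bool]:
    """Non-inferiority check on paired detection outcomes for the same true events.

    ai_hits[i] / manual_hits[i]: whether each method detected true event i.
    Returns (recall difference AI - manual, lower confidence bound, non-inferior?).
    """
    if len(ai_hits) != len(manual_hits) or not ai_hits:
        raise ValueError("both methods must be scored on the same non-empty case set")
    n = len(ai_hits)
    b = sum(a and not m for a, m in zip(ai_hits, manual_hits))  # detected by AI only
    c = sum(m and not a for a, m in zip(ai_hits, manual_hits))  # detected manually only
    diff = (b - c) / n
    # Wald standard error for a difference of paired proportions.
    se = math.sqrt((b + c) - (b - c) ** 2 / n) / n
    z = NormalDist().inv_cdf(1 - alpha)
    lower = diff - z * se
    return diff, lower, lower > -margin


# 500 confirmed events: 440 found by both, 20 by AI only, 15 manually only, 25 by neither.
ai = [True] * 460 + [False] * 40
manual = [True] * 440 + [False] * 20 + [True] * 15 + [False] * 25
print(paired_noninferiority(ai, manual, margin=0.05))
```

With these illustrative counts the lower bound is about -0.013, so AI is non-inferior at a 5-point margin but would not be at a 1-point margin.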

Q: What are the most common benchmarking mistakes organizations make?

A: Frequent pitfalls include: (1) relying solely on accuracy metrics without examining precision, recall, and F1-scores, which obscures important performance trade-offs, (2) validating on artificially balanced datasets that don’t reflect production class distributions, (3) using random train-test splits rather than temporal splits, which fails to test the model’s ability to handle future cases, (4) neglecting to measure performance across subgroups (products, case types, severity levels) and thereby missing potential biases, (5) comparing AI to idealized human performance rather than actual operational baselines, (6) building insufficient production monitoring infrastructure, so that performance degradation is discovered too late, (7) inadequate documentation of validation protocols and results for regulatory inspection, and (8) focusing exclusively on technical metrics while ignoring operational KPIs like time-to-signal or processing efficiency that demonstrate business value.
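Pitfalls (1) and (3) are easy to demonstrate in a few lines. The sketch below computes the four standard binary metrics, showing how a model that never flags a rare class still scores high accuracy, and splits cases by date rather than at random. The `received` field name is a hypothetical placeholder, and returning 0.0 for precision when nothing is predicted positive is one common convention, not the only one.

```python
from datetime import date


def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0  # convention: 0.0 if no positives predicted
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1}


def temporal_split(records: list[dict], cutoff: date) -> tuple[list[dict], list[dict]]:
    """Train on cases received before the cutoff; test on cases from the cutoff onward."""
    train = [r for r in records if r["received"] < cutoff]
    test = [r for r in records if r["received"] >= cutoff]
    return train, test


# Imbalanced example: 5 serious cases out of 100, and a model that never flags any.
y_true = [1] * 5 + [0] * 95
y_pred = [0] * 100
print(classification_metrics(y_true, y_pred))
# → {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```

The same metrics function can be applied per subgroup (product, case type, severity) to address pitfall (4).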

Q: How should organizations set performance thresholds for alerting and model retraining?

A: Threshold-setting should be risk-based and data-driven: (1) establish baseline performance during initial validation; for example, if validation accuracy is 93%, set a warning threshold at 88% (a 5-percentage-point drop) and a critical threshold at 83% (a 10-percentage-point drop), (2) prioritize metrics aligned with patient safety, with tighter thresholds for recall/sensitivity than for precision, (3) use statistical process control methods to identify when variation exceeds normal fluctuation, (4) implement tiered response protocols in which warning thresholds trigger investigation and critical thresholds mandate immediate action or model suspension, (5) adjust thresholds based on accumulated experience; for example, if an 8-point drop consistently turns out to require retraining, tighten the critical threshold proactively, and (6) document threshold rationale and change management procedures for regulatory transparency. Review and update thresholds annually as organizational AI maturity increases.
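The tiered thresholds in point (1) and the statistical process control in point (3) can be sketched together. The tier check below uses percentage-point drops, matching the 93% / 88% / 83% example; the control limits follow a standard Shewhart p-chart for a proportion. Parameter names and defaults are illustrative assumptions, and separate `warning_drop` / `critical_drop` values per metric would express tighter thresholds for recall than for precision. For recall, `batch_size` should be the number of true positive cases in the batch, since that is the denominator of the proportion.

```python
import math


def threshold_status(current: float, baseline: float,
                     warning_drop: float = 0.05, critical_drop: float = 0.10) -> str:
    """Tiered status based on percentage-point drops from the validation baseline."""
    if current < baseline - critical_drop:
        return "critical"  # mandate immediate action or model suspension
    if current < baseline - warning_drop:
        return "warning"   # trigger investigation
    return "ok"


def p_chart_limits(baseline_rate: float, batch_size: int,
                   sigmas: float = 3.0) -> tuple[float, float]:
    """Shewhart p-chart control limits for a per-batch proportion."""
    spread = sigmas * math.sqrt(baseline_rate * (1 - baseline_rate) / batch_size)
    return max(0.0, baseline_rate - spread), min(1.0, baseline_rate + spread)


print(threshold_status(0.86, baseline=0.93))  # → warning
print(p_chart_limits(0.93, batch_size=200))   # roughly (0.876, 0.984)
```

The two checks answer different questions: the tiers flag drops that matter operationally, while the control limits flag batches whose variation is unlikely to be random noise, even if the drop is still inside the warning tier.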