As artificial intelligence becomes deeply embedded in pharmacovigilance workflows—from automated adverse event detection to signal prioritization—the demand for transparency has evolved from a nice-to-have feature to a regulatory and operational necessity. The black-box nature of traditional deep learning models, while powerful in identifying patterns across massive datasets, presents significant challenges when medical reviewers, regulators, and patients need to understand why a particular safety signal was flagged or how an AI system arrived at its conclusions.

The market reflects this transformation. The global Explainable AI (XAI) market was valued at approximately $7.94 billion in 2024 and is projected to reach $30.26 billion by 2032, growing at a compound annual growth rate (CAGR) of 18.2%. Some healthcare segments are projected to grow even faster: the market for explainable AI in medical imaging alone is forecast to grow at a 30.0% CAGR over the next decade. Three converging forces drive this surge: escalating regulatory scrutiny, the need for clinical trust in AI-assisted decisions, and mounting evidence that transparency directly correlates with safer, more effective pharmacovigilance outcomes.
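As a quick consistency check on the headline figures, the standard CAGR formula reproduces the stated rate, assuming annual compounding over the eight years from 2024 to 2032:

```latex
\text{CAGR} = \left(\frac{V_{2032}}{V_{2024}}\right)^{1/8} - 1
            = \left(\frac{30.26}{7.94}\right)^{1/8} - 1
            \approx 3.811^{0.125} - 1
            \approx 0.182
```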

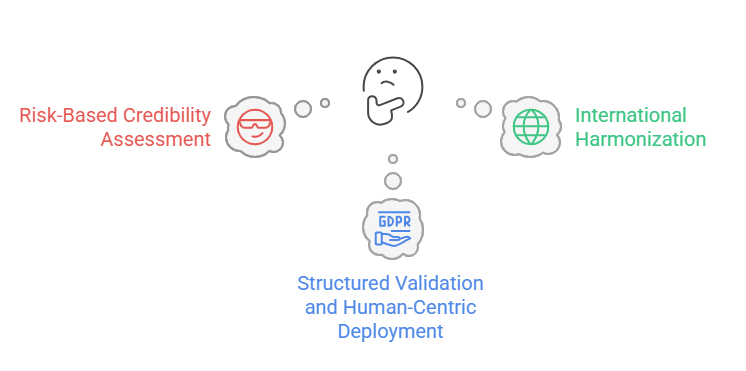

Approximately 80% of professionals in the pharmaceutical and life sciences sectors, including those at pharmaceutical companies and Contract Research Organizations (CROs), now use AI in some capacity. At the same time, regulators’ audit-trail and interpretability requirements have intensified dramatically. The European Medicines Agency’s (EMA) 2024 Reflection Paper on the use of AI in the medicinal product lifecycle explicitly emphasizes transparency, accessibility, validation, and continuous monitoring of AI systems. Similarly, the FDA’s January 2025 draft guidance, “Considerations for the Use of Artificial Intelligence to Support Regulatory Decision-Making for Drug and Biological Products,” introduced a risk-based credibility assessment framework that demands clear documentation of how AI models reach their conclusions.
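The draft guidance frames model risk as a combination of model influence (how much the AI output contributes to a decision relative to other evidence) and decision consequence (the impact of an adverse outcome if that decision is wrong), with higher-risk models warranting more extensive credibility evidence. The Python sketch below turns that idea into a simple lookup. The `Level` enum, the additive scoring rule, and its cut-offs are hypothetical assumptions for illustration; they are not the matrix published in the guidance.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


def model_risk(model_influence: Level, decision_consequence: Level) -> Level:
    """Combine the two risk factors into one tier.

    The additive ordinal rule below is an illustrative assumption,
    not the FDA's published risk matrix.
    """
    score = int(model_influence) + int(decision_consequence)  # ranges 0..4
    if score <= 1:
        return Level.LOW
    if score == 2:
        return Level.MEDIUM
    return Level.HIGH


# Example: an AI tool that alone decides whether a case is escalated (high
# influence), where a wrong call could delay a safety action (high consequence).
print(model_risk(Level.HIGH, Level.HIGH).name)
```

Keeping the two inputs separate makes the rationale for a risk tier auditable: a reviewer can see whether a high rating came from the model's influence, the decision's consequence, or both.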

The stakes are particularly high in adverse event detection. Unlike consumer applications, where AI errors might cause minor inconveniences, pharmacovigilance mistakes can delay critical safety interventions, compromise patient well-being, or lead to unnecessary market withdrawals of beneficial medications. Explainability is no longer optional—it’s foundational to the future of AI-powered drug safety.

The Importance of Transparency: Regulatory and Ethical Imperatives

Black-box AI models—neural networks and ensemble methods that deliver predictions without revealing their internal decision logic—have demonstrated remarkable accuracy in predicting adverse drug reactions. However, their opacity creates three critical vulnerabilities in pharmacovigilance contexts:

Beyond regulatory compliance, explainability addresses fundamental ethical obligations. When an AI system fails to detect a serious adverse event—or generates false positives that divert limited resources—stakeholders deserve clear accountability chains. Explainable AI enables:

The EMA’s reflection paper emphasizes a human-centric approach, requiring that AI deployment “comply with existing legal requirements, ethical standards, and fundamental rights.” This philosophy recognizes that pharmacovigilance, at its core, is about protecting human health—a mission that demands transparency at every stage.

Several XAI methodologies have emerged as particularly relevant for adverse event detection and pharmacovigilance applications. Each offers distinct advantages depending on the specific use case, model architecture, and stakeholder needs.

SHAP (SHapley Additive exPlanations) draws on Shapley values from cooperative game theory: each feature’s contribution to a prediction is its weighted average marginal effect across all possible combinations (coalitions) of the other features. In pharmacovigilance contexts, SHAP excels at revealing why certain drug-adverse event pairs receive high risk scores.
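To make the game-theoretic definition concrete, the sketch below computes exact Shapley values by enumerating every coalition, weighting each marginal contribution by |S|! (n - |S| - 1)! / n!. The `risk_score` model, its feature names, and the convention of setting "absent" features to a baseline of 0 are hypothetical assumptions; SHAP implementations typically average over a background dataset instead, and use approximations (KernelSHAP) or model-specific algorithms (TreeSHAP) because exact enumeration grows as O(2^n).

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, Sequence


def exact_shapley(value_fn: Callable[[FrozenSet[str]], float],
                  features: Sequence[str]) -> Dict[str, float]:
    """Exact Shapley values by enumerating all coalitions (O(2^n), toy sizes only)."""
    n = len(features)
    phi: Dict[str, float] = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(len(others) + 1):
            # Shapley weight for any coalition S of this size that excludes f
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of f when added to coalition S
                total += weight * (value_fn(s | {f}) - value_fn(s))
        phi[f] = total
    return phi


# Hypothetical toy risk model (not a validated pharmacovigilance model).
def risk_score(x: Dict[str, float]) -> float:
    return (0.1 + 0.4 * x["nsaid_dispensed"] + 0.02 * x["age_over_65"]
            + 0.3 * x["nsaid_dispensed"] * x["prior_mi"])


case = {"nsaid_dispensed": 1.0, "age_over_65": 1.0, "prior_mi": 1.0}
baseline = {"nsaid_dispensed": 0.0, "age_over_65": 0.0, "prior_mi": 0.0}


def value_fn(coalition: FrozenSet[str]) -> float:
    # Features in the coalition take the case's values; the rest take baseline values.
    x = {k: (case[k] if k in coalition else baseline[k]) for k in case}
    return risk_score(x)


phi = exact_shapley(value_fn, list(case))
print({k: round(v, 3) for k, v in phi.items()})
```

The 0.3 interaction term is split equally between `nsaid_dispensed` and `prior_mi`, and the attributions sum to `risk_score(case) - risk_score(baseline)`, the additivity property that lets reviewers reconcile an explanation with the score it explains.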

Application Example: A tree-based machine learning model trained on administrative health datasets can predict acute coronary syndrome (ACS) outcomes with high accuracy. In one such analysis, SHAP showed that dispensing features for specific NSAIDs (rofecoxib, celecoxib) made positive contributions to ACS predictions, correctly flagging drugs later confirmed to elevate cardiovascular risk. The analysis also consistently ranked demographic factors such as age and sex as important confounders, exactly as clinical pharmacology would predict.

Advantages:

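Considerations: Widely used SHAP estimators such as KernelSHAP assume feature independence when simulating "missing" features, which may oversimplify complex drug-drug interactions or comorbidity relationships. When features are highly collinear (common in pharmacovigilance datasets), credit can be split among correlated features in ways that do not reflect pharmacology, so SHAP values should be interpreted cautiously and validated across multiple model architectures.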
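Before interpreting SHAP values on correlated inputs, a simple screen can flag feature pairs whose attributions should be read jointly rather than one at a time. The sketch below uses pairwise Pearson correlation on synthetic data; the feature names (`crcl`, `egfr`, `age`) and the 0.8 cut-off are illustrative assumptions. Pairwise correlation misses collinearity involving three or more features, for which a variance inflation factor check is a common complement.

```python
import numpy as np


def flag_collinear_pairs(X: np.ndarray, names: list, threshold: float = 0.8) -> list:
    """Return (feature_a, feature_b, r) for pairs with |Pearson r| >= threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns are features
    flagged = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                flagged.append((names[i], names[j], float(corr[i, j])))
    return flagged


# Synthetic data with hypothetical features: two renal-function measures that
# track each other closely, plus an independent age feature.
rng = np.random.default_rng(0)
n = 1000
crcl = rng.normal(80, 20, n)
egfr = 0.9 * crcl + rng.normal(0, 5, n)
age = rng.normal(60, 12, n)
X = np.column_stack([crcl, egfr, age])

for a, b, r in flag_collinear_pairs(X, ["crcl", "egfr", "age"]):
    print(f"{a} ~ {b}: r = {r:.2f}; interpret their SHAP values jointly")
```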

LIME (Local Interpretable Model-agnostic Explanations) approximates a complex model’s behavior around a single prediction with a simple, interpretable surrogate. It perturbs the input features, observes how the predictions change, and fits a locally weighted linear model around the instance of interest.
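The perturb, weight, and fit loop described above can be sketched in a few lines of NumPy. This is a simplified, continuous-feature variant written for illustration: the reference `lime` package samples tabular perturbations from training-data statistics and typically discretizes features. The `black_box` model, feature names, noise scale, and kernel width are all hypothetical.

```python
import numpy as np


def black_box(Z: np.ndarray) -> np.ndarray:
    """Hypothetical nonlinear classifier returning P(serious) for each row."""
    z = 2.0 * Z[:, 0] + Z[:, 0] * Z[:, 1] - 0.5 * Z[:, 2]
    return 1.0 / (1.0 + np.exp(-z))


def local_surrogate(predict, x, n_samples=5000, scale=0.1, kernel_width=0.25, seed=0):
    """LIME-style explanation: weighted linear fit to predictions near x."""
    rng = np.random.default_rng(seed)
    # 1. Perturb the instance with small Gaussian noise.
    Z = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    y = predict(Z)
    # 2. Weight perturbed samples by proximity to x (exponential kernel).
    d = np.linalg.norm(Z - x, axis=1)
    w = np.exp(-(d ** 2) / kernel_width ** 2)
    # 3. Weighted least squares on centred features, with an intercept column.
    A = np.column_stack([np.ones(n_samples), Z - x])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[1:]  # local feature effects (intercept dropped)


x = np.array([1.0, 0.5, 1.0])
effects = local_surrogate(black_box, x)
for name, e in zip(["drug_a_exposure", "dose_level", "renal_function"], effects):
    print(f"{name}: {e:+.3f}")
```

Because the surrogate is fit only to samples near `x`, its coefficients approximate the black box's local slopes at that instance; the same feature can receive a different effect for a different patient.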

Application Example: For a patient experiencing multiple potential adverse events while on a complex medication regimen, LIME can identify which specific medications and patient characteristics most strongly influenced the AI system’s classification of a particular event as serious versus non-serious. This instance-level clarity helps medical reviewers focus their assessment efforts on the most relevant factors.

Advantages:

Considerations: LIME explanations are limited to local regions and may not capture global model behavior. The perturbation-based approach can create unrealistic feature combinations (e.g., impossible age-medication pairings), potentially yielding explanations that don’t reflect real-world pharmacology. Some validation studies suggest SHAP slightly outperforms LIME in pharmacovigilance applications, but LIME remains valuable for rapid, reviewable explanations of individual cases.

Attention mechanisms—particularly in natural language processing models used for processing adverse event narratives—reveal which portions of unstructured text the model focused on when making classifications.

Application Example: When processing FAERS (FDA Adverse Event Reporting System) narratives to identify suspected drugs causing adverse reactions, attention-weighted models can highlight specific phrases, co-medication mentions, or temporal sequences that triggered safety signals. Graph neural networks with attention mechanisms have successfully identified drug adverse reactions from social media data (Twitter), with attention weights revealing the specific posts and linguistic patterns that correlated with known adverse events.
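The attention weights such models expose are typically computed with scaled dot-product attention: a softmax over the dot products between a query vector and each token's key vector, divided by the square root of the key dimension. The sketch below uses a hypothetical six-token narrative with hand-picked key and query vectors (in a trained model these come from learned projections, usually across many heads and layers), so the resulting highlights illustrate the mechanics rather than any real model's behavior.

```python
import numpy as np


def attention_weights(query: np.ndarray, keys: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d))."""
    scores = keys @ query / np.sqrt(query.size)
    scores -= scores.max()  # numerical stability; softmax is shift-invariant
    e = np.exp(scores)
    return e / e.sum()


tokens = ["patient", "started", "warfarin", "then", "developed", "melena"]
# Hand-picked 4-d key vectors for illustration only; a trained model learns these.
keys = np.array([
    [0.1, 0.0, 0.5, 0.2],
    [0.0, 0.2, 0.1, 0.4],
    [1.5, 0.5, 0.0, 0.1],
    [0.0, 0.0, 0.3, 0.3],
    [0.2, 0.3, 0.2, 0.0],
    [0.6, 1.6, 0.1, 0.0],
])
query = np.array([1.0, 1.0, 0.0, 0.0])  # hypothetical "drug-event" query vector

w = attention_weights(query, keys)
for tok, a in sorted(zip(tokens, w), key=lambda t: -t[1]):
    print(f"{tok:10s} {a:.3f}")
```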

Advantages:

Considerations: Attention weights don’t always equate to causal importance; high attention may reflect data artifacts rather than the true signal. Attention patterns therefore require careful validation against pharmacological knowledge to ensure they align with biological plausibility.

Beyond instance-level explanations, global techniques like permutation importance, partial dependence plots, and accumulated local effects reveal overall model behavior patterns.
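Permutation importance measures how much a model's error grows when one feature's values are shuffled, which breaks that feature's relationship with the outcome while keeping its distribution. The sketch below implements it from scratch for a hypothetical regression model on synthetic data; scikit-learn offers a production version as `sklearn.inspection.permutation_importance`. Ideally, the scores are computed on held-out data rather than the training set.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature column is randomly shuffled."""
    rng = np.random.default_rng(seed)
    base = np.mean((predict(X) - y) ** 2)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        increases = []
        for _ in range(n_repeats):
            Xp = X.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])  # break the feature-target link
            increases.append(np.mean((predict(Xp) - y) ** 2) - base)
        importances[j] = np.mean(increases)
    return importances


# Hypothetical fitted model: uses feature 0 strongly, feature 1 weakly, ignores feature 2.
def predict(X):
    return 3.0 * X[:, 0] + 0.5 * X[:, 1]


rng = np.random.default_rng(1)
X = rng.normal(size=(1000, 3))
y = predict(X) + rng.normal(0, 0.1, 1000)

imp = permutation_importance(predict, X, y)
print(dict(zip(["drug_exposure", "age", "unused_feature"], imp.round(3))))
```

A feature the model ignores scores exactly zero, while a heavily used feature produces a large error increase, which is what makes the method useful for spotting the features that drive risk stratification.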

Application Example: In a machine learning model predicting whether adverse reactions lead to serious clinical outcomes (death, hospitalization, disability), global feature importance analysis can identify which drug characteristics (molecular structure properties, known interaction profiles) and which patient factors (age, comorbidities, concomitant medications) most consistently drive risk stratification across thousands of cases. This informs both model refinement and broader pharmacovigilance policy decisions about which drug-patient combinations warrant enhanced monitoring.
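Partial dependence, another of the global techniques mentioned above, fixes one feature at each value on a grid for every case and averages the model's predictions, tracing how predicted risk changes with that feature. The sketch below uses a hypothetical logistic model over age and number of concomitant medications with synthetic data; scikit-learn provides `sklearn.inspection.partial_dependence` for fitted estimators. Because partial dependence pairs each grid value with every observed value of the other features, it can average over unrealistic combinations when features are correlated, which is the problem accumulated local effects were designed to mitigate.

```python
import numpy as np


def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is set to each grid value for every row."""
    pd_values = []
    for v in grid:
        Xv = X.copy()
        Xv[:, feature] = v  # intervene on one feature, keep the others as observed
        pd_values.append(predict(Xv).mean())
    return np.array(pd_values)


# Hypothetical model: P(serious outcome) from age (col 0) and number of co-medications (col 1).
def predict(X):
    z = -3.0 + 0.04 * (X[:, 0] - 60) + 0.3 * X[:, 1]
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(2)
X = np.column_stack([rng.uniform(18, 90, 500), rng.poisson(3, 500).astype(float)])
grid = np.linspace(20, 90, 8)
pd_age = partial_dependence(predict, X, feature=0, grid=grid)
for age, p in zip(grid, pd_age):
    print(f"age {age:4.0f}: mean predicted risk {p:.3f}")
```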

Regulatory Outlook: Converging Toward Explainability Standards

Global regulatory authorities are rapidly establishing frameworks that elevate explainability from best practice to a mandatory requirement for AI systems supporting drug safety decisions.

The FDA’s January 2025 draft guidance introduces a credibility assessment framework specifically for AI models generating information to support regulatory decisions about drug safety, effectiveness, or quality. Key elements include:

In June 2025, the FDA launched “Elsa,” a generative AI tool within its high-security environment designed to summarize adverse events, support safety profile assessments, and expedite clinical protocol reviews—demonstrating the agency’s commitment to AI adoption paired with appropriate transparency mechanisms.

The EMA’s September 2024 Reflection Paper provides comprehensive guidance across the entire medicinal product lifecycle:

In March 2025, the EMA issued its first qualification opinion on AI methodology in clinical trials, accepting evidence generated by an AI tool for diagnosing inflammatory liver disease—a landmark demonstrating regulatory readiness to embrace AI when appropriate explainability standards are met.

While the International Council for Harmonisation (ICH) has not yet published specific XAI guidelines, the organization’s focus on harmonizing drug development standards positions it to play a critical role:

The trajectory is clear: by 2026-2027, explainability will transition from a competitive differentiator to a baseline compliance requirement for AI-powered pharmacovigilance systems seeking regulatory approval in major markets.

Future Possibilities: Emerging Trends in XAI for Drug Safety

As the regulatory landscape solidifies and XAI methodologies mature, several transformative trends are poised to reshape pharmacovigilance practices over the next 3-5 years.

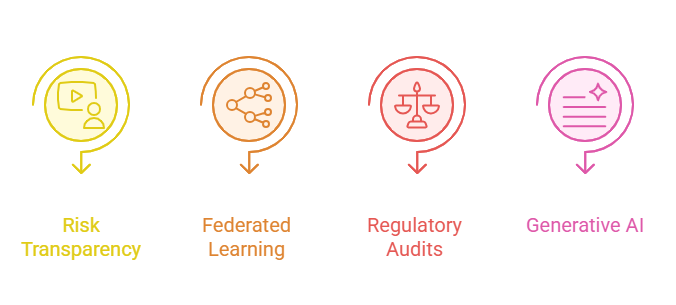

The future lies not in choosing between accuracy and interpretability, but in hybrid architectures that deliver both:

Next-generation pharmacovigilance platforms will integrate explainability into operational workflows:

Privacy-preserving federated learning allows multiple organizations to collaboratively train AI models without sharing sensitive patient data. The next frontier is making federated models explainable:

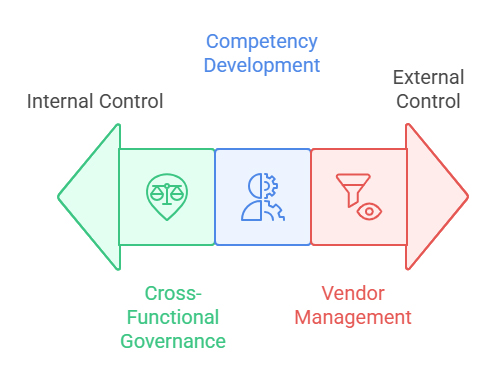
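The collaborative training described above is commonly implemented with federated averaging (FedAvg): each site runs a few training steps on its own data, and a coordinating server averages the resulting model weights in proportion to each site's sample count. The sketch below applies this to a linear model on synthetic data from three hypothetical organizations; the choice of FedAvg, the learning rate, and the round counts are illustrative assumptions, not a method the source specifies. Plain FedAvg is not a privacy guarantee on its own, since shared updates can leak information, so real deployments typically add secure aggregation or differential privacy.

```python
import numpy as np


def local_update(w, X, y, lr=0.1, epochs=5):
    """A few full-batch gradient-descent steps on one site's private data (MSE loss)."""
    w = w.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def fed_avg(sites, n_features, rounds=50):
    """Server loop: broadcast weights, collect local updates, average by sample count."""
    w = np.zeros(n_features)
    total = sum(len(y) for _, y in sites)
    for _ in range(rounds):
        updates = [local_update(w, X, y) for X, y in sites]
        # Only model weights leave each site; raw records never do.
        w = sum((len(y) / total) * u for (_, y), u in zip(sites, updates))
    return w


rng = np.random.default_rng(3)
true_w = np.array([2.0, -1.0])
sites = []
for n in (120, 300, 80):  # three hypothetical organizations of different sizes
    X = rng.normal(size=(n, 2))
    sites.append((X, X @ true_w + rng.normal(0, 0.1, n)))

w_global = fed_avg(sites, n_features=2)
print(w_global.round(3))
```

Because the aggregated model here is linear, its coefficients can be inspected directly; for complex federated models, the explanation techniques discussed earlier would need to run on the aggregated model, ideally without centralizing the background data they rely on.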

Anticipating regulatory requirements, the industry will develop standardized explainability audit protocols:

While generative AI (large language models, multimodal systems) poses unique explainability challenges, emerging approaches show promise:

The FDA’s active exploration of generative AI applications (evidenced by its 2024 Digital Health Advisory Committee discussions on “Total Product Lifecycle Considerations for Generative AI-Enabled Devices”) signals openness to these technologies when appropriate explainability frameworks accompany them.

Conclusion

Explainable AI represents not merely a technical evolution in pharmacovigilance but a fundamental transformation in how pharmaceutical companies, regulators, and healthcare providers approach drug safety. The convergence of market growth (projected at roughly 18–30% CAGR, depending on the XAI segment) with regulatory expectations from the FDA, EMA, and emerging global standards sends an unambiguous message: transparency is the price of entry for AI in drug safety.

The techniques discussed—SHAP, LIME, attention mechanisms, and emerging hybrid frameworks—provide the methodological toolkit to achieve this transparency without sacrificing predictive performance. The regulatory outlook, characterized by risk-based credibility assessments and human-centric deployment principles, offers clear pathways for compliant implementation.

For organizations at the forefront of AI-driven pharmacovigilance, the strategic imperative is clear: invest in explainability now, not as a retrofit to satisfy auditors later. Build transparency into system architecture from inception. Train teams to interpret and validate AI explanations. Engage early with regulators to demonstrate commitment to responsible innovation.

The future of drug safety is intelligent, adaptive, and transparent. Explainable AI is the bridge between algorithmic power and human wisdom—ensuring that as AI systems grow more capable, they remain trustworthy partners in the mission to protect patient health.

Frequently Asked Questions

Q1: What is the difference between AI interpretability and explainability in pharmacovigilance?

Interpretability refers to the degree to which humans can understand the predictions of an AI model, while explainability relates to the ability to describe the internal logic and mechanisms the model uses to reach decisions. In pharmacovigilance, both are essential: interpretability allows medical reviewers to trust AI-generated safety signals, while explainability enables validation of the model’s reasoning against established pharmacological principles. Techniques like SHAP provide both by showing which features influenced predictions and quantifying their contributions.

Q2: Are explainable AI models less accurate than black-box models?

Not necessarily. While historically there was a perceived trade-off between interpretability and accuracy, modern XAI techniques often achieve comparable predictive performance. Ensemble methods can combine interpretable components with complex models to balance both objectives. More importantly, in regulated pharmacovigilance contexts, a highly accurate but unexplainable model may be operationally useless if regulators won’t accept it or medical reviewers won’t trust it. The most “accurate” model is the one that combines strong predictive power with sufficient transparency to be actionable in practice.

Q3: How are the FDA and EMA aligning on explainable AI requirements?

While regulatory approaches differ in specifics, both agencies converge on core principles: risk-based oversight proportional to potential patient harm, requirements for transparency and documentation, continuous performance monitoring, and human oversight. The FDA’s January 2025 guidance emphasizes credibility assessment frameworks, while the EMA’s 2024 Reflection Paper provides detailed lifecycle guidance. Both reference Good Machine Learning Practice principles developed jointly with international partners. Organizations developing AI systems for multi-regional approval should design for the most stringent requirements (typically the EU AI Act/EMA standards) to ensure global compliance.

Q4: Which explainability technique is best for adverse event detection?

The optimal technique depends on the specific use case. For tabular data (patient demographics, lab values, medication histories), SHAP values provide comprehensive, model-agnostic explanations suitable for both instance-level case reviews and global pattern analysis. For unstructured narrative text (FAERS reports, clinical notes), attention visualization mechanisms excel at showing which text passages influenced classifications. For rapid, intuitive explanations of individual cases, LIME offers accessible visualizations. Leading pharmacovigilance systems increasingly implement multiple XAI techniques, allowing reviewers to triangulate understanding across different explanation modalities and validate that explanations are consistent and clinically plausible.
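One simple way to check that different explanation modalities agree is to compare the feature rankings they produce. The sketch below computes Spearman rank correlation and top-k overlap between two attribution vectors; the attribution values are made-up illustrative numbers, not outputs of any real model, and the 0.7 review threshold is a hypothetical choice. The rank helper assumes no ties.

```python
import numpy as np


def ranks(v: np.ndarray) -> np.ndarray:
    """Rank positions (0 = smallest); assumes no ties for simplicity."""
    r = np.empty(len(v))
    r[np.argsort(v)] = np.arange(len(v))
    return r


def spearman_rho(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman rank correlation, computed as the Pearson correlation of the ranks."""
    return float(np.corrcoef(ranks(a), ranks(b))[0, 1])


def top_k_overlap(a, b, names, k=2) -> float:
    """Fraction of the top-k features (by attribution magnitude) shared by both methods."""
    top_a = {names[i] for i in np.argsort(-np.abs(a))[:k]}
    top_b = {names[i] for i in np.argsort(-np.abs(b))[:k]}
    return len(top_a & top_b) / k


names = ["age", "sex", "nsaid_dispensed", "n_concomitant_meds", "renal_function"]
# Made-up illustrative attributions, e.g. mean |SHAP| and permutation importance.
method_a = np.array([0.30, 0.05, 0.50, 0.20, 0.10])
method_b = np.array([0.25, 0.02, 0.60, 0.10, 0.15])

rho = spearman_rho(method_a, method_b)
overlap = top_k_overlap(method_a, method_b, names, k=2)
print(f"Spearman rho = {rho:.2f}, top-2 overlap = {overlap:.0%}")
if rho < 0.7:  # hypothetical review threshold
    print("Explanations disagree: route to a medical reviewer")
```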