For decades, pharmacovigilance has operated primarily as a reactive discipline—identifying safety signals after adverse events accumulate, investigating risks once reporting patterns emerge, and implementing risk mitigation only after harm has already affected patient populations. This retrospective approach, while essential, inherently lags behind the manifestation of safety issues, creating an unavoidable gap between when risks emerge and when protective actions are taken.

Predictive pharmacovigilance represents a fundamental transformation in this paradigm. By leveraging artificial intelligence, machine learning, and vast repositories of real-world data, predictive approaches aim to identify safety risks before they manifest in widespread patient harm—shifting the discipline from damage assessment to damage prevention.

The transition from reactive to predictive drug safety is accelerating rapidly, driven by technological maturation and expanding data availability:

Real-World Data Integration Growth: Analysis of pharmaceutical industry investments shows that real-world data (RWD) integration into pharmacovigilance systems has grown 340% since 2020. Organizations now routinely incorporate electronic health records, insurance claims databases, patient registries, and wearable device data alongside traditional spontaneous reports—creating unprecedented opportunities for predictive modeling.

AI-Based Risk Prediction Accuracy: Recent validation studies of predictive pharmacovigilance models demonstrate substantial improvements over traditional methods:

Market and Regulatory Momentum: The predictive pharmacovigilance market is projected to grow from $240 million in 2024 to $890 million by 2030, representing a compound annual growth rate (CAGR) of 24.3%. This growth reflects both technological readiness and increasing regulatory acceptance of real-world evidence in safety evaluation.

Data Volume Enabling Prediction: The volume of health data available for predictive modeling has reached unprecedented scale:

Regulatory Evolution: Health authorities are increasingly receptive to predictive approaches. The FDA’s Sentinel System, launched in 2016 and continuously expanded, now actively queries data from over 225 million patients for proactive safety surveillance. The European Medicines Agency’s DARWIN EU initiative, operational since 2022, explicitly incorporates predictive analytics capabilities for anticipatory risk assessment.

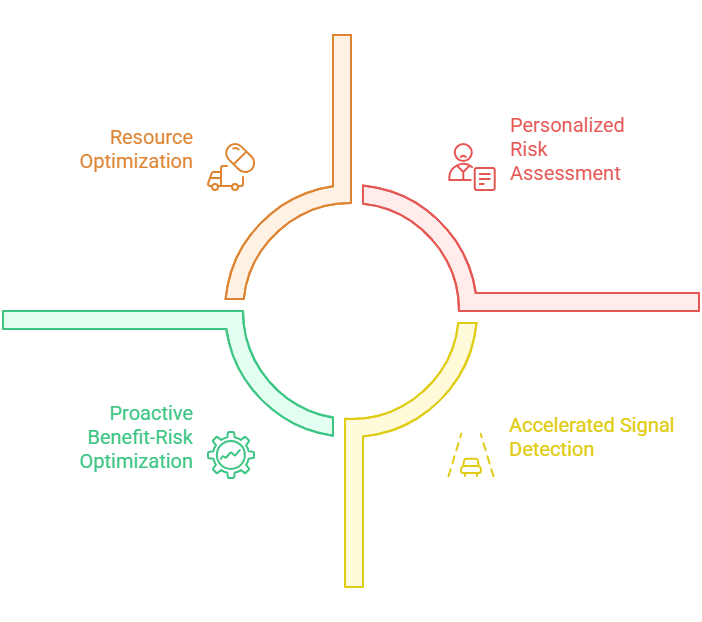

The shift to predictive approaches delivers measurable benefits across multiple dimensions:

Accelerated Signal Detection: Traditional signal detection relies on accumulating sufficient adverse event reports to achieve statistical significance—a process that can take months or years for rare events. Predictive models can identify elevated risk patterns from smaller datasets by incorporating diverse data sources and learning from historical signal evolution patterns, potentially preventing hundreds or thousands of patient exposures to unrecognized risks.
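
To make the statistical-significance bottleneck concrete, the sketch below uses the proportional reporting ratio (PRR), a common disproportionality statistic. The source does not name a specific method, so the choice of PRR is an assumption, and all counts are invented for illustration.

```python
import math

def prr_with_ci(a, b, c, d, z=1.96):
    """Proportional reporting ratio with an approximate 95% confidence interval.

    a: reports with the drug of interest and the event of interest
    b: reports with the drug of interest and any other event
    c: reports with all other drugs and the event of interest
    d: reports with all other drugs and any other event
    """
    prr = (a / (a + b)) / (c / (c + d))
    # Standard error of log(PRR) for a 2x2 contingency table.
    se_log = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(prr) - z * se_log)
    upper = math.exp(math.log(prr) + z * se_log)
    return prr, lower, upper

# Invented counts: the same reporting ratio early (few reports) and later (many reports).
few = prr_with_ci(a=3, b=497, c=200, d=99300)
many = prr_with_ci(a=30, b=4970, c=2000, d=993000)

for label, (prr, lower, upper) in (("early", few), ("later", many)):
    print(f"{label}: PRR={prr:.2f}, 95% CI=({lower:.2f}, {upper:.2f})")
```

With these invented counts the point estimate is identical in both cases, but only the larger dataset yields a confidence interval that excludes 1. That waiting period is the delay predictive approaches aim to shorten.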

Personalized Risk Assessment: Rather than treating all patients as equally at risk, predictive models can stratify individual patient vulnerability based on genetic factors, comorbidities, concomitant medications, and demographic characteristics. This enables targeted risk mitigation for high-risk populations while avoiding unnecessary restrictions for low-risk groups.

Proactive Benefit-Risk Optimization: Predictive analytics enables continuous, real-time benefit-risk evaluation rather than periodic assessments. As new safety data accumulates and patient populations evolve, models can automatically recalculate benefit-risk profiles and alert when they shift unfavorably—enabling preemptive label updates or risk management adjustments.

Resource Optimization: By prioritizing investigation of high-probability risks identified through prediction, organizations can allocate limited pharmacovigilance resources more efficiently—focusing medical review, epidemiological studies, and regulatory communications on scenarios most likely to represent genuine safety concerns.

Regulatory Confidence: Demonstrating proactive risk identification through predictive analytics builds regulatory trust by showing organizations are ahead of safety issues rather than perpetually reacting to them. This proactive posture can influence regulatory decision-making regarding product approvals, label negotiations, and post-marketing requirements.

This introduction establishes that predictive pharmacovigilance is not speculative futurism but an emerging reality with quantifiable performance characteristics, growing market adoption, and regulatory acceptance—representing the next evolutionary stage in pharmaceutical safety science.

Leveraging Historical Data: The Foundation of Predictive Intelligence

Predictive pharmacovigilance models derive their forecasting capabilities fundamentally from patterns encoded in historical safety data. The quality, comprehensiveness, and appropriate utilization of legacy data directly determine predictive model performance and reliability.

Spontaneous Adverse Event Databases: Global safety databases contain decades of accumulated adverse event reports—the FDA’s FAERS database alone holds over 25 million Individual Case Safety Reports (ICSRs) dating back to 1969. These repositories capture:

Clinical Trial Safety Archives: Pharmaceutical companies maintain comprehensive clinical trial databases documenting adverse events observed in controlled research settings. Unlike spontaneous reports, clinical trial data offer:

Post-Marketing Surveillance History: Years of post-marketing safety experience reveal how risks evolve across product lifecycles:

Signal Evolution Learning: Predictive models analyze historical cases where initial weak signals evolved into confirmed safety risks, identifying distinguishing characteristics:

Pattern Recognition: Models learn that signals with certain characteristics—rapid reporting rate acceleration, consistent temporal relationships, clustering in specific patient subgroups, biologically plausible mechanisms—are more likely to represent true risks than statistical noise.

Early Warning Indicators: By studying the earliest reports in confirmed signal case series, models identify subtle features present before statistical significance was achieved—unusual event severity, distinctive narrative descriptions, specific concomitant medication patterns.

False Positive Discrimination: Equally important, models learn characteristics of signals that appeared concerning but ultimately proved spurious—helping distinguish true emerging risks from confounded associations, reporting artifacts, or coincidental temporal clustering.

Drug Class Safety Profiling: Historical data enables models to learn safety patterns characteristic of entire pharmacological classes:

Class Effect Prediction: When a new drug enters a class with established safety concerns (e.g., selective serotonin reuptake inhibitors and bleeding risk), models can predict elevated probability that the new agent shares those risks, prioritizing targeted monitoring.

Mechanism-Based Risk Forecasting: Drugs with similar mechanisms of action tend to exhibit related adverse event profiles. Models trained on mechanism-safety relationships can forecast likely risks for novel agents based on pharmacological properties.

Metabolic Pathway Considerations: Drugs metabolized via common pathways often share interaction risks and contraindications. Historical data linking metabolic characteristics to safety outcomes enables prediction for new molecular entities.

Addressing Historical Biases: Legacy safety databases contain inherent biases that must be recognized and mitigated:

Reporting Bias Evolution: Spontaneous reporting patterns change over time due to media attention, healthcare provider awareness, and regulatory communications. Models must account for the fact that current reporting patterns may differ systematically from historical periods.

Population Representation Gaps: Historical clinical trials often underrepresented women, elderly patients, pediatric populations, and racial/ethnic minorities. Predictive models trained exclusively on historical data may perform poorly for underrepresented groups.

Healthcare System Changes: Practice patterns, concomitant medication usage, and healthcare delivery models have evolved substantially over the decades. Historical safety patterns may not translate directly to current clinical contexts.

Data Harmonization Challenges: Integrating historical data from diverse sources requires addressing:

Terminology Evolution: Medical terminology and coding systems change over time—MedDRA undergoes version updates, ICD codes evolve, and clinical nomenclature shifts. Retrospective harmonization ensures consistent interpretation across temporal data.

Completeness Variations: Data completeness standards have improved over time. Recent reports typically contain more detailed patient information, dosing data, and outcome descriptions than legacy records.

Causality Assessment Consistency: Historical approaches to causality evaluation have varied across organizations and time periods. Standardizing or appropriately weighting causality judgments ensures valid model training.

Pharmacological Knowledge Integration: Combining safety databases with structured pharmacological information enhances predictive capability:

Drug Ontologies: Formal ontologies describing drug properties—molecular structure, targets, pharmacodynamics, pharmacokinetics—enable models to generalize from observed safety patterns to chemically or mechanistically similar compounds.

Protein Target Mapping: Linking drugs to their molecular targets and mapping target expression patterns across human tissues helps predict organ-specific toxicities based on biological principles.

Drug-Drug Interaction Databases: Historical pharmacokinetic and pharmacodynamic interaction data inform predictions about safety risks in patients taking multiple medications.

Biomedical Literature Mining: The published literature contains extensive safety evidence that can augment structured databases:

Case Report Extraction: Mining medical literature for published adverse event case reports captures detailed clinical descriptions often unavailable in regulatory databases.

Mechanistic Evidence: Preclinical studies, pharmacological research, and toxicology literature provide mechanistic insights that contextualize observed clinical safety patterns.

Systematic Review Synthesis: Meta-analyses and systematic reviews represent expert synthesis of safety evidence across multiple studies—valuable signals for model training.

Genetic and Biomarker Data: Pharmacogenomic research has identified genetic variants that modify drug safety risk:

Pharmacogenetic Risk Alleles: Known associations between genetic variants and adverse events (e.g., HLA-B*57:01 and abacavir hypersensitivity) can be incorporated into predictive models for personalized risk assessment.

Biomarker Correlations: Historical data linking laboratory values or biomarkers to subsequent adverse event risk enables proactive monitoring recommendations.

By comprehensively leveraging historical safety data—properly harmonized, bias-adjusted, and enriched with external knowledge—predictive models develop the pattern recognition capabilities necessary to forecast future risks from emerging signals and patient characteristics.

Complementary Rather Than Replacement: The comparison reveals that predictive pharmacovigilance should augment rather than replace traditional approaches. Spontaneous reporting remains essential for capturing detailed clinical narratives and rare, unpredictable events, while predictive methods excel at early pattern detection across populations.

Data Infrastructure as Strategic Asset: The most fundamental difference lies in data foundation—traditional PV operates on thousands of spontaneous reports while predictive PV leverages millions of electronic health records and billions of healthcare transactions. Organizations investing in data integration infrastructure gain transformative capabilities.

Earlier Intervention, Greater Impact: The 8-12 week signal detection acceleration represents the core value proposition. In drug safety, earlier identification of risks—even by weeks—can prevent substantial patient harm and reduce regulatory scrutiny.

Resource Reallocation Opportunity: While predictive PV requires upfront investment, it enables strategic redeployment of pharmacovigilance professionals from routine case screening toward higher-value activities like causality investigation, risk-benefit evaluation, and strategic safety planning.

Maturity Gradient: Organizations should view this comparison as a maturity progression rather than a binary choice—beginning with enhanced analysis of traditional data sources using AI, gradually incorporating real-world data streams, and ultimately achieving fully integrated predictive surveillance.

This comparative framework helps stakeholders understand both the transformative potential and practical implementation considerations of transitioning toward predictive pharmacovigilance capabilities.

Real-Time Data Integration: The Fuel for Predictive Intelligence

Predictive pharmacovigilance’s forecasting power derives fundamentally from integrating diverse real-time data streams that provide comprehensive, longitudinal views of patient safety unavailable through traditional spontaneous reporting alone.

Clinical Depth and Longitudinal Coverage: EHR systems capture the complete patient clinical picture—diagnoses, laboratory results, vital signs, procedures, medication orders, and clinical notes—creating rich datasets for safety surveillance.

Safety Signal Advantages: EHR data enables identification of adverse events never spontaneously reported, detection of long latency reactions through years of follow-up, and correlation of adverse events with objective clinical measures rather than subjective reporting.

Integration Approaches: Organizations access EHR data through: (1) distributed query networks like FDA Sentinel that query multiple health systems without centralized data pooling, (2) claims-linked EHR databases combining billing data with clinical details, (3) direct partnerships with large health systems providing de-identified research datasets, and (4) patient-authorized data sharing through interoperability frameworks like FHIR.
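
The distributed-query idea in approach (1) can be sketched as follows. This is a minimal illustration of the principle that only aggregate counts leave each site. It is not the Sentinel implementation; the site names, record layout, and function names are hypothetical.

```python
from collections import Counter

def run_local_query(records, drug, event):
    """Runs inside one health system; only aggregate counts are returned."""
    exposed = [r for r in records if drug in r["drugs"]]
    return Counter(
        exposed=len(exposed),
        exposed_with_event=sum(1 for r in exposed if event in r["events"]),
    )

def pooled_counts(sites, drug, event):
    """Coordinator side: sums per-site aggregates without seeing patient rows."""
    total = Counter()
    for records in sites.values():
        total.update(run_local_query(records, drug, event))
    return total

# Hypothetical patient-level data that never leaves its site.
SITES = {
    "site_a": [
        {"drugs": {"drug_x"}, "events": {"hepatotoxicity"}},
        {"drugs": {"drug_x"}, "events": set()},
        {"drugs": {"drug_y"}, "events": {"hepatotoxicity"}},
    ],
    "site_b": [
        {"drugs": {"drug_x", "drug_y"}, "events": set()},
        {"drugs": {"drug_x"}, "events": {"hepatotoxicity"}},
    ],
}

totals = pooled_counts(SITES, "drug_x", "hepatotoxicity")
print(totals["exposed_with_event"], "of", totals["exposed"], "exposed patients had the event")
```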

Real-Time Predictive Applications: Machine learning models analyzing EHR data streams can:

Quantitative Impact: Studies comparing EHR-based surveillance to spontaneous reporting show EHR systems capture 12-35 times more adverse events, with particularly strong advantages for less dramatic events that don’t prompt reporting (mild hepatotoxicity, asymptomatic lab abnormalities, gradually developing conditions).

Implementation Challenges: Despite compelling advantages, EHR integration faces hurdles:

Population-Scale Exposure-Outcome Tracking: Claims databases document medication dispensing and healthcare utilization for millions of patients across extended timeframes—ideal for pharmacoepidemiological safety studies.

Unique Strengths: Claims data provides: (1) complete medication exposure history including all filled prescriptions, (2) temporal sequencing showing precise timing of drug exposure relative to diagnosed conditions, (3) healthcare resource utilization reflecting adverse event severity, (4) longitudinal follow-up often spanning years or decades, and (5) large sample sizes enabling detection of rare events.

Predictive Applications: AI models analyzing claims patterns can:

Limitations and Biases: Claims data depends on billing codes that may not capture clinical nuance, reflects only events that prompt healthcare utilization (missing mild reactions), and may contain coding variations across providers and health systems.

Unfiltered Patient Perspectives: Social media platforms, patient forums, and health apps capture patient-reported experiences in real-time, complementing healthcare provider-generated data with patient voices.

Signal Detection Potential: Natural language processing of social media reveals:

Validation and Context: A 2023 analysis of social media pharmacovigilance found that AI models monitoring Twitter, Reddit, and patient forums identified confirmed adverse event signals an average of 3-8 weeks before appearance in regulatory databases for 62% of analyzed signals, demonstrating real predictive value despite inherent data quality limitations.

Methodological Considerations: Social media surveillance requires sophisticated approaches:

Continuous Physiological Surveillance: Consumer wearables, remote patient monitoring devices, and implantable sensors capture physiological parameters continuously—heart rate, rhythm, activity, sleep, blood glucose, blood pressure—enabling detection of adverse events through objective physiological signals.

Predictive Pharmacovigilance Applications:

Early Warning Capabilities: Machine learning models analyzing wearable data streams can identify subtle physiological changes preceding clinically apparent adverse events—potentially enabling intervention before serious harm occurs.

Integration Challenges: Wearable data presents unique hurdles:

Multi-Stream Integration Architecture: The true power of predictive pharmacovigilance emerges from integrating these diverse data streams into unified surveillance systems:

Complementary Strengths: EHRs provide clinical depth, claims data offer longitudinal population coverage, social media captures patient perspectives, and wearables deliver continuous physiological monitoring. Integrated analysis triangulates evidence across data sources, strengthening signal confidence.

Technical Infrastructure: Real-time integration requires:

Quantitative Impact of Integration: Research comparing single-source versus integrated surveillance demonstrates:

This real-time data integration transforms pharmacovigilance from a periodic review process into continuous, dynamic surveillance capable of identifying emerging risks and vulnerable patients before widespread harm occurs—the essence of predictive drug safety.

Predictive Models in Action: Frameworks for Early Risk Detection

Translating predictive pharmacovigilance concepts into operational reality requires specific modeling approaches tailored to different safety surveillance objectives. The following frameworks represent current best practices for early risk detection across key use cases.

Objective: Forecast which early drug-event associations will develop into confirmed safety signals requiring regulatory action versus which represent statistical noise or confounding.

Modeling Approach: Features: Reporting pattern characteristics (velocity of new reports, report source diversity, geographic distribution); Patient demographics (age clusters, gender patterns); Event characteristics (severity distribution, time-to-onset patterns, dose-response evidence); Drug characteristics (mechanism of action, pharmacological class, chemical structure similarities to drugs with known safety issues); Literature evidence (published case reports, mechanism plausibility); Biological context (target organ expression patterns, known drug-target interactions)

Algorithm: Gradient boosting machines (XGBoost, LightGBM) or ensemble models combining multiple algorithms, trained on historical examples in which early signals were either confirmed as true risks or resolved as false positives

Output: Probability score (0-1) indicating the likelihood that a drug-event association will be confirmed as a genuine safety signal within 12 months; confidence intervals; feature importance scores explaining the prediction rationale

Performance Benchmarks:

Implementation Example: A pharmaceutical company monitoring a recently launched product identifies 15 potential safety signals from spontaneous reports after 6 months on market. Traditional methods cannot yet distinguish true risks from noise due to limited data. The signal evolution prediction model analyzes multi-dimensional features and assigns probability scores:

Regulatory Considerations: Signal evolution predictions support risk-based investigation prioritization but should not replace regulatory reporting obligations. Organizations must document prediction model validation, maintain audit trails of all predictions, and ensure human expert review of model outputs before decision-making.

Objective: Calculate personalized adverse event risk scores for patients being prescribed medications, enabling targeted monitoring and risk mitigation for vulnerable individuals.

Modeling Approach: Features: Patient demographics (age, sex, race/ethnicity); Comorbidity profile (chronic conditions, disease severity); Concomitant medications (polypharmacy, drug-drug interactions); Genetic/pharmacogenomic data (when available); Organ function parameters (renal function, hepatic function); Historical adverse event experience; Healthcare utilization patterns; Physiological parameters from wearables (when available)

Algorithm: Logistic regression for interpretability, random forests for improved performance, or neural networks for complex non-linear patterns; models trained separately for each specific adverse event of interest

Output: Individualized risk score (often expressed as predicted probability or risk category: low/moderate/high/very high); risk factor contributions showing which patient characteristics most influence the prediction; monitoring recommendations aligned with risk level
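
The features, algorithm, and output described above can be sketched with the simplest option mentioned, logistic regression. This is a minimal sketch: the coefficients, feature names, and category cut-offs are hypothetical placeholders, where a real model would estimate them from validated data.

```python
import math

# Hypothetical coefficients for illustration only.
INTERCEPT = -4.0
COEFFICIENTS = {
    "age_over_75": 0.9,
    "renal_impairment": 0.8,
    "on_antiplatelet": 0.7,
    "prior_bleed": 1.1,
}
# Hypothetical probability cut-offs for the risk categories.
CATEGORY_CUTOFFS = [(0.05, "low"), (0.15, "moderate"), (0.30, "high")]

def risk_score(patient):
    """Returns (probability, category, per-feature contributions to the log-odds)."""
    contributions = {k: w * patient.get(k, 0) for k, w in COEFFICIENTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    probability = 1 / (1 + math.exp(-logit))
    category = next(
        (label for cutoff, label in CATEGORY_CUTOFFS if probability < cutoff),
        "very high",
    )
    return probability, category, contributions

probability, category, contributions = risk_score(
    {"age_over_75": 1, "renal_impairment": 1}
)
print(f"{probability:.3f} -> {category}")
```

Because the model is linear on the log-odds scale, the `contributions` dictionary doubles as the "risk factor contributions" output. That interpretability is the reason the text lists logistic regression first.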

Performance Benchmarks:

Implementation Example: A health system implements patient risk stratification for anticoagulant-associated major bleeding:

Objective: Forecast when patients are likely to experience adverse events following medication initiation, enabling optimized monitoring schedules and early intervention windows.

Modeling Approach: Features: All features from individual risk stratification plus temporal patterns (typical time-to-onset distributions from historical data, dose escalation schedules, treatment duration effects)

Algorithm: Survival analysis methods (Cox proportional hazards models, random survival forests) or recurrent neural networks for time-series prediction

Output: Predicted time-to-event distribution showing probability of adverse event occurrence across time windows (weeks 1-4, months 2-3, months 4-6, etc.); hazard ratios for different patient characteristics; optimal monitoring schedule recommendations
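
As a rough illustration of a time-to-event output, the sketch below computes a Kaplan-Meier survival curve. This is a simpler non-parametric estimator swapped in for the Cox or random-survival-forest models named above, and it ignores patient covariates. The observation data are invented.

```python
def kaplan_meier(observations):
    """observations: list of (time_in_weeks, event_observed) pairs.

    event_observed is False for censored patients (lost to follow-up or
    still event-free at last contact). Returns [(time, survival_probability)]
    at each time where at least one event occurred.
    """
    at_risk = len(observations)
    survival = 1.0
    curve = []
    for t in sorted({time for time, _ in observations}):
        events = sum(1 for time, observed in observations if time == t and observed)
        leaving = sum(1 for time, _ in observations if time == t)
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # events and censored patients both leave the risk set
    return curve

# Invented follow-up data: (weeks since initiation, adverse event observed?)
OBSERVATIONS = [
    (2, True), (4, False), (6, True), (6, True),
    (9, False), (12, True), (16, False), (16, False),
]

curve = kaplan_meier(OBSERVATIONS)
for week, surv in curve:
    print(f"week {week}: P(event by now) = {1 - surv:.3f}")
```

One minus the survival probability at the end of each window gives the cumulative event probability that a monitoring schedule could be built around.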

Implementation Example: A predictive model for drug-induced liver injury anticipates that the highest-risk period occurs 4-12 weeks after treatment initiation for a specific medication. The health system implements:

Objective: Identify high-risk medication combinations likely to cause adverse events through pharmacokinetic or pharmacodynamic interactions, especially for novel combinations not yet documented in interaction databases.

Modeling Approach: Features: Drug pharmacological properties (metabolism pathways, enzyme inhibition/induction, protein binding, QT effects, CNS effects); Molecular structure similarities; Known interaction patterns for chemically or mechanistically similar drugs; Patient factors modifying interaction risk (age, organ function, genetic variants)

Algorithm: Graph neural networks representing drugs as nodes and interactions as edges; deep learning models identifying interaction patterns from molecular structures; knowledge graph embeddings incorporating pharmacological relationships

Output: Interaction risk score for drug pairs or multi-drug combinations; predicted interaction mechanism; severity classification; patient population modifier effects
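
The graph and deep learning models named above are beyond a short example, so the sketch below swaps in a plain rule-based screen over two of the listed features: shared metabolic enzymes and QT effects. It shows the shape of the input and output only. The drug names and profiles are hypothetical.

```python
from itertools import combinations

# Hypothetical pharmacological profiles for illustration.
PROFILES = {
    "drug_a": {"substrate_of": {"CYP3A4"}, "inhibits": set(), "qt_prolonging": True},
    "drug_b": {"substrate_of": set(), "inhibits": {"CYP3A4"}, "qt_prolonging": False},
    "drug_c": {"substrate_of": {"CYP2D6"}, "inhibits": set(), "qt_prolonging": True},
}

def screen_pairs(drugs, profiles):
    """Flags drug pairs with a plausible interaction mechanism."""
    flags = []
    for first, second in combinations(sorted(drugs), 2):
        p, q = profiles[first], profiles[second]
        # Pharmacokinetic: one drug inhibits an enzyme that metabolizes the other.
        for victim, perpetrator, v, w in ((first, second, p, q), (second, first, q, p)):
            shared = v["substrate_of"] & w["inhibits"]
            if shared:
                flags.append(
                    (victim, perpetrator, "pharmacokinetic: " + ", ".join(sorted(shared)))
                )
        # Pharmacodynamic: both drugs prolong the QT interval.
        if p["qt_prolonging"] and q["qt_prolonging"]:
            flags.append((first, second, "pharmacodynamic: additive QT prolongation"))
    return flags

flags = screen_pairs(PROFILES, PROFILES)
for flag in flags:
    print(flag)
```

A learned model would replace the hand-written rules with scores inferred from structure and known interactions. The value of the learned approach is in covering combinations no rule author anticipated.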

Performance Benchmarks:

Implementation Example: A patient with extensive polypharmacy (8 concurrent medications) is prescribed a new medication. The interaction prediction model analyzes all pairwise and three-way combinations:

Deployment Considerations:

Performance Monitoring:

These frameworks demonstrate that predictive pharmacovigilance has progressed beyond conceptual aspiration to operational reality, with defined methodologies, quantifiable performance characteristics, and evidence of real-world impact in early risk detection and patient protection.

Challenges and Opportunities: Navigating the Path to Predictive Maturity

While predictive pharmacovigilance offers transformative potential, realizing that potential requires addressing substantial technical, operational, and regulatory challenges. Understanding these obstacles alongside emerging opportunities enables organizations to develop realistic implementation roadmaps.

The Black Box Problem: Many high-performing machine learning algorithms (deep neural networks, gradient boosting ensembles, support vector machines) operate as “black boxes” that provide accurate predictions without readily interpretable decision logic. This opacity creates significant challenges in pharmacovigilance, where understanding why a prediction was made is nearly as important as the prediction itself.

Regulatory and Clinical Requirements for Transparency:

Approaches to Achieving Explainability:

SHAP (SHapley Additive exPlanations): Game theory-based method that assigns each input feature a contribution value showing how much it influenced a specific prediction. For a patient risk score, SHAP reveals which factors (age, comorbidities, concomitant medications) most strongly drove the risk assessment.
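
The underlying idea can be shown with an exact Shapley value computation on a toy model. This is a brute-force sketch that enumerates every feature subset, which is feasible only for a handful of features. The SHAP library uses faster approximations, and the risk function below is a hypothetical stand-in for a trained model.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley values: features outside a subset take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(subset):
        mixed = {k: (instance[k] if k in subset else baseline[k]) for k in names}
        return model(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {name}) - value(members))
        phi[name] = total
    return phi

def toy_risk(x):
    # Hypothetical risk model with an age x antiplatelet interaction term.
    return (0.02 + 0.05 * x["age_over_75"] + 0.04 * x["antiplatelet"]
            + 0.06 * x["age_over_75"] * x["antiplatelet"]
            + 0.03 * x["renal_impairment"])

PATIENT = {"age_over_75": 1, "antiplatelet": 1, "renal_impairment": 1}
BASELINE = {"age_over_75": 0, "antiplatelet": 0, "renal_impairment": 0}

phi = shapley_values(toy_risk, PATIENT, BASELINE)
print({k: round(v, 3) for k, v in phi.items()})
```

The contributions sum to the difference between the patient's predicted risk and the baseline prediction, and the interaction term is split evenly between the two features involved.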

LIME (Local Interpretable Model-Agnostic Explanations): Creates simplified, interpretable models that approximate complex model behavior in the local region around specific predictions, enabling case-by-case explanation even for black-box algorithms.

Attention Mechanisms: Neural network architectures that explicitly highlight which input features the model “focused on” when making predictions—visualizing which portions of clinical notes or which patient characteristics received highest attention weights.

Rule Extraction: Deriving human-readable decision rules from trained models—for example, “IF age > 75 AND creatinine clearance < 30 AND taking antiplatelet agent THEN high bleeding risk”—that approximate model logic.
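
The example rule translates directly into code, and its quality as an approximation can be measured by how often it agrees with the underlying model (often called fidelity). In this sketch the cases and the model labels are invented, and the function names are illustrative.

```python
def bleeding_risk_rule(age, creatinine_clearance_ml_min, on_antiplatelet):
    """The extracted rule from the text, expressed as a function."""
    if age > 75 and creatinine_clearance_ml_min < 30 and on_antiplatelet:
        return "high"
    return "not flagged"

def rule_fidelity(cases, model_labels):
    """Fraction of cases where the rule and the black-box model agree on 'high'."""
    agree = sum(
        (bleeding_risk_rule(**case) == "high") == (label == "high")
        for case, label in zip(cases, model_labels)
    )
    return agree / len(cases)

# Invented cases and invented black-box outputs for illustration.
CASES = [
    {"age": 80, "creatinine_clearance_ml_min": 25, "on_antiplatelet": True},
    {"age": 70, "creatinine_clearance_ml_min": 25, "on_antiplatelet": True},
    {"age": 82, "creatinine_clearance_ml_min": 60, "on_antiplatelet": False},
    {"age": 78, "creatinine_clearance_ml_min": 28, "on_antiplatelet": True},
]
MODEL_LABELS = ["high", "high", "low", "high"]

fidelity = rule_fidelity(CASES, MODEL_LABELS)
print(f"rule agrees with model on {fidelity:.0%} of cases")
```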

Counterfactual Explanations: Showing what would need to change about a patient or case for the prediction to differ, for example: “This patient was classified as high risk; if creatinine clearance improved to >50 mL/min, risk would decrease to the moderate category.”

Balancing Performance and Interpretability: Research consistently shows that more interpretable models (linear regression, decision trees) often underperform complex black-box algorithms by 5-15% in predictive accuracy. Organizations must navigate this trade-off based on use case criticality—tolerating some performance sacrifice for interpretability in patient-facing applications while accepting black-box models for internal prioritization where human review follows.

Heterogeneous Data Standards: Real-world data sources employ diverse terminologies, formats, and quality standards that complicate integration:

Terminology Mapping Complexity: EHRs use ICD codes, SNOMED CT, and LOINC; spontaneous reports employ MedDRA; claims data uses ICD and CPT codes; social media uses unstructured lay language. Harmonizing these requires sophisticated natural language processing and ontology mapping—with inevitable information loss and ambiguity.
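
At its simplest, harmonization is a crosswalk from source-specific terms to a shared concept, with unmapped terms surfaced rather than silently dropped. The sketch below assumes a tiny hand-built crosswalk whose entries and concept labels are hypothetical. Production systems rely on curated mappings and NLP rather than exact string matches.

```python
# Hypothetical crosswalk: (vocabulary, source term) -> harmonized concept label.
CROSSWALK = {
    ("meddra", "hepatotoxicity"): "liver_injury",
    ("icd", "toxic liver disease"): "liver_injury",
    ("lay", "my liver enzymes went crazy"): "liver_injury",
}

def harmonize(vocabulary, term):
    """Returns the shared concept, or None when no mapping exists."""
    return CROSSWALK.get((vocabulary.lower(), term.strip().lower()))

def harmonize_all(entries):
    """Splits entries into mapped concepts and terms needing manual review."""
    mapped, unmapped = [], []
    for vocabulary, term in entries:
        concept = harmonize(vocabulary, term)
        if concept is None:
            unmapped.append((vocabulary, term))
        else:
            mapped.append(concept)
    return mapped, unmapped

mapped, unmapped = harmonize_all([
    ("MedDRA", "Hepatotoxicity"),
    ("ICD", "Toxic liver disease"),
    ("lay", "felt dizzy all week"),
])
print(mapped, unmapped)
```

Keeping the unmapped list explicit makes the information loss mentioned above measurable instead of invisible.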

Temporal Granularity Variations: Wearable devices capture minute-by-minute physiological data; EHRs document clinical encounters; claims reflect monthly billing cycles; spontaneous reports provide imprecise dates. Aligning these temporal resolutions for integrated analysis introduces uncertainty.

Completeness and Missing Data: Real-world data suffers from systematic missingness—patients switch health systems (censoring longitudinal follow-up), wearables are removed during sleep or charging, social media users delete posts, laboratory tests are ordered selectively rather than systematically. Predictive models must handle missing data appropriately without introducing bias.

Data Quality Assurance:

Validation Pipelines: Implement automated data quality checks identifying outliers, logical inconsistencies, duplicate records, and implausible values before feeding data to predictive models.
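
A minimal sketch of such checks follows, covering duplicates, implausible values, and one logical inconsistency. Statistical outlier detection is omitted. The field names, plausibility limits, and records are assumptions made for illustration.

```python
from datetime import date

# Hypothetical plausibility limits.
PLAUSIBLE_RANGES = {"age_years": (0, 120), "weight_kg": (0.3, 400)}

def quality_issues(records):
    """Returns (record_index, issue) pairs for records failing basic checks."""
    issues = []
    seen = set()
    for index, r in enumerate(records):
        key = (r["patient_id"], r["drug"], r["event"], r["event_date"])
        if key in seen:
            issues.append((index, "duplicate record"))
        seen.add(key)
        for field, (low, high) in PLAUSIBLE_RANGES.items():
            value = r.get(field)
            if value is not None and not low <= value <= high:
                issues.append((index, f"implausible {field}: {value}"))
        # Logical inconsistency: an adverse event dated before drug exposure began.
        if r["event_date"] < r["drug_start_date"]:
            issues.append((index, "event precedes drug start"))
    return issues

RECORDS = [
    {"patient_id": "p1", "drug": "drug_x", "event": "rash", "age_years": 54,
     "drug_start_date": date(2024, 1, 10), "event_date": date(2024, 1, 20)},
    {"patient_id": "p1", "drug": "drug_x", "event": "rash", "age_years": 54,
     "drug_start_date": date(2024, 1, 10), "event_date": date(2024, 1, 20)},
    {"patient_id": "p2", "drug": "drug_x", "event": "nausea", "age_years": 140,
     "drug_start_date": date(2024, 3, 1), "event_date": date(2024, 2, 1)},
]

issues = quality_issues(RECORDS)
for index, issue in issues:
    print(index, issue)
```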

Source Reliability Weighting: Not all data sources deserve equal confidence—EHR-documented adverse events typically have a higher positive predictive value than social media mentions. Models should incorporate source reliability scores, adjusting for data quality variations.

Ground Truth Establishment: Validating predictive model accuracy requires gold-standard “ground truth” outcomes—but determining true adverse event occurrence from noisy real-world data is inherently uncertain. Organizations must establish expert adjudication protocols, creating validated outcome datasets for model training and testing.

Privacy, Consent, and Data Governance:

Regulatory Compliance: GDPR in Europe, HIPAA in the United States, and similar privacy regulations worldwide impose strict requirements on health data usage. Predictive pharmacovigilance must navigate: obtaining appropriate patient consent or establishing a legitimate interest basis for data processing, implementing robust de-identification to prevent patient re-identification, restricting data access to authorized personnel, and providing audit trails documenting data usage.

Ethical Considerations: Beyond legal compliance, ethical questions arise: Should patients be informed that their de-identified data contributes to safety surveillance algorithms? What transparency obligations exist regarding how individual risk predictions are calculated? How should incidental findings from predictive models be handled—if an algorithm predicts high adverse event risk for a patient, who should be notified and when?

Data Partnerships and Agreements: Accessing diverse data sources requires complex legal agreements with health systems, insurers, and technology companies—each with their own data governance requirements, business interests, and risk tolerance. Negotiating sustainable data partnerships represents substantial operational overhead.

Validation Complexity for Adaptive Models: Traditional pharmacovigilance tools undergo one-time validation with fixed algorithms. Predictive AI models that continuously learn from new data create validation challenges:

When Does a Model Change Enough to Require Revalidation? If a model is retrained quarterly, incorporating new data, is each version a new system requiring full validation? Industry consensus is emerging that substantial architectural changes require comprehensive revalidation, while parameter updates from retraining on similar data require abbreviated validation demonstrating continued performance.

Documenting Performance Across Populations: Regulatory authorities expect evidence that models perform equitably across demographic subgroups. Validation must specifically assess performance in elderly patients, pediatric populations, racial/ethnic minorities, and other potentially vulnerable groups—requiring large, diverse test datasets.
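
A subgroup assessment can start with something as simple as computing sensitivity and specificity separately per group. The subgroup labels and prediction outcomes below are invented, and the sketch shows only the bookkeeping, not a full validation protocol.

```python
from collections import defaultdict

def subgroup_metrics(rows):
    """rows: (subgroup, predicted_positive, actual_positive) triples."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, predicted, actual in rows:
        # "t"/"f": prediction correct or not; "p"/"n": predicted class.
        key = ("t" if predicted == actual else "f") + ("p" if predicted else "n")
        counts[group][key] += 1
    metrics = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        metrics[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
        }
    return metrics

# Invented validation outcomes for two age groups.
ROWS = (
    [("under_65", True, True)] * 3 + [("under_65", False, True)]
    + [("under_65", False, False)] * 4
    + [("over_75", True, True)] + [("over_75", False, True)] * 3
    + [("over_75", False, False)] * 3 + [("over_75", True, False)]
)

metrics = subgroup_metrics(ROWS)
for group, m in metrics.items():
    print(group, m)
```

In this invented example the model misses most true events in the older group. An aggregate metric would hide that disparity, which is why per-group reporting is expected.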

Regulatory Guidance Evolution: Health authority guidance on AI in pharmacovigilance remains nascent:

Current Landscape: FDA’s AI/ML guidance addresses medical devices and diagnostic algorithms but provides limited specific direction for pharmacovigilance applications. EMA’s reflections on AI emphasize transparency and human oversight without prescriptive requirements. Health Canada and other authorities have issued general AI principles but sparse details on safety surveillance.

Documentation Expectations: Despite limited specific guidance, regulatory inspectors increasingly request: model validation protocols and results, training data characterization, performance monitoring evidence, procedures for human review of predictions, change control for model updates, and contingency plans for model failures.

Establishing Evidence Standards: The industry must develop consensus on evidence standards for predictive pharmacovigilance:

What Performance Threshold Justifies Deployment? Should predictive models demonstrate superiority to existing methods, or is non-inferiority sufficient? What sensitivity/specificity balance is appropriate for different use cases?

How Much Real-World Validation Is Necessary? Before trusting a model for patient care decisions, how much prospective real-world performance evidence should be accumulated?

Comparative Effectiveness Requirements: Should organizations conduct head-to-head comparisons of competing predictive models to justify vendor selection, similar to clinical trial comparisons of competing therapies?

Despite these challenges, emerging technological and methodological advances create substantial opportunities:

Federated Learning for Privacy-Preserving Collaboration: Federated learning enables training predictive models on distributed datasets without centralizing sensitive patient data—algorithms travel to data rather than data traveling to algorithms. This approach could enable industry-wide collaborative model development while maintaining organizational data sovereignty and patient privacy.
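
The idea of algorithms traveling to the data can be illustrated with federated averaging on a toy logistic regression. This is a minimal sketch under stated assumptions: the function names, learning rate, and toy datasets are hypothetical, each "site" is just an in-memory list, and a real deployment would add secure aggregation, authentication, and privacy safeguards that are omitted here.

```python
import math


def local_update(weights, data, lr=0.1, epochs=5):
    """Run gradient descent for logistic regression on one site's data.

    data: list of (features, label). Only the updated weights and the
    sample count are returned; the raw records stay at the site.
    """
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x))
            p = 1.0 / (1.0 + math.exp(-z))
            for j, xj in enumerate(x):
                grad[j] += (p - y) * xj
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w, len(data)


def federated_average(updates):
    """Average site weights, weighting each site by its sample count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[j] * n for w, n in updates) / total for j in range(dim)]


def train(sites, dim, rounds=20):
    global_w = [0.0] * dim
    for _ in range(rounds):
        # Each site trains locally, starting from the current global model.
        updates = [local_update(global_w, data) for data in sites]
        global_w = federated_average(updates)
    return global_w


# Two hypothetical sites; features are [bias, exposure], label is the event.
site_a = [([1.0, 2.0], 1), ([1.0, -2.0], 0)]
site_b = [([1.0, 1.0], 1), ([1.0, -1.0], 0), ([1.0, 3.0], 1)]
print(train([site_a, site_b], dim=2))
```

Only model weights and sample counts cross the site boundary in this sketch. Whether shared weights alone are sufficient for patient privacy depends on the threat model, so this should be read as an illustration of the data flow rather than a privacy guarantee.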

Transfer Learning for Rare Events: Transfer learning techniques allow models trained on common adverse events to be adapted for rare events with limited data—leveraging learned representations from data-rich scenarios to improve performance in data-sparse situations.

Large Language Models for Narrative Analysis: Recent advances in large language models (GPT-4, Claude, domain-specific medical LLMs) dramatically improve the extraction of structured information from unstructured clinical narratives, adverse event descriptions, and literature—enabling more comprehensive feature engineering for predictive models.

Causal Inference Integration: Combining predictive machine learning with causal inference methods (propensity score matching, instrumental variables, Mendelian randomization) enables models to move beyond correlation toward causal understanding—addressing the fundamental question of whether drugs actually cause adverse events versus merely being correlated with them.
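
Of the methods named above, propensity score matching is the simplest to sketch. The example below assumes propensity scores have already been estimated by some upstream model and shows only the matching and comparison steps: greedy 1:1 nearest-neighbor matching without replacement, followed by a risk difference in the matched sample. The function names, the caliper of 0.05, and the toy data are all hypothetical.

```python
def match_on_propensity(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: lists of (propensity_score, outcome) with 0/1 outcomes.
    Treated patients with no control inside the caliper are left unmatched.
    """
    available = list(controls)
    pairs = []
    for ps_t, y_t in sorted(treated):
        if not available:
            break
        best = min(available, key=lambda c: abs(c[0] - ps_t))
        if abs(best[0] - ps_t) <= caliper:
            pairs.append(((ps_t, y_t), best))
            available.remove(best)
    return pairs


def matched_risk_difference(pairs):
    """Event risk in matched treated patients minus risk in matched controls."""
    if not pairs:
        return None
    n = len(pairs)
    risk_treated = sum(t[1] for t, _ in pairs) / n
    risk_control = sum(c[1] for _, c in pairs) / n
    return risk_treated - risk_control


treated = [(0.80, 1), (0.60, 0), (0.30, 1)]
controls = [(0.79, 0), (0.62, 0), (0.10, 0), (0.31, 1)]
pairs = match_on_propensity(treated, controls)
print(len(pairs), matched_risk_difference(pairs))
```

Matching balances only the covariates that went into the propensity model, so unmeasured confounding can still bias the estimate. That limitation is one reason the text lists instrumental variables and Mendelian randomization as complementary approaches.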

Quantum Computing Potential: Though nascent, quantum computing could eventually enable real-time analysis of molecular interactions at scale, dramatically improving drug-drug interaction and toxicity predictions based on first-principles molecular simulation rather than empirical pattern recognition.

Regulatory Harmonization Initiatives: International harmonization efforts (ICH, WHO) are beginning to address AI in pharmacovigilance, potentially establishing global standards that reduce regulatory fragmentation and validation burden.

By systematically addressing challenges while capitalizing on opportunities, the pharmaceutical industry can advance predictive pharmacovigilance from a promising concept to a standard practice—fundamentally transforming drug safety from reactive damage control to proactive patient protection.

Conclusion: Envisioning a Proactive AI-Driven Safety Ecosystem

The evolution of pharmacovigilance from reactive surveillance to predictive intelligence represents more than technological advancement—it embodies a fundamental philosophical shift toward proactive patient protection. The quantitative evidence demonstrates that this transformation is already underway: 8-12 week acceleration in signal detection, 76-84% accuracy in forecasting true versus spurious signals, 12-35x greater adverse event capture through real-world data integration, and 45-60% improvement in detection sensitivity through multi-source data fusion.

The Emerging Predictive Safety Ecosystem:

Imagine the pharmacovigilance landscape five years hence: A patient receives a new prescription, and within seconds, an AI system integrating their complete clinical profile, genomic data, and real-time physiological signals from wearable devices calculates personalized adverse event risk scores across dozens of potential reactions. High-risk predictions trigger automated alerts recommending alternative therapies, dose adjustments, or enhanced monitoring protocols—before the first tablet is dispensed.

Simultaneously, population-level surveillance algorithms continuously analyze millions of electronic health records, insurance claims, and social media health discussions in real time. When subtle patterns emerge suggesting a potential safety signal (perhaps a slight increase in cardiac arrhythmia diagnoses among patients initiating a specific medication), the system flags the association weeks before enough spontaneous reports accumulate to reach traditional statistical significance. Safety teams receive prioritized alerts only for associations the AI predicts have a high probability of representing true risks, focusing expert review where it matters most.

Pharmaceutical companies proactively detect emerging risks in early post-marketing experience, initiating targeted epidemiological studies and implementing risk mitigation measures before widespread patient harm occurs. Regulatory agencies access shared predictive analytics platforms providing real-time benefit-risk profiles across marketed products, enabling data-driven regulatory decisions rather than reactive responses to safety crises.

Realizing the Vision:

Achieving this proactive ecosystem requires coordinated action across multiple fronts:

Industry Investment in Data Infrastructure: Organizations must prioritize integration of diverse real-world data sources (EHRs, claims, wearables, patient-generated data) into unified analytics platforms. This infrastructure delivers a strategic competitive advantage and improved patient safety at the same time.

Methodological Advancement: Continued research on explainability, causal inference, rare-event handling, and equity across populations will mature predictive methods from promising prototypes into validated, trustworthy tools that meet the exacting standards pharmaceutical safety demands.

Regulatory Engagement: Proactive dialogue between industry and health authorities can establish clear validation requirements, performance benchmarks, and documentation standards that provide regulatory clarity while fostering innovation. Collaborative initiatives developing consensus guidelines will accelerate adoption by reducing uncertainty.

Workforce Transformation: Pharmacovigilance professionals must evolve from primarily case processors to AI-augmented safety scientists—validating model predictions, investigating flagged signals, and making strategic benefit-risk decisions informed by predictive analytics. Organizations should invest in training programs to develop these hybrid competencies.

Ethical Framework Development: As predictive models increasingly influence patient care, the industry must establish ethical frameworks addressing: transparency about algorithmic decision-making, equity in model performance across populations, privacy protection in data aggregation, and accountability when predictions prove inaccurate.

Collaborative Learning: Industry-wide sharing of anonymized validation data, best practices, and lessons learned would accelerate collective maturity in predictive pharmacovigilance—similar to how the pharmaceutical industry collaborates on manufacturing standards and quality systems.

The Ultimate Goal: Zero Preventable Harm:

Predictive pharmacovigilance aims toward an aspirational but worthy goal—a future where no patient experiences preventable drug-induced harm because risks were identified and mitigated before exposure occurred. While perfect prediction may remain elusive, each week of earlier signal detection, each high-risk patient identified before an adverse event, and each spurious signal correctly deprioritized to focus resources on genuine risks represents tangible progress toward that vision.

The pharmaceutical industry stands at an inflection point where the convergence of vast health data, mature AI algorithms, increasing regulatory acceptance, and demonstrated performance benefits creates an unprecedented opportunity to transform drug safety. Organizations that embrace predictive approaches strategically, with appropriate investment, realistic timelines, rigorous validation, and a commitment to transparency, will not only gain competitive advantage but also fulfill the fundamental mission of pharmacovigilance: protecting patients from medication-related harm.

The transition from reactive to predictive pharmacovigilance is not a question of if but when—and for organizations committed to patient safety, the answer should be now.