The integration of artificial intelligence into pharmacovigilance workflows has transformed how organizations detect, assess, and manage adverse drug reactions. However, the deployment of AI models in drug safety systems comes with significant responsibility. Unlike traditional software, AI models learn from data and can produce variable outputs that directly impact patient safety decisions. This makes robust validation and continuous monitoring not just technical necessities, but regulatory and ethical imperatives.

Model validation ensures that AI systems perform accurately, reliably, and consistently before deployment. Monitoring extends this assurance throughout the model’s operational lifecycle, detecting performance degradation, data drift, and emerging biases. Together, these practices form the foundation of trustworthy AI in pharmacovigilance, enabling organizations to harness automation while maintaining the highest standards of drug safety surveillance.

Pharmacovigilance AI systems process sensitive safety data to identify adverse events, classify case seriousness, detect signals, and support regulatory reporting. Errors or inconsistencies in these systems can lead to missed safety signals, incorrect case classifications, delayed regulatory submissions, or false alerts that overwhelm safety teams. The stakes are particularly high because these systems directly influence decisions that affect patient health and regulatory compliance.

Validation serves multiple critical functions in this context. It provides evidence that the AI model performs as intended across diverse scenarios, including edge cases and rare adverse events. It establishes baseline performance metrics that enable ongoing quality assurance and regulatory scrutiny. Validation also builds organizational confidence in AI outputs, facilitating appropriate human-AI collaboration rather than blind automation or complete skepticism.

From a regulatory perspective, validation creates the documentation trail required by health authorities. The FDA, EMA, and other regulatory bodies increasingly expect evidence-based assurance that AI systems meet quality standards comparable to traditional pharmacovigilance processes. Without thorough validation, organizations risk non-compliance, regulatory findings during inspections, and potential liability if AI-driven decisions contribute to patient harm.

Pro Tip: Treat validation as an investment in trust. Comprehensive validation documentation not only satisfies regulators but also empowers your safety teams to use AI confidently and appropriately.

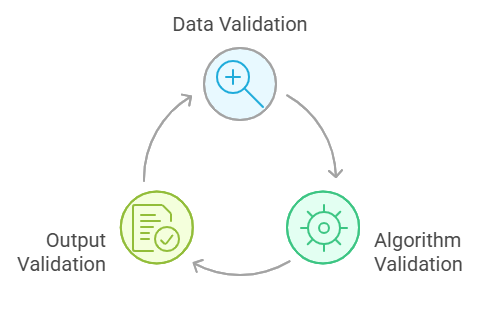

Effective validation encompasses multiple stages that examine different aspects of an AI system’s performance. Each stage addresses specific risks and quality dimensions, from data quality to algorithmic behavior to output usability. Organizations should approach validation systematically, documenting methodologies, results, and decisions at each stage to create a complete quality assurance record.

Data validation forms the foundation of AI system quality because model performance cannot exceed the quality of training and operational data. This stage examines whether the data used to develop and deploy the AI system accurately represents the target pharmacovigilance domain, contains sufficient diversity to handle varied scenarios, and maintains appropriate quality standards.

Training data validation begins with assessing representativeness. Do the historical cases used for training reflect the full spectrum of adverse events, product types, reporter categories, and data quality levels the system will encounter in production? Imbalanced datasets that overrepresent common scenarios while underrepresenting rare but serious events can produce models that perform poorly on critical edge cases.
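One way to make the representativeness question concrete is to compare category shares in the training set against the shares expected in production. The sketch below is illustrative only: the category names, the expected shares, and the `min_ratio` cutoff are assumptions, not values from any guideline.

```python
from collections import Counter

def underrepresented(train_labels, expected_share, min_ratio=0.5):
    """Flag categories whose training share falls below
    min_ratio times the share expected in production."""
    counts = Counter(train_labels)
    total = len(train_labels)
    flagged = {}
    for category, expected in expected_share.items():
        observed = counts.get(category, 0) / total
        if observed < min_ratio * expected:
            flagged[category] = (observed, expected)
    return flagged

# Hypothetical training labels and expected production mix.
train_labels = ["non_serious"] * 90 + ["serious"] * 9 + ["fatal"] * 1
expected_share = {"non_serious": 0.80, "serious": 0.15, "fatal": 0.05}

flagged = underrepresented(train_labels, expected_share)
for category, (observed, expected) in flagged.items():
    print(f"{category}: {observed:.2%} in training vs {expected:.2%} expected")
```

Here only the rare `fatal` category is flagged, which is exactly the kind of gap that overall dataset size can hide.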

Data quality assessment examines completeness, consistency, accuracy, and timeliness of source data. Missing critical fields, inconsistent terminology, data entry errors, and outdated information all degrade model performance. Organizations must also validate that data annotation for supervised learning reflects true expert consensus rather than individual annotator bias or inconsistent guidelines.
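Two of these checks are easy to quantify: field completeness and agreement between annotators. The following is a minimal sketch, assuming cases are plain dictionaries and that two annotators labeled the same cases; the field names and labels are hypothetical. Agreement is measured with Cohen's kappa, which corrects raw agreement for agreement expected by chance.

```python
from collections import Counter

REQUIRED_FIELDS = ("suspect_drug", "event_term", "onset_date")  # hypothetical names

def completeness(cases, fields=REQUIRED_FIELDS):
    """Fraction of cases with a non-empty value for each required field."""
    return {f: sum(1 for c in cases if c.get(f)) / len(cases) for f in fields}

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same cases."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("annotators must label the same non-empty set of cases")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:  # both annotators used a single identical label
        return 1.0
    return (observed - expected) / (1 - expected)

cases = [
    {"suspect_drug": "drug_a", "event_term": "nausea", "onset_date": "2024-01-05"},
    {"suspect_drug": "drug_b", "event_term": "rash", "onset_date": ""},
    {"suspect_drug": "drug_a", "event_term": "headache"},
    {"suspect_drug": "drug_c", "event_term": "dizziness", "onset_date": "2024-02-11"},
]
rates = completeness(cases)

annotator_1 = ["serious", "serious", "non_serious", "non_serious",
               "serious", "non_serious", "non_serious", "non_serious"]
annotator_2 = ["serious", "non_serious", "non_serious", "non_serious",
               "serious", "non_serious", "serious", "non_serious"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(rates, round(kappa, 3))
```

A low kappa on a sample of double-annotated cases suggests the labeling guidelines need tightening before the labels are used for training.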

Privacy and security validation ensures that data handling complies with data protection regulations such as GDPR and HIPAA, and with applicable ICH guidelines. This includes verifying de-identification procedures, access controls, data retention policies, and cross-border transfer safeguards. In pharmacovigilance, protecting patient privacy while maintaining data utility for safety surveillance requires careful validation of anonymization techniques.

Algorithm validation examines the AI model’s internal behavior, decision logic, and performance characteristics. This stage moves beyond simple accuracy metrics to understand how the model arrives at conclusions, where it performs well or poorly, and whether its behavior aligns with pharmacovigilance principles and domain knowledge.

Performance validation quantifies the model’s accuracy, precision, recall, specificity, and other relevant metrics across multiple test datasets. These datasets should include held-out test sets from the training data distribution, temporal validation sets from different time periods, and external validation sets from different sources or populations. Consistent performance across these datasets indicates robust generalization rather than overfitting to training data patterns.
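As a rough illustration, the core metrics can be computed from binary labels and compared across test sets. The dataset names and label vectors below are made up for the example; a real validation would use far larger samples.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and specificity for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else None  # None when the metric is undefined

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }

# Hypothetical (true labels, predictions) for two validation sets.
datasets = {
    "held_out": ([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1]),
    "temporal": ([1, 1, 1, 1, 0, 0, 0, 0], [1, 0, 0, 1, 0, 0, 0, 1]),
}
results = {name: binary_metrics(t, p) for name, (t, p) in datasets.items()}

recalls = [m["recall"] for m in results.values()]
recall_gap = max(recalls) - min(recalls)
print(results, recall_gap)
```

A large gap between the held-out and temporal sets, as in this toy data, is the pattern that points toward overfitting or drift rather than robust generalization.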

For classification tasks common in pharmacovigilance, such as adverse event detection, seriousness assessment, or causality evaluation, confusion matrices show which types of errors the model makes and how often. Understanding whether false negatives cluster around particular event types or false positives concentrate in specific product categories enables targeted improvement and appropriate human oversight strategies.
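To see whether false negatives cluster, the miss rate can be broken down by a grouping variable. This sketch assumes each record carries a group label (here a hypothetical event category), the true label, and the predicted label.

```python
from collections import defaultdict

def false_negative_rate_by_group(records):
    """records: iterable of (group, actual, predicted) with 0/1 labels.
    Returns the share of true positives the model missed, per group."""
    positives = defaultdict(int)
    misses = defaultdict(int)
    for group, actual, predicted in records:
        if actual == 1:
            positives[group] += 1
            if predicted == 0:
                misses[group] += 1
    return {g: misses[g] / positives[g] for g in positives}

# Hypothetical labeled predictions.
records = [
    ("hepatic", 1, 0), ("hepatic", 1, 0), ("hepatic", 1, 1), ("hepatic", 1, 1),
    ("cardiac", 1, 1), ("cardiac", 1, 1), ("cardiac", 1, 1), ("cardiac", 1, 0),
    ("cardiac", 0, 0), ("hepatic", 0, 1),
]
fn_rates = false_negative_rate_by_group(records)
print(fn_rates)
```

Groups with a markedly higher miss rate are natural candidates for mandatory human review until the model improves.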

Explainability validation assesses whether the model’s reasoning can be understood and verified by pharmacovigilance experts. For complex models like deep learning systems, this may involve validating attention mechanisms, feature importance rankings, or counterfactual explanations. The goal is to ensure that model decisions align with scientific and medical reasoning rather than spurious correlations in training data.

Bias and fairness validation examines whether the model performs equitably across patient demographics, reporter types, product categories, and other relevant subgroups. Systematic performance differences may indicate training data biases, algorithmic biases, or genuine underlying population differences that require appropriate handling in safety surveillance workflows.
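Subgroup comparisons are easy to over-interpret when some subgroups are small. One hedge is to report a confidence interval alongside each subgroup's recall. The sketch below uses the Wilson score interval, a standard choice for proportions; the subgroup names and counts are invented for illustration.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if n == 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

# Hypothetical counts: (serious cases detected, total serious cases).
subgroups = {"adult": (180, 200), "pediatric": (8, 10)}
intervals = {name: wilson_interval(k, n) for name, (k, n) in subgroups.items()}

for name, (low, high) in intervals.items():
    k, n = subgroups[name]
    print(f"{name}: recall {k / n:.2f}, interval [{low:.3f}, {high:.3f}]")
```

The pediatric interval is very wide, so the lower point estimate there is weak evidence of a real disparity; the more defensible conclusion is that more pediatric validation cases are needed.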

Output validation evaluates the usability, interpretability, and integration of AI system outputs within pharmacovigilance workflows. Even technically accurate models can fail in practice if their outputs are poorly formatted, difficult to interpret, or incompatible with existing systems and processes.

Format and integration validation ensures that AI outputs interface correctly with downstream systems, including case management databases, signal detection platforms, and regulatory submission tools. This includes validating data formats, API integrations, error handling, and system interoperability under various operational conditions.

Clinical and regulatory validation involves pharmacovigilance experts reviewing AI outputs to confirm clinical plausibility, regulatory relevance, and alignment with safety assessment principles. This stage often reveals subtle issues that quantitative metrics miss, such as inappropriate context interpretation, clinically implausible associations, or outputs that technically match labels but misalign with nuanced safety assessment requirements.

User acceptance validation examines whether safety professionals can effectively use and trust the AI system in their daily workflows. This involves usability testing, workflow integration assessment, and validation that the system enhances rather than hinders human decision-making. As discussed in "AI in Pharmacovigilance: Knowing When to Say No," not all technically valid AI systems should be deployed if they create workflow problems or reduce overall safety surveillance quality.

Validation establishes initial quality assurance, but AI model performance can degrade over time due to data drift, concept drift, and changing operational conditions. Continuous monitoring creates an ongoing quality assurance process that detects performance changes, triggers interventions, and maintains long-term system reliability.

Data drift occurs when the statistical properties of input data change over time. In pharmacovigilance, this might manifest as changes in reporting patterns, new adverse event terminologies, evolving product portfolios, or shifts in reporter demographics. Even if the underlying relationship between inputs and outputs remains stable, data drift can degrade model performance if the model encounters input distributions significantly different from the training data.
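One commonly used drift statistic for a categorical input is the Population Stability Index (PSI), which sums `(current - baseline) * ln(current / baseline)` over categories. The sketch below is an illustration, not a prescribed method: the reporter types and counts are hypothetical, and the small `eps` floor is an assumption added to avoid taking the log of zero for unseen categories.

```python
import math
from collections import Counter

def psi(baseline, current, eps=1e-6):
    """Population Stability Index between two samples of a categorical feature."""
    base_counts, cur_counts = Counter(baseline), Counter(current)
    total = 0.0
    for category in set(base_counts) | set(cur_counts):
        p_base = max(base_counts[category] / len(baseline), eps)
        p_cur = max(cur_counts[category] / len(current), eps)
        total += (p_cur - p_base) * math.log(p_cur / p_base)
    return total

# Hypothetical reporter-type mix at training time vs. a recent period.
baseline = ["physician"] * 60 + ["consumer"] * 30 + ["pharmacist"] * 10
current = ["physician"] * 40 + ["consumer"] * 50 + ["pharmacist"] * 10

drift_score = psi(baseline, current)
print(round(drift_score, 4))
```

A frequently cited rule of thumb treats values above roughly 0.1 as worth investigating and above roughly 0.25 as a substantial shift, but these cutoffs are conventions rather than regulatory requirements and should be calibrated per feature.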

Concept drift represents changes in the actual relationship between inputs and outputs. In drug safety, concept drift might occur when new adverse events emerge for established products, when regulatory definitions change, or when medical understanding of causal relationships evolves. Concept drift is particularly challenging because it represents genuine changes in the target phenomenon rather than just data distribution shifts.

Effective monitoring systems track multiple performance indicators continuously. Primary metrics include the same accuracy, precision, and recall measures used during validation, calculated on ongoing operational data with verified ground truth. Secondary indicators include data distribution metrics that can signal drift before performance degradation becomes evident, such as feature distribution changes, prediction confidence distributions, and input data quality metrics.

Establishing monitoring thresholds and alert mechanisms requires balancing sensitivity and specificity. Overly sensitive thresholds generate excessive false alarms that erode attention and trust. Insufficiently sensitive thresholds allow significant performance degradation before detection. Organizations should define threshold ranges based on the criticality of specific AI functions, validation performance variability, and available resources for investigating alerts.
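A simple way to tie thresholds to validation variability is to set the alert line a few standard deviations below the mean validation score, and to require several consecutive breaches before alerting. The sketch below assumes per-fold validation recall and weekly production recall are available; the numbers, `k`, and the `consecutive` setting are illustrative choices.

```python
from statistics import mean, stdev

def alert_threshold(validation_scores, k=2.0):
    """Lower control limit: mean minus k sample standard deviations."""
    return mean(validation_scores) - k * stdev(validation_scores)

def sustained_breaches(scores, threshold, consecutive=2):
    """Indices at which the score has been below the threshold
    for at least `consecutive` periods in a row."""
    run = 0
    alerts = []
    for i, score in enumerate(scores):
        run = run + 1 if score < threshold else 0
        if run >= consecutive:
            alerts.append(i)
    return alerts

validation_recall = [0.90, 0.92, 0.91, 0.89, 0.93]    # hypothetical fold results
weekly_recall = [0.91, 0.87, 0.90, 0.86, 0.85, 0.84]  # hypothetical production data

threshold = alert_threshold(validation_recall, k=2.0)
alerts = sustained_breaches(weekly_recall, threshold, consecutive=2)
print(round(threshold, 4), alerts)
```

Raising `k` or `consecutive` reduces false alarms at the cost of slower detection, which is the sensitivity and specificity trade-off in miniature. For high-criticality functions, a lower `consecutive` value may be the safer choice.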

When monitoring detects significant drift or performance degradation, organizations must have defined response protocols. These may include increasing human review rates for AI outputs, restricting AI system use to lower-risk functions, initiating model retraining or recalibration, or temporarily suspending AI functionality while investigating root causes. The response should be proportionate to the detected issue’s severity and potential patient safety impact.
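A response protocol of this kind can be written down as an explicit decision rule so that it is applied consistently and can be audited. The following sketch is purely illustrative: the cutoffs on recall drop and the two-level criticality flag are assumptions that each organization would replace with its own risk-based values.

```python
from enum import Enum

class Response(Enum):
    CONTINUE = "continue routine monitoring"
    INCREASE_REVIEW = "increase human review rate"
    RESTRICT = "restrict AI use to lower-risk functions"
    SUSPEND = "suspend AI functionality and investigate root cause"

def choose_response(recall_drop, high_criticality):
    """Map the drop in recall (relative to the validation baseline) and the
    criticality of the function to a proportionate response.
    Cutoffs are hypothetical placeholders."""
    if recall_drop < 0.02:
        return Response.CONTINUE
    if recall_drop < 0.05:
        return Response.RESTRICT if high_criticality else Response.INCREASE_REVIEW
    if recall_drop < 0.10:
        return Response.SUSPEND if high_criticality else Response.RESTRICT
    return Response.SUSPEND

print(choose_response(0.03, high_criticality=False).value)
print(choose_response(0.03, high_criticality=True).value)
```

Retraining or recalibration would typically follow any response above `CONTINUE`; it is left out of the rule because it is a remediation step rather than an immediate containment action.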

Insight: Implement layered monitoring that tracks leading indicators (data distribution changes) and lagging indicators (actual performance metrics). Leading indicators enable proactive intervention before patient safety impacts occur.

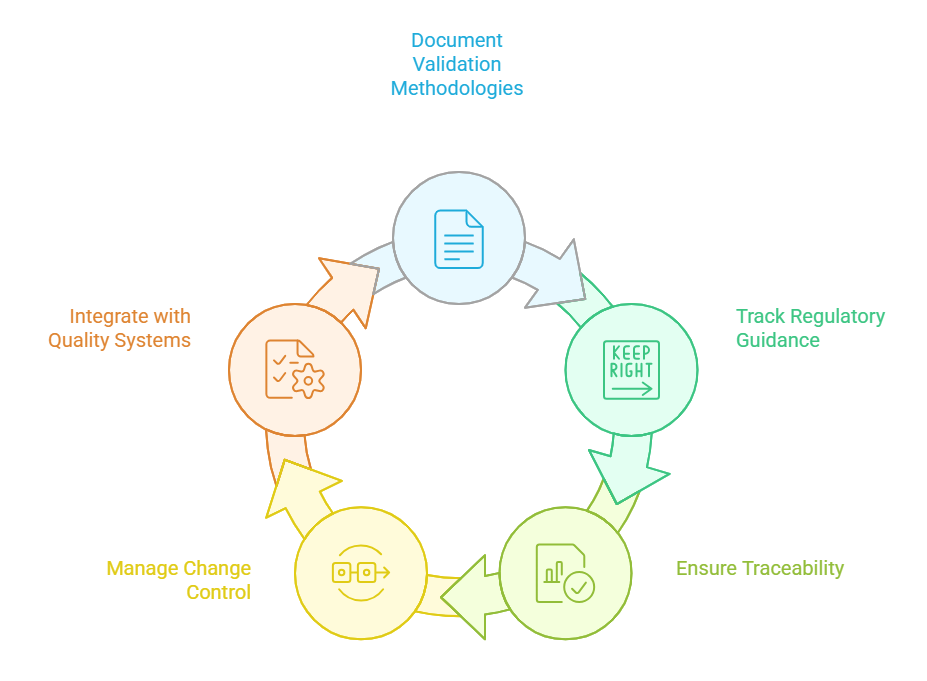

Regulatory expectations for AI in pharmacovigilance continue to evolve, but core principles remain consistent: organizations must demonstrate that AI systems are fit for purpose, properly validated, continuously monitored, and appropriately integrated into quality management systems. Audit readiness requires comprehensive documentation, traceability, and governance structures that withstand regulatory scrutiny.

Documentation standards for AI validation should address what was validated, how validation was performed, what results were obtained, and what decisions were made based on validation findings. This includes detailed descriptions of validation datasets, performance metrics, acceptance criteria, identified limitations, and mitigation strategies for known issues. Documentation must enable an external auditor to understand and verify the validation process without requiring access to proprietary algorithms or training data.

Regulatory frameworks increasingly address AI and automated systems in pharmacovigilance. ICH E2B(R3) defines the data elements and message format for electronic transmission of individual case safety reports, which sets expectations for the structure and quality of case data that AI systems process. The FDA’s guidance on AI and machine learning in medical devices provides relevant principles for validation and monitoring. The European Medicines Agency’s reflection paper on the use of AI in the medicinal product lifecycle indicates growing expectations for transparency and validation evidence. Organizations should track evolving guidance and ensure validation approaches align with current regulatory thinking, as detailed in "Choosing AI Solutions in Pharmacovigilance."

Traceability requirements extend throughout the AI system lifecycle. Organizations must maintain version control for training data, model architectures, training procedures, validation results, deployment configurations, and monitoring outputs. When regulators question a specific AI-generated output during an inspection, the organization should be able to trace back to the exact model version, training data version, and validation results applicable at that time.
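In practice this means being able to answer "which model produced this output?" from a date alone. A minimal sketch of such a lookup follows, assuming a deployment history sorted by date; the record fields, version strings, and report identifiers are hypothetical.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Deployment:
    deployed_on: date
    model_version: str
    training_data_version: str
    validation_report: str

def active_deployment(history, when):
    """Return the deployment in force on `when`, or None if none existed yet.
    `history` must be sorted ascending by deployed_on."""
    dates = [d.deployed_on for d in history]
    idx = bisect_right(dates, when) - 1
    return history[idx] if idx >= 0 else None

# Hypothetical deployment history.
history = [
    Deployment(date(2024, 1, 10), "model-1.0", "data-2023Q4", "VAL-001"),
    Deployment(date(2024, 6, 1), "model-1.1", "data-2024Q1", "VAL-002"),
]

queried = active_deployment(history, date(2024, 3, 15))
print(queried)
```

Keeping the records immutable (`frozen=True`) mirrors the audit requirement that historical entries are never edited, only appended.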

Change control and configuration management apply to AI systems just as they do to traditional GxP systems. Organizations must define which changes require revalidation (such as model architecture modifications, training data updates, or parameter adjustments) and which can proceed with lighter verification (such as infrastructure updates that do not affect model behavior). The change control process should include impact assessment, appropriate validation or verification activities, documentation updates, and formal approval before implementation.
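The revalidation rule can be encoded so that every change request is classified the same way. This is a sketch under assumptions: the change-type names are invented labels for the categories mentioned above, and treating unknown change types as requiring revalidation is a conservative design choice, not a stated requirement.

```python
# Hypothetical change-type labels for the categories described in the text.
REVALIDATION_CHANGES = {"model_architecture", "training_data", "parameters"}
VERIFICATION_CHANGES = {"infrastructure"}

def required_activity(change_type):
    """Return the quality activity a change triggers.
    Anything not explicitly cleared for lighter verification
    defaults to full revalidation."""
    if change_type in VERIFICATION_CHANGES:
        return "verification"
    return "revalidation"

for change in ("training_data", "infrastructure", "tokenizer_update"):
    print(change, "->", required_activity(change))
```

Defaulting unknown changes to revalidation means a new kind of change has to be assessed and explicitly added to the lighter path before it can bypass full validation.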

Quality management integration ensures that AI validation and monitoring fit within broader pharmacovigilance quality systems. This includes connecting AI system quality to standard operating procedures, training programs, deviation management, corrective and preventive action (CAPA) systems, and periodic quality reviews. AI systems should be subject to the same quality culture and oversight expectations as other critical pharmacovigilance functions.

Organizations implementing AI in pharmacovigilance frequently encounter predictable challenges that can be avoided with proper planning and risk awareness. Learning from common pitfalls enables more successful AI validation and monitoring programs.

Insufficient validation scope represents a frequent shortcoming where organizations validate only on best-case scenarios or limit validation to single performance metrics. Comprehensive validation must examine edge cases, rare events, poor-quality inputs, and system behavior under stress conditions. Single metrics like overall accuracy can mask serious weaknesses in specific subpopulations or event types critical to patient safety.

Disconnected validation and operations occur when validation uses different data, configurations, or workflows than the actual deployment. Validation must test the complete system as it will operate in production, including data preprocessing, integration points, user interfaces, and error handling. Laboratory validation using cleaned datasets and simplified workflows may not predict real-world performance under operational conditions.

Static validation without ongoing monitoring creates false confidence that initial validation ensures perpetual quality. As discussed earlier, drift and changing conditions require continuous monitoring. Organizations should establish monitoring as a core operational function rather than an optional enhancement.

Inadequate human oversight emerges when organizations treat validation as a purely technical exercise without sufficient pharmacovigilance expert involvement. Domain experts must define validation requirements, interpret results, assess clinical plausibility, and make risk-based decisions about AI system deployment and constraints. Technical validation teams alone cannot assess whether AI behavior aligns with pharmacovigilance principles and patient safety imperatives.

Poor documentation and traceability manifest when organizations conduct validation activities but fail to document them comprehensively or maintain traceability across the AI lifecycle. Retrospectively reconstructing validation evidence for regulatory inspections is extremely difficult and undermines audit readiness.

Best practices for validation and monitoring start with cross-functional validation teams that combine AI technical expertise, pharmacovigilance domain knowledge, quality assurance experience, and regulatory affairs insight. Define clear validation protocols before validation begins, including specific test scenarios, acceptance criteria, and decision rules for different findings.

Implement validation as an iterative process where initial findings inform model improvements, refined validation approaches, or adjusted deployment strategies. Plan for revalidation triggered by model changes, significant drift detection, regulatory requirement updates, or periodic scheduled intervals.

Create validation and monitoring reports that communicate clearly to non-technical stakeholders, including senior management, quality oversight functions, and regulatory inspectors. Reports should explain what was tested, what was found, what it means for patient safety and regulatory compliance, and what actions are recommended or required.

Model validation and continuous monitoring form the cornerstone of responsible AI deployment in pharmacovigilance. These practices transform AI from a potentially risky black box into a transparent, accountable tool that enhances drug safety surveillance while maintaining regulatory compliance and patient protection as paramount priorities.

Effective validation examines AI systems comprehensively across data quality, algorithmic behavior, and operational integration. It provides evidence that AI performs accurately and reliably while identifying limitations that require mitigation through human oversight or operational constraints. Continuous monitoring extends this assurance throughout the AI lifecycle, detecting performance degradation and triggering appropriate interventions before patient safety impacts occur.

Organizations that invest in robust validation and monitoring capabilities position themselves to harness AI’s potential in pharmacovigilance while managing its risks appropriately. These practices build internal confidence, satisfy regulatory expectations, and ultimately support the fundamental mission of pharmacovigilance: protecting patients through vigilant safety surveillance. As AI capabilities and regulatory frameworks continue evolving, validation and monitoring excellence will increasingly differentiate organizations that successfully integrate AI from those that struggle with implementation challenges or regulatory setbacks.