As artificial intelligence systems increasingly automate critical pharmacovigilance functions—from adverse event classification to signal detection and causality assessment—a fundamental question emerges: can safety professionals trust decisions made by algorithms they cannot understand? Black-box AI models, particularly deep learning architectures, deliver impressive predictive accuracy but offer little insight into their reasoning processes. This opacity creates significant barriers to adoption in an industry where accountability, regulatory compliance, and patient safety are paramount.

Explainable AI (XAI) addresses this challenge by making AI decision-making processes transparent and interpretable. Rather than accepting algorithmic outputs on faith, XAI techniques reveal which data features influenced predictions, how different variables interacted, and why specific classifications were made. For pharmacovigilance teams navigating regulatory scrutiny, managing liability concerns, and maintaining scientific rigor, XAI represents the bridge between powerful automation and practical deployment.

The integration of XAI into pharmacovigilance workflows is not merely a technical enhancement—it is becoming a regulatory expectation and an operational necessity for organizations seeking to responsibly leverage AI capabilities.

Pharmacovigilance operates under stringent regulatory oversight where every safety decision must be defensible, auditable, and scientifically sound. When an AI model flags a potential safety signal or classifies an adverse event as serious, safety officers need to understand the basis for that determination. Regulatory submissions, signal evaluation reports, and causality assessments require explicit reasoning that can withstand scientific and legal scrutiny.

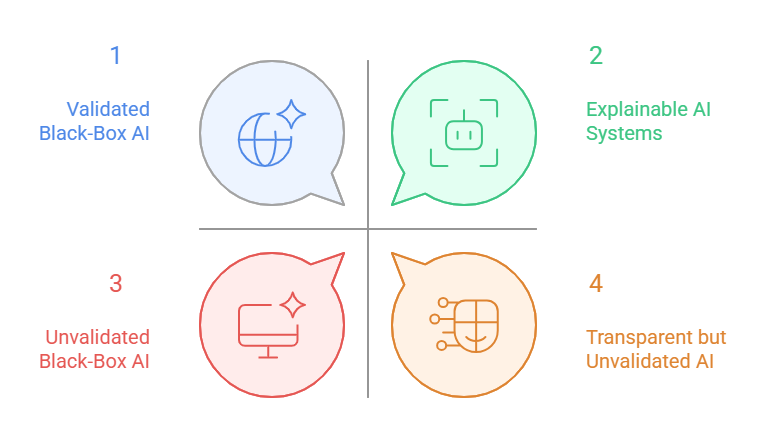

Traditional black-box models present multiple problems in this context. A deep neural network might achieve 95% accuracy in predicting adverse event severity, but if it cannot explain why a specific case was classified as life-threatening, that prediction has limited practical value. Safety reviewers cannot validate the assessment, regulators cannot audit the decision logic, and healthcare providers cannot integrate the finding into clinical practice with confidence.

The stakes extend beyond operational efficiency. Incorrect AI predictions in pharmacovigilance can delay critical safety actions, trigger unnecessary market withdrawals, or fail to identify genuine patient risks. Without explainability, identifying when and why models fail becomes nearly impossible. As explored in “AI in Pharmacovigilance: Knowing When to Say No,” recognizing the limitations and failure modes of AI systems is essential for responsible implementation.

Trust building represents another critical dimension. Pharmacovigilance professionals bring decades of expertise in pharmacology, epidemiology, and clinical medicine. For these experts to accept AI as a collaborative tool rather than viewing it as a threat or liability, they must be able to interrogate model reasoning, verify it against their domain knowledge, and override it when appropriate. XAI facilitates this human-AI partnership by making algorithmic reasoning accessible to domain experts.

Regulatory agencies worldwide are codifying these expectations. The FDA, EMA, and ICH are developing frameworks that increasingly require transparency and interpretability for AI systems used in drug safety applications. As detailed in “Latest EMA, FDA and ICH Guidelines Regarding Use of AI in Pharmacovigilance,” explainability is transitioning from a desirable feature to a regulatory requirement.

Pro Tip: When evaluating AI vendors or building internal systems, treat explainability as a first-class requirement alongside accuracy. A slightly less accurate model that provides clear reasoning is often more valuable in pharmacovigilance than a marginally better black-box alternative.

Multiple technical approaches have emerged to make AI models interpretable, each with distinct strengths and applicability to pharmacovigilance use cases. Understanding these techniques enables informed selection and implementation based on specific operational requirements.

SHAP (SHapley Additive exPlanations) represents one of the most theoretically grounded and widely adopted XAI techniques. Derived from cooperative game theory, SHAP values quantify each input feature’s contribution to a specific prediction by calculating how much that feature changes the expected model output.

In pharmacovigilance applications, SHAP can reveal which patient characteristics, medication details, or clinical factors most strongly influenced an adverse event classification. SHAP values are additive: they sum to the difference between the model's output for a case and its average (baseline) output, so each feature's share of that difference can be reported. For example, when an AI model classifies a case report as indicating hepatotoxicity, SHAP analysis might show that elevated liver enzymes accounted for about 40% of the total attribution, concurrent medication use for about 25%, and patient age for about 15%, with remaining factors accounting for the balance.
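
To make the attribution idea concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature coalition. The scoring function, feature names, and weights are hypothetical stand-ins for a trained model, and treating "absent" features as taking a baseline value is one common convention rather than the only one.

```python
from itertools import combinations
from math import factorial

# Hypothetical case features (illustrative values, not real patient data).
CASE = {"alt_elevated": 1.0, "concomitant_drug": 1.0, "age_over_65": 1.0}
# Baseline values used when a feature is treated as absent from a coalition.
BASELINE = {"alt_elevated": 0.0, "concomitant_drug": 0.0, "age_over_65": 0.0}


def toy_model(x):
    """Hypothetical hepatotoxicity score with one interaction term."""
    score = 0.10
    score += 0.40 * x["alt_elevated"]
    score += 0.20 * x["concomitant_drug"]
    score += 0.10 * x["age_over_65"]
    score += 0.10 * x["alt_elevated"] * x["concomitant_drug"]
    return score


def shapley_values(model, case, baseline):
    """Exact Shapley values by enumerating every coalition of features."""
    names = list(case)
    n = len(names)

    def value(coalition):
        # Features in the coalition take the case value, the rest the baseline.
        x = {f: (case[f] if f in coalition else baseline[f]) for f in names}
        return model(x)

    phi = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                members = set(subset)
                total += weight * (value(members | {f}) - value(members))
        phi[f] = total
    return phi


phi = shapley_values(toy_model, CASE, BASELINE)
base = toy_model(BASELINE)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.2f}")
print(f"baseline {base:.2f} + attributions = {base + sum(phi.values()):.2f}")
```

For this toy model the attributions are 0.45, 0.25, and 0.10, and together with the 0.10 baseline they add up to the model's score of 0.90. The interaction term is split evenly between the two features that take part in it. Full enumeration is only feasible for a handful of features, which is why practical tools rely on faster algorithms.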

The technique works with any machine learning model, from simple logistic regression to complex ensemble methods and neural networks. This model-agnostic property is particularly valuable in pharmacovigilance, where organizations may deploy diverse AI architectures across different safety monitoring tasks.

SHAP provides both local explanations—why a specific prediction was made for an individual case—and global explanations that summarize feature importance across entire datasets. This dual capability supports both case-level review and systematic model validation. Safety teams can examine individual adverse event assessments for clinical plausibility while also evaluating whether the model’s overall decision patterns align with pharmacological principles.
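
The local versus global distinction can be sketched with a linear model, where Shapley values have a simple closed form if features are assumed independent: each contribution is the weight times the feature's deviation from its dataset mean. The weights, feature names, and cases below are hypothetical, and averaging absolute contributions is one common way to summarize global importance, not the only one.

```python
from statistics import mean

# Hypothetical linear severity model: score = sum(weight * feature value).
WEIGHTS = {"alt_ratio": 0.30, "n_concomitant": 0.05, "age_decades": 0.02}

# Illustrative cases (not real data).
CASES = [
    {"alt_ratio": 5.0, "n_concomitant": 2, "age_decades": 7},
    {"alt_ratio": 1.0, "n_concomitant": 6, "age_decades": 4},
    {"alt_ratio": 3.0, "n_concomitant": 1, "age_decades": 5},
    {"alt_ratio": 1.0, "n_concomitant": 3, "age_decades": 8},
]

feature_means = {f: mean(c[f] for c in CASES) for f in WEIGHTS}


def predict(case):
    return sum(WEIGHTS[f] * case[f] for f in WEIGHTS)


def local_contributions(case):
    """Local explanation: per-feature contribution for one case."""
    return {f: WEIGHTS[f] * (case[f] - feature_means[f]) for f in WEIGHTS}


def global_importance(cases):
    """Global explanation: mean absolute contribution across all cases."""
    per_case = [local_contributions(c) for c in cases]
    return {f: mean(abs(p[f]) for p in per_case) for f in WEIGHTS}


local = local_contributions(CASES[0])
overall = global_importance(CASES)
mean_prediction = mean(predict(c) for c in CASES)

print("case 0:", {f: round(v, 3) for f, v in local.items()})
print("global:", {f: round(v, 3) for f, v in overall.items()})
```

The local view supports review of a single case. The global view is what a validation team would compare against pharmacological expectations, for example by checking that liver enzyme levels, rather than an incidental field, dominate a hepatotoxicity model.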

Computational intensity represents SHAP’s primary limitation. Calculating exact SHAP values for an arbitrary model scales exponentially with the number of features, so it quickly becomes impractical. In practice, TreeSHAP computes exact values efficiently for tree-based models, while KernelSHAP offers a sampling-based approximation for any model with an acceptable accuracy-speed tradeoff.
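
The accuracy-speed tradeoff can be illustrated with a generic Monte Carlo estimator that averages marginal contributions over random feature orderings. This is a simplified permutation-sampling sketch, not the KernelSHAP or TreeSHAP algorithm, and the scoring function and feature names are hypothetical.

```python
import random

CASE = {"alt_elevated": 1.0, "concomitant_drug": 1.0, "age_over_65": 1.0}
BASELINE = {"alt_elevated": 0.0, "concomitant_drug": 0.0, "age_over_65": 0.0}


def toy_model(x):
    """Hypothetical hepatotoxicity score with one interaction term."""
    return (0.10 + 0.40 * x["alt_elevated"] + 0.20 * x["concomitant_drug"]
            + 0.10 * x["age_over_65"]
            + 0.10 * x["alt_elevated"] * x["concomitant_drug"])


def sampled_shapley(model, case, baseline, n_permutations=2000, seed=0):
    """Estimate Shapley values by averaging over random feature orderings."""
    rng = random.Random(seed)
    names = list(case)
    totals = {f: 0.0 for f in names}
    for _ in range(n_permutations):
        order = names[:]
        rng.shuffle(order)
        x = dict(baseline)          # start from the baseline case
        previous = model(x)
        for f in order:
            x[f] = case[f]          # switch one feature to its real value
            current = model(x)
            totals[f] += current - previous
            previous = current
    return {f: t / n_permutations for f, t in totals.items()}


estimate = sampled_shapley(toy_model, CASE, BASELINE)
for name, value in estimate.items():
    print(f"{name}: {value:+.3f}")
```

The cost is `n_permutations` times the number of features in model evaluations, so it grows linearly rather than exponentially. Fewer permutations run faster but give noisier attributions, which is the tradeoff a deployment has to tune.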

LIME (Local Interpretable Model-agnostic Explanations) takes a different approach to explainability by approximating complex model behavior with simpler, interpretable models in localized regions of the feature space. When applied to a specific prediction, LIME perturbs the input data, observes how predictions change, and fits an interpretable linear model to these perturbed samples.

For pharmacovigilance text analysis—such as adverse event narrative classification—LIME is particularly effective. When an AI model categorizes a case narrative as describing anaphylaxis, LIME can highlight which words or phrases in the text most strongly influenced that classification. Terms like “facial swelling,” “difficulty breathing,” or “epinephrine administration” might be identified as key contributors, allowing safety reviewers to quickly assess whether the model focused on clinically relevant information.
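
The perturb, observe, and fit loop can be sketched for text as follows. This is a simplified LIME-style illustration, not the `lime` package itself: the keyword-based `black_box` classifier, the sample narrative, the proximity kernel, and the small ridge penalty are all assumptions made for the example.

```python
import math
import random


def black_box(text):
    """Hypothetical stand-in classifier: P(narrative describes anaphylaxis)."""
    cues = {"swelling": 1.5, "breathing": 2.0, "epinephrine": 2.5}
    z = -3.0 + sum(w for cue, w in cues.items() if cue in text.lower())
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_text(text, predict, n_samples=500, kernel_width=0.75,
              ridge=1e-3, seed=0):
    """Fit a weighted linear surrogate on word-dropout perturbations."""
    rng = random.Random(seed)
    tokens = text.split()
    d = len(tokens)
    rows, targets, weights = [], [], []
    for i in range(n_samples):
        # The first sample is the unperturbed narrative.
        mask = [1] * d if i == 0 else [rng.randint(0, 1) for _ in range(d)]
        perturbed = " ".join(t for t, keep in zip(tokens, mask) if keep)
        distance = 1.0 - sum(mask) / d          # fraction of words removed
        rows.append(mask + [1])                 # last column is the intercept
        targets.append(predict(perturbed))
        weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
    p = d + 1
    # Weighted ridge normal equations: (X'WX + ridge * I) beta = X'Wy
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             + (ridge if i == j else 0.0) for j in range(p)] for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(p)]
    beta = solve(xtwx, xtwy)
    return sorted(zip(tokens, beta[:d]), key=lambda kv: -abs(kv[1]))


NARRATIVE = ("Patient developed facial swelling and difficulty breathing "
             "after dose; epinephrine given")
explanation = lime_text(NARRATIVE, black_box)
for token, weight in explanation[:3]:
    print(f"{token}: {weight:+.3f}")
```

The surrogate's largest coefficients identify the words whose removal most changes the prediction, which is the information a reviewer would see highlighted in the narrative. Changing `kernel_width`, `n_samples`, or the seed shifts the coefficients somewhat, so explanation stability is worth checking before relying on a single run.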

The technique’s model-agnostic nature and modest per-prediction cost make it practical for interactive use. As safety professionals review AI-assisted case classifications, LIME explanations can usually be generated on demand without significant latency, provided the underlying model is fast enough to score the perturbed samples each explanation requires.

However, LIME’s local approximation approach introduces certain limitations. Explanations apply only to the immediate vicinity of a specific prediction and may not accurately represent model behavior in other regions of the feature space. The perturbation strategy and the choice of interpretable surrogate model also introduce parameters that can affect explanation quality and stability.

In pharmacovigilance workflows, LIME excels at supporting case-by-case review where safety officers need rapid, intuitive explanations of individual predictions. For systematic model validation or identifying global biases, complementary techniques like SHAP or feature importance analysis provide more comprehensive insights.

Attention mechanisms, originally developed for natural language processing and computer vision, offer inherent explainability by revealing which input elements a model focuses on when making predictions. In attention-based neural networks, the model explicitly learns to assign importance weights to different parts of the input, and these weights can be visualized as explanations.

For pharmacovigilance applications involving sequential or text data—adverse event narratives, medical literature, or temporal patient records—attention mechanisms are particularly powerful. When an attention-based model processes a case narrative to predict adverse event outcomes, the attention weights show which sentences or medical terms most influenced the prediction.
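
As a rough illustration of where attention weights come from, the sketch below computes scaled dot-product attention for a single query over a short token sequence. The two-dimensional key vectors and the query are hypothetical hand-picked numbers; in a trained model they would be learned and much higher-dimensional.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over key vectors."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


TOKENS = ["rash", "appeared", "two", "hours", "after", "infusion"]
# Hypothetical key vectors, one per token (a trained model would learn these).
KEYS = [[2.0, 0.5], [0.1, 0.2], [0.3, 1.5], [0.4, 1.8], [0.2, 0.6], [1.5, 0.3]]
# Hypothetical query vector, e.g. for a classification token.
QUERY = [1.0, 1.0]

weights = attention_weights(QUERY, KEYS)
ranked = sorted(zip(TOKENS, weights), key=lambda tw: -tw[1])
for token, weight in ranked:
    print(f"{token:10s} {weight:.3f}")
```

The weights are non-negative and sum to one, which is what makes them easy to render as a heatmap over the narrative. A real transformer produces one such distribution per head and per layer, so any visualization involves a choice about which of them to show or how to aggregate them.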

Unlike post-hoc explanation methods like SHAP or LIME, attention weights are an intrinsic part of the model architecture and prediction process. This integration means explanations are computationally cheap to generate and are read directly from the model’s own computation rather than from a separate surrogate, although that alone does not guarantee they identify what actually drove the prediction.

Transformer architectures, which use multi-head self-attention, have become dominant in natural language processing tasks relevant to pharmacovigilance: entity extraction from case reports, medical coding, literature surveillance, and narrative summarization. The attention visualizations from these models provide multi-layered insights—showing not just which words matter, but how different attention heads capture distinct semantic relationships within the text.

Limitations exist, however. Research has shown that attention weights do not always provide faithful explanations of model behavior. High attention weights indicate what the model focused on, but not necessarily what caused the prediction. Models can attend to irrelevant features while making decisions based on other factors. Additionally, attention mechanisms typically explain intermediate processing steps rather than the complete causal chain from input to output.

Insight: In pharmacovigilance practice, combining multiple XAI techniques often provides the most robust understanding. Use attention mechanisms for rapid insight during text processing, LIME for quick case-level explanations, and SHAP for comprehensive validation and global model understanding.

Implementing XAI techniques addresses only part of the trust equation in pharmacovigilance. Translating technical explanations into meaningful transparency requires careful attention to regulatory requirements, organizational workflows, and user needs.

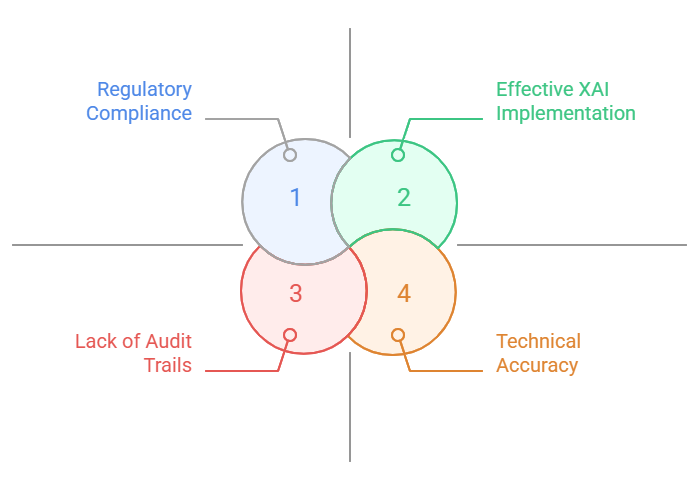

Regulatory frameworks increasingly mandate not just accuracy metrics but also evidence that AI systems make decisions for scientifically valid reasons. The FDA’s proposed guidance on AI in drug and biological product development emphasizes the importance of understanding model behavior, identifying potential biases, and maintaining human oversight. The EMA’s reflection paper on AI in medicines regulation similarly stresses transparency, traceability, and the ability to explain individual decisions.

Documentation practices must evolve to meet these expectations. Validation reports should include not only performance statistics but also systematic analyses of model reasoning patterns. Are the features driving predictions clinically plausible? Does the model rely on spurious correlations or artifacts in training data? Do explanations remain consistent across different patient populations and adverse event types?

XAI enables this deeper validation by making model reasoning accessible to pharmacovigilance experts who can assess clinical validity. When SHAP analysis reveals that an adverse event severity model heavily weights laboratory values, clinical experts can verify whether those laboratory markers are actually predictive of severity in medical literature. When attention visualizations show a text classifier focusing on temporal expressions like “onset within hours,” domain experts can confirm this aligns with known adverse event timelines.

User interface design plays a critical role in making XAI practically useful. Raw SHAP values or attention heatmaps are not inherently intuitive to safety professionals without data science backgrounds. Effective implementations translate technical explanations into domain-relevant formats: highlighting key terms in case narratives, presenting feature contributions as natural language explanations, or visualizing decision boundaries in clinically meaningful ways.
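
One way to present feature contributions as natural language is a small rendering function like the sketch below. The feature labels, contribution values, and sentence template are hypothetical; a production interface would use wording agreed with safety reviewers.

```python
def describe_contributions(contributions, top_k=3):
    """Render the top_k largest contributions as a short sentence."""
    ranked = sorted(contributions.items(), key=lambda kv: -abs(kv[1]))[:top_k]
    parts = []
    for label, value in ranked:
        direction = "raised" if value > 0 else "lowered"
        parts.append(f"{label} {direction} the score by {abs(value):.2f}")
    return "; ".join(parts) + "."


# Hypothetical per-feature contributions for one case.
contributions = {
    "Elevated ALT": 0.45,
    "Concomitant hepatotoxic drug": 0.25,
    "Age over 65": 0.10,
    "Short time to onset": -0.05,
}

summary = describe_contributions(contributions)
print(summary)
```

Limiting the summary to a few top factors keeps it readable, but the full set of contributions should remain available on request so that a reviewer can see what was left out.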

Training and change management are equally essential. Safety teams need education not just in how to interpret XAI outputs, but in understanding the capabilities and limitations of these explanations. XAI does not eliminate the need for expert judgment—it augments it by making AI reasoning transparent enough for experts to apply their knowledge effectively.

Audit trail requirements also shape XAI implementation. Regulatory inspections may require demonstrating why an AI system made specific classifications months or years after the fact. This necessitates archiving not just predictions but also explanations, model versions, and training data characteristics. Some organizations implement explanation logging systems that capture SHAP values or attention weights for every AI-assisted decision, ensuring retrospective auditability.
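
An explanation log entry might look like the following sketch, which stores the prediction together with its explanation and model version and derives a SHA-256 fingerprint so later tampering can be detected. The record fields, identifiers, and values are hypothetical; actual retention and integrity controls depend on an organization's quality system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExplanationRecord:
    case_id: str
    model_version: str
    prediction: str
    explanation_method: str
    contributions: dict
    created_at: str


def make_record(case_id, model_version, prediction, method, contributions):
    """Build a record stamped with the current UTC time."""
    return ExplanationRecord(
        case_id=case_id,
        model_version=model_version,
        prediction=prediction,
        explanation_method=method,
        contributions=dict(contributions),
        created_at=datetime.now(timezone.utc).isoformat(),
    )


def fingerprint(record):
    """SHA-256 digest of the record's canonical JSON form."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def verify(record, expected_digest):
    """Check that a stored record still matches its original digest."""
    return fingerprint(record) == expected_digest


# Hypothetical identifiers and values, for illustration only.
record = make_record(
    case_id="CASE-0001",
    model_version="seriousness-clf-1.4.2",
    prediction="serious",
    method="shap",
    contributions={"hospitalization": 0.52, "age_over_65": 0.08},
)
digest = fingerprint(record)
tampered = replace(record, prediction="non-serious")
print(verify(record, digest), verify(tampered, digest))
```

Storing the digest separately from the record, for example in an append-only store, lets an inspector confirm that the explanation shown during an audit is the one generated at decision time.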

The trust-building process extends beyond regulatory compliance to organizational culture. When safety professionals see that AI explanations align with their expertise, flag the same risk factors they would identify manually, and highlight cases that genuinely warrant attention, confidence grows organically. Conversely, when explanations reveal concerning patterns—models relying on report submission dates rather than clinical content, for example—XAI enables course correction before deployment.

XAI is being deployed across diverse pharmacovigilance functions, demonstrating tangible value in operational settings.

Adverse event case processing represents a primary application area. Large pharmaceutical companies receive thousands of spontaneous reports requiring triage, classification, and medical review. AI systems augmented with XAI can automatically classify case seriousness while highlighting which report elements drove the classification. When a model classifies a case as “serious” based on hospitalization information and specific adverse events, LIME explanations can show safety reviewers exactly which narrative passages triggered this determination, enabling rapid verification.

Signal detection workflows benefit substantially from explainable models. Traditional disproportionality analysis produces statistical signals—unexpected associations between drugs and adverse events—but offers limited mechanistic insight. Modern AI approaches incorporating natural language processing can identify emerging signals from unstructured text sources like social media, medical literature, and clinician notes. XAI techniques reveal which specific reports, temporal patterns, or co-reported events contributed to signal generation, supporting the critical transition from statistical anomaly to evidence-based safety concern.
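
For readers less familiar with disproportionality analysis, the sketch below computes two standard measures, the proportional reporting ratio (PRR) and the reporting odds ratio (ROR), from a 2x2 table of report counts. The counts are invented for illustration, and thresholds for calling a result a signal vary by organization.

```python
import math

# 2x2 table of spontaneous report counts (hypothetical numbers):
#                      event of interest   all other events
# drug of interest            a                   b
# all other drugs             c                   d


def prr(a, b, c, d):
    """Proportional reporting ratio."""
    return (a / (a + b)) / (c / (c + d))


def ror(a, b, c, d):
    """Reporting odds ratio."""
    return (a * d) / (b * c)


def ror_ci95(a, b, c, d):
    """Approximate 95% confidence interval for the ROR (log-normal)."""
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_ror = math.log(ror(a, b, c, d))
    return math.exp(log_ror - 1.96 * se), math.exp(log_ror + 1.96 * se)


a, b, c, d = 20, 980, 100, 98900
low, high = ror_ci95(a, b, c, d)
print(f"PRR = {prr(a, b, c, d):.1f}")
print(f"ROR = {ror(a, b, c, d):.1f} (95% CI {low:.1f} to {high:.1f})")
```

These ratios say that an event is reported more often than expected with a drug, but nothing about which reports or clinical features drive the association. That is the gap the explainable approaches described here are meant to fill.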

One major pharmaceutical manufacturer implemented SHAP-based explanations in their automated signal prioritization system. The system processes thousands of potential signals monthly, ranking them by predicted clinical significance. SHAP values expose which factors—event severity, biological plausibility, temporal relationships, patient vulnerability—most influenced each ranking. This transparency allowed safety committees to trust the prioritization, reducing manual review burden by 40% while increasing confidence that genuinely concerning signals received appropriate attention.

Causality assessment, perhaps pharmacovigilance’s most judgment-intensive task, is also being augmented with XAI. Models trained on historical causality decisions can suggest assessments for new cases. Attention-based architectures processing case narratives highlight the medical history elements, concomitant medications, and temporal sequences that influenced the prediction. Rather than replacing expert causality assessment, these systems provide structured evidence summaries that safety physicians can review and critique, accelerating analysis while maintaining human accountability.

Literature surveillance applications demonstrate XAI’s value in managing information overload. AI systems monitoring medical publications for safety-relevant findings can process thousands of articles daily, far exceeding human capacity. When a model flags an article as describing a novel adverse event, attention mechanisms show which specific sentences or findings triggered the alert. Safety scientists can immediately focus on the pertinent information rather than reading entire papers, dramatically improving surveillance efficiency.

Regulatory reporting is another frontier. Some organizations are exploring AI-assisted generation of safety narratives for regulatory submissions. XAI helps ensure these narratives accurately reflect case information by showing which data elements the generation model emphasized. This transparency is essential for maintaining the scientific integrity and legal defensibility of regulatory communications.

Real-world evidence integration increasingly relies on XAI. When analyzing electronic health records or claims databases to identify safety signals, explainable models can reveal whether predictions rely on clinically meaningful patterns or dataset artifacts. If a model identifies a drug-outcome association primarily based on billing codes known to be unreliable proxies for actual diagnoses, XAI can expose this weakness before flawed signals reach regulatory authorities.

Despite significant progress, XAI in pharmacovigilance faces ongoing challenges that will shape future development and adoption.

Explanation fidelity remains a fundamental concern. XAI techniques provide approximations of model behavior, not perfect representations of internal computations. LIME explanations can vary based on perturbation strategies, SHAP approximations may not capture complex feature interactions, and attention weights do not always reflect true causal relationships. Safety decisions based on potentially imprecise explanations carry risks. The field needs rigorous methods for validating explanations themselves—essentially, explainable explanations that demonstrate when and why XAI outputs can be trusted.

Computational costs present practical barriers, particularly for real-time applications. Generating SHAP explanations for complex ensemble models or deep neural networks processing high-dimensional data can require significant computation time. While approximation methods reduce this burden, they introduce accuracy tradeoffs. Organizations must balance explanation quality against operational efficiency, and current XAI tooling does not always provide clear guidance on this tradeoff.

The explanation-accuracy dilemma persists: simpler, more interpretable models often underperform complex black-box alternatives. In pharmacovigilance, where prediction accuracy directly impacts patient safety, accepting lower accuracy for better interpretability is not always defensible. The goal is not to replace powerful models with interpretable ones, but to make powerful models interpretable—a technically challenging objective that remains incompletely solved.

Standardization across the industry is lacking. Different organizations implement XAI using different techniques, thresholds, and presentation formats. This heterogeneity complicates regulatory review, inter-organizational collaboration, and knowledge sharing. The development of industry standards, perhaps through bodies like the ICH or consortia like the Observational Health Data Sciences and Informatics (OHDSI) collaborative, would accelerate adoption and ensure baseline quality.

Human factors research in XAI application to pharmacovigilance is nascent. We understand relatively little about how safety professionals actually use explanations in practice, which explanation formats are most effective for different tasks, or how to prevent overreliance on AI when explanations appear superficially plausible. Cognitive science research on expert decision-making combined with XAI could yield insights into optimal human-AI collaboration patterns.

The regulatory landscape continues to evolve. While agencies recognize the importance of explainability, specific requirements remain somewhat ambiguous. How much explainability is sufficient? What validation evidence for explanations must accompany AI system submissions? How should organizations handle cases where models and explanations conflict with expert judgment? Clearer regulatory guidance would facilitate compliant implementation.

Adversarial considerations are emerging. As XAI becomes a gatekeeper for AI deployment, sophisticated actors might manipulate models to produce plausible-looking explanations while maintaining problematic behavior. Ensuring that XAI cannot be gamed—that explanations faithfully represent decision-making rather than serving as interpretability theater—requires ongoing research in robust and trustworthy AI.

Looking forward, several developments will shape XAI’s trajectory in pharmacovigilance. Causal AI approaches that explicitly model causal relationships rather than purely statistical associations may provide more scientifically meaningful explanations. Explanations grounded in pharmacological mechanisms and clinical knowledge graphs could bridge the gap between statistical patterns and medical understanding. Interactive XAI systems allowing safety professionals to probe models with counterfactual questions, such as “What if the patient had been older?” or “What if the medication had been discontinued earlier?”, would support deeper investigation of model reasoning.
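
A counterfactual probe of this kind can be sketched as a helper that re-scores a case after changing selected features. The risk function, its coefficients, and the feature names are hypothetical, and the probe describes how the model responds to the change, not what would have happened to the patient.

```python
def toy_risk(case):
    """Hypothetical risk score; the coefficients are illustrative only."""
    score = 0.10
    score += 0.01 * max(case["age"] - 50, 0)
    score += 0.02 * case["weeks_on_drug"]
    return min(score, 1.0)


def what_if(model, case, **changes):
    """Re-score a case with some features replaced by counterfactual values."""
    unknown = set(changes) - set(case)
    if unknown:
        raise KeyError(f"unknown features: {sorted(unknown)}")
    before = model(case)
    after = model({**case, **changes})
    return {"before": before, "after": after, "delta": after - before}


CASE = {"age": 45, "weeks_on_drug": 10}

# "What if the patient had been older?"
older = what_if(toy_risk, CASE, age=70)
# "What if the medication had been discontinued earlier?"
stopped_earlier = what_if(toy_risk, CASE, weeks_on_drug=4)

print(f"older:           delta = {older['delta']:+.2f}")
print(f"stopped earlier: delta = {stopped_earlier['delta']:+.2f}")
```

Rejecting unknown feature names guards against a probe that silently changes nothing because of a typo, which would otherwise look like evidence that the model ignores the feature.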

Pro Tip: Invest in building organizational competency in both using and critically evaluating XAI. The ability to question whether an explanation makes sense, recognize when explanations might be misleading, and effectively integrate AI insights with domain expertise will differentiate leaders in AI-enabled pharmacovigilance.

Explainable AI is not merely a technical feature of advanced pharmacovigilance systems—it is the foundation upon which trust, regulatory compliance, and responsible automation rest. As AI capabilities expand across drug safety functions, from routine case processing to complex signal evaluation, the ability to understand, validate, and audit algorithmic decisions becomes increasingly critical.

The techniques discussed—SHAP, LIME, attention mechanisms, and emerging approaches—provide practical tools for making AI transparent. Yet technology alone is insufficient. Building trust requires integrating XAI into validation workflows, designing user interfaces that make explanations actionable for domain experts, establishing audit trails that ensure accountability, and fostering organizational cultures that value transparency alongside accuracy.

The pharmacovigilance community stands at an inflection point. Organizations that view explainability as a regulatory checkbox to satisfy will struggle with implementation and fail to capture the full value of AI. Those that recognize XAI as enabling genuine collaboration between human expertise and machine intelligence—where each augments the other’s capabilities—will lead the next generation of drug safety practice.