Human-in-the-Loop (HITL) AI represents a paradigm where artificial intelligence systems operate in tandem with human expertise, requiring validation, correction, or guidance at critical decision points. In pharmacovigilance (PV), where patient safety and regulatory compliance converge, HITL models offer a balanced approach that leverages machine efficiency while maintaining the irreplaceable judgment of qualified safety professionals.

The relevance of HITL in pharmacovigilance has never been more pronounced. Adverse event reporting systems worldwide process over 20 million Individual Case Safety Reports (ICSRs) annually, with the FDA’s FAERS database alone receiving approximately 2 million reports per year. Manual processing struggles at this volume, often resulting in case backlogs and delayed signal detection.

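Industry evidence points to substantial efficiency gains from HITL implementations, with reported processing-time reductions of 60-75%, accuracy rates of 92-96%, and a 40% decrease in data entry errors.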

These quantitative improvements position HITL as not merely a technological upgrade but a strategic imperative for modern pharmacovigilance operations seeking to balance automation benefits with regulatory expectations and patient safety commitments.

Why HITL Matters: Mitigating AI Limitations Through Expert Validation

The pharmaceutical industry’s cautious approach to full automation in pharmacovigilance stems from legitimate concerns about AI limitations in safety-critical contexts. HITL architectures directly address these concerns by strategically positioning human expertise where it matters most.

AI models, despite sophisticated natural language processing capabilities, face inherent challenges in PV contexts:

Case Triage Complexity: Determining whether an adverse event report meets regulatory criteria for a valid ICSR requires nuanced judgment. AI systems may misclassify borderline cases—such as reports with vague symptom descriptions or unclear temporal relationships—leading to false negatives that could miss genuine safety signals or false positives that overwhelm review queues.

Signal Detection Sensitivity: While AI excels at identifying statistical patterns, distinguishing true safety signals from confounding variables demands contextual medical knowledge. An algorithm might flag increased reporting of headaches with a medication without recognizing that a recent news article caused heightened awareness rather than representing a genuine increased risk.

Medical Coding Ambiguity: Converting free-text adverse event descriptions into standardized MedDRA terms involves semantic interpretation. Terms like “feeling funny” or “not right” require human judgment to accurately code as specific adverse events.

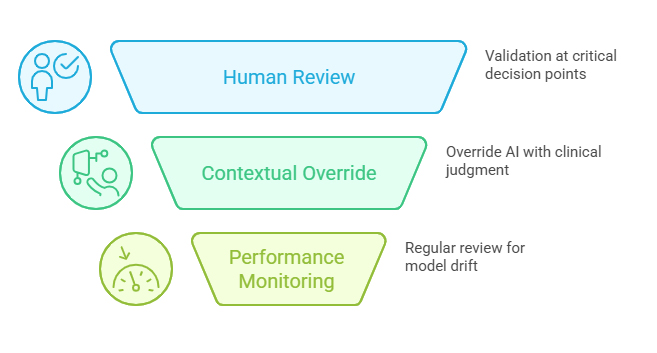

HITL models mitigate these limitations through strategic validation checkpoints:

Quality Gates at Decision Points: Human reviewers validate AI-generated assessments at critical junctures—confirming case validity, approving medical coding, and endorsing causality classifications. This creates multiple safety nets against algorithmic errors propagating through the workflow.

Contextual Override Capability: Safety professionals can override AI recommendations when clinical judgment or product-specific knowledge suggests alternative interpretations, ensuring that automation never supersedes medical expertise in ambiguous situations.

Continuous Performance Monitoring: Regular human review of AI outputs enables early detection of model drift, emerging error patterns, or changing data characteristics that might degrade automated performance.

Regulatory Confidence: Health authorities increasingly recognize HITL approaches as demonstrating appropriate AI governance, with human accountability clearly maintained throughout the safety reporting lifecycle.

The synergy between AI efficiency and human judgment transforms potential automation risks into a robust, validated workflow that satisfies both operational and regulatory requirements.

Key Applications: HITL Across Pharmacovigilance Workflows

HITL architectures deliver value across multiple PV workflows, with implementation strategies tailored to each use case’s specific requirements:

Case Intake and Data Extraction

AI Role: Automated systems extract structured data from diverse source documents (physician reports, patient narratives, literature articles), populate case forms, and suggest medical coding.

Human Validation Points: Safety professionals review and approve: (1) extracted patient demographics and event details for completeness, (2) AI-generated MedDRA coding selections, and (3) seriousness and expectedness classifications before regulatory submission.

Quantitative Impact: Organizations report reducing case intake time from 45 minutes (fully manual) to 12 minutes (HITL) per case while maintaining 95%+ data accuracy. That is a 73% reduction in handling time, or roughly 3.75 times the case throughput for the same staff time, without compromising quality.
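The three validation points above can be thought of as a submission gate. The following is a minimal Python sketch, assuming a hypothetical `IntakeCase` record and checkpoint names chosen for illustration; it only shows the rule that AI-populated fields cannot reach regulatory submission until every checkpoint carries a named reviewer's approval.

```python
from dataclasses import dataclass, field

# Hypothetical checkpoint names mirroring the three human validation points for case intake.
REQUIRED_CHECKPOINTS = (
    "demographics_and_event_details",
    "meddra_coding",
    "seriousness_and_expectedness",
)


@dataclass
class IntakeCase:
    case_id: str
    # Maps checkpoint name -> ID of the safety professional who approved it.
    approvals: dict[str, str] = field(default_factory=dict)

    def approve(self, checkpoint: str, reviewer_id: str) -> None:
        if checkpoint not in REQUIRED_CHECKPOINTS:
            raise ValueError(f"Unknown checkpoint: {checkpoint}")
        self.approvals[checkpoint] = reviewer_id

    def pending_checkpoints(self) -> list[str]:
        return [c for c in REQUIRED_CHECKPOINTS if c not in self.approvals]

    def ready_for_submission(self) -> bool:
        # The case stays blocked until every checkpoint has a human sign-off.
        return not self.pending_checkpoints()


case = IntakeCase("CASE-0001")
case.approve("demographics_and_event_details", "reviewer_a")
case.approve("meddra_coding", "coder_b")
print(case.ready_for_submission())  # False
print(case.pending_checkpoints())   # ['seriousness_and_expectedness']
```

Keeping the reviewer ID per checkpoint, rather than a single "approved" flag, preserves who signed off on which part of the case.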

Signal Detection

AI Role: Algorithms continuously analyze safety databases using disproportionality analysis (reporting odds ratio, ROR; proportional reporting ratio, PRR), Bayesian methods, and temporal pattern recognition to identify potential safety signals among thousands of drug-event combinations.

Human Validation Points: Medical reviewers assess flagged signals for clinical plausibility, filter noise from true risks using product knowledge and mechanism of action, and prioritize investigations based on public health impact.

Quantitative Impact: HITL signal detection reduces false positive rates by 60-70% compared to purely statistical approaches, enabling safety teams to focus investigation resources on clinically meaningful signals rather than algorithmic artifacts.
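To make the statistical step concrete, the sketch below computes PRR and ROR (with a 95% confidence interval) from the standard 2x2 table of report counts for one drug-event pair, then applies a commonly used screening rule (PRR ≥ 2, chi-squared ≥ 4, at least 3 cases). The function names and thresholds are illustrative assumptions; in a HITL workflow a positive result only places the pair in a medical reviewer's queue and does not confirm a signal.

```python
import math


def disproportionality(a: int, b: int, c: int, d: int) -> dict[str, float]:
    """Disproportionality statistics for one drug-event pair.

    a: reports with the drug and the event    b: the drug, other events
    c: other drugs, the event                 d: other drugs, other events
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("This sketch assumes every cell of the 2x2 table is positive")
    prr = (a / (a + b)) / (c / (c + d))
    ror = (a * d) / (b * c)
    # 95% confidence interval for ROR, computed on the log scale.
    se_log_ror = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    ror_lower = math.exp(math.log(ror) - 1.96 * se_log_ror)
    ror_upper = math.exp(math.log(ror) + 1.96 * se_log_ror)
    # Pearson chi-squared without continuity correction (some tools apply Yates' correction).
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return {"prr": prr, "ror": ror, "ror_lower": ror_lower, "ror_upper": ror_upper, "chi2": chi2}


def queue_for_medical_review(a: int, b: int, c: int, d: int) -> bool:
    """Statistical screen only: True means 'a medical reviewer should look', not 'signal confirmed'."""
    if a < 3:
        return False
    stats = disproportionality(a, b, c, d)
    return stats["prr"] >= 2 and stats["chi2"] >= 4


print(queue_for_medical_review(20, 980, 200, 98_800))  # True (PRR ≈ 9.9)
```

The medical reviewer then applies the product knowledge and mechanism-of-action reasoning that the 2x2 table cannot capture, such as recognizing stimulated reporting after media coverage.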

Literature Surveillance

AI Role: Natural language processing scans medical literature databases to identify publications containing adverse event information, then extracts case details for potential ICSR creation.

Human Validation Points: Safety professionals determine whether identified literature cases meet regulatory reporting criteria, resolve ambiguous case information, and ensure appropriate duplicate detection.

Quantitative Impact: HITL literature surveillance systems screen 10-15 times more publications than manual processes while maintaining case identification sensitivity above 93%, significantly reducing the risk of missing reportable cases.

Medical Coding

AI Role: Machine learning models trained on historical coding patterns suggest appropriate MedDRA Preferred Terms (PTs) and System Organ Classes (SOCs) for adverse event descriptions.

Human Validation Points: Certified medical coders review suggested terms, applying clinical judgment for ambiguous descriptions and ensuring consistency with company coding conventions.

Quantitative Impact: HITL coding assistance improves coder productivity by 40-50% while reducing intercoder variability and enhancing standardization across global safety operations.
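One way to quantify the intercoder variability mentioned above is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch assumes two coders each assign a single Preferred Term to the same list of verbatim descriptions; comparing kappa before and after introducing AI suggestions is one possible way to check whether standardization actually improved.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Chance-corrected agreement between two coders on the same verbatim terms."""
    if not coder_a or len(coder_a) != len(coder_b):
        raise ValueError("Both coders must code the same non-empty list of verbatims")
    n = len(coder_a)
    observed = sum(x == y for x, y in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Agreement expected if each coder picked terms independently at their own base rates.
    expected = sum(freq_a[term] * freq_b[term] for term in freq_a) / (n * n)
    if expected == 1.0:
        # Both coders used one identical term throughout; kappa is undefined, treat as perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


coder_a = ["Headache", "Nausea", "Dizziness", "Headache"]
coder_b = ["Headache", "Nausea", "Vertigo", "Headache"]
print(round(cohens_kappa(coder_a, coder_b), 3))  # 0.636
```

Raw agreement here is 75%, but kappa is lower because some of that agreement would be expected by chance given how often each coder uses "Headache".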

Duplicate Detection

AI Role: Algorithms compare incoming reports against existing database entries using fuzzy matching on patient characteristics, event descriptions, and reporter details to identify potential duplicates.

Human Validation Points: Safety professionals make the final determination on suspected duplicates, particularly for complex scenarios involving follow-up information or partial data matches.

Quantitative Impact: HITL duplicate detection achieves 91-95% sensitivity while reducing false duplicate flags by 50% compared to rule-based systems, preventing both duplicate reporting and inappropriate case linking.
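The fuzzy matching step can be sketched with Python's standard-library `difflib.SequenceMatcher`, which scores string similarity between 0 and 1. The field names, weights, and the 0.8 threshold below are hypothetical; a production system would tune them on adjudicated duplicate pairs and would likely use stronger matching than character similarity. The functions only propose candidates: the final duplicate determination stays with a safety professional.

```python
from difflib import SequenceMatcher

# Hypothetical fields and weights (summing to 1.0); real values would be tuned on adjudicated pairs.
FIELD_WEIGHTS = {"patient_initials": 0.2, "birth_year": 0.2, "event_term": 0.3, "reporter": 0.3}


def field_similarity(x: str, y: str) -> float:
    x, y = x.strip().lower(), y.strip().lower()
    if not x or not y:
        return 0.0  # A missing value is treated as no evidence of a match.
    return SequenceMatcher(None, x, y).ratio()


def duplicate_score(new: dict[str, str], existing: dict[str, str]) -> float:
    return sum(
        weight * field_similarity(new.get(name, ""), existing.get(name, ""))
        for name, weight in FIELD_WEIGHTS.items()
    )


def suspected_duplicates(new: dict[str, str], database: dict[str, dict[str, str]],
                         threshold: float = 0.8) -> list[tuple[str, float]]:
    """Candidate duplicates at or above the threshold, best match first, for human adjudication."""
    scored = ((case_id, duplicate_score(new, record)) for case_id, record in database.items())
    return sorted((s for s in scored if s[1] >= threshold), key=lambda s: s[1], reverse=True)


incoming = {"patient_initials": "JD", "birth_year": "1970",
            "event_term": "anaphylactic reaction", "reporter": "Dr Smith"}
database = {
    "CASE-0107": {"patient_initials": "J.D.", "birth_year": "1970",
                  "event_term": "Anaphylactic reaction", "reporter": "Dr. Smith"},
    "CASE-0245": {"patient_initials": "AB", "birth_year": "1985",
                  "event_term": "rash", "reporter": "Nurse Lee"},
}
print([case_id for case_id, _ in suspected_duplicates(incoming, database)])  # ['CASE-0107']
```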

These applications demonstrate that HITL’s value lies not in universal automation but in strategic human involvement at decision points where expertise, judgment, and accountability matter most.

System Design Considerations: Building Robust HITL Infrastructure

Implementing effective HITL AI systems in pharmacovigilance requires thoughtful architectural decisions that balance automation efficiency with regulatory requirements and long-term sustainability.

Feedback Loop Architecture: Well-designed HITL systems capture human corrections, overrides, and validation decisions as training data for model refinement. When a safety professional changes an AI-suggested MedDRA code or reclassifies a case, the system should log this feedback to identify patterns in model errors.
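A minimal sketch of such a feedback log, assuming a hypothetical `ReviewFeedback` record per validated field: it keeps every AI suggestion next to the human decision and counts recurring corrections, which are the error patterns most worth feeding into model refinement.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewFeedback:
    case_id: str
    field_name: str      # e.g. "meddra_pt" or "seriousness" (hypothetical field names)
    ai_suggestion: str
    human_decision: str

    @property
    def overridden(self) -> bool:
        return self.ai_suggestion != self.human_decision


def recurring_corrections(log: list[ReviewFeedback], min_count: int = 2):
    """(field, AI suggestion, human correction) triples seen at least min_count times, most frequent first."""
    counts = Counter(
        (fb.field_name, fb.ai_suggestion, fb.human_decision) for fb in log if fb.overridden
    )
    return [(pattern, n) for pattern, n in counts.most_common() if n >= min_count]


log = [
    ReviewFeedback("C1", "meddra_pt", "Dizziness", "Vertigo"),
    ReviewFeedback("C2", "meddra_pt", "Dizziness", "Vertigo"),
    ReviewFeedback("C3", "meddra_pt", "Headache", "Headache"),
    ReviewFeedback("C4", "seriousness", "non-serious", "serious"),
]
print(recurring_corrections(log))  # [(('meddra_pt', 'Dizziness', 'Vertigo'), 2)]
```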

Active Learning Strategies: Implementing active learning allows AI systems to identify cases where they have low confidence and prioritize those for human review, creating efficient learning cycles. Cases where the model is uncertain provide the most valuable training signal when validated by experts.
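Least-confidence sampling is one simple way to implement this prioritization (margin- and entropy-based scores are common alternatives). The sketch assumes the model returns a class-probability list per case, for example over hypothetical valid/invalid ICSR labels, and ranks cases so that the least certain ones reach expert reviewers first.

```python
def least_confidence(probabilities: list[float]) -> float:
    """Uncertainty score: 1 minus the probability of the model's top prediction."""
    return 1.0 - max(probabilities)


def prioritize_for_review(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Return up to `budget` case IDs, most uncertain first."""
    ranked = sorted(predictions, key=lambda cid: least_confidence(predictions[cid]), reverse=True)
    return ranked[:budget]


predictions = {
    "CASE-01": [0.97, 0.03],
    "CASE-02": [0.55, 0.45],
    "CASE-03": [0.70, 0.30],
    "CASE-04": [0.51, 0.49],
}
print(prioritize_for_review(predictions, budget=2))  # ['CASE-04', 'CASE-02']
```

In a PV setting the remaining cases still pass through the normal validation checkpoints; the ranking only decides which cases get expert attention first and which validated labels are most informative for retraining.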

Version Control and A/B Testing: Maintaining rigorous version control of AI models and conducting controlled A/B tests of model updates ensures that improvements are validated before full deployment. This prevents regression in model performance and provides evidence-based justification for changes.
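When the current and candidate model versions can both be scored on the same human-validated cases, McNemar's test is one way to run the controlled comparison: it looks only at the cases where the two versions disagree on correctness. The sketch below uses the exact binomial form. This is a paired offline comparison rather than live traffic splitting, and the promotion rule (the candidate must win more discordant cases and reach p < 0.05) is an illustrative assumption, not a regulatory standard.

```python
from math import comb


def mcnemar_exact_p(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value.

    b: cases the current model got right and the candidate got wrong
    c: cases the candidate got right and the current model got wrong
    """
    n = b + c
    if n == 0:
        return 1.0
    # Under the null hypothesis, discordant cases split 50/50 between the two models.
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2**n
    return min(1.0, 2 * tail)


def promote_candidate(current_correct: list[bool], candidate_correct: list[bool],
                      alpha: float = 0.05) -> bool:
    if len(current_correct) != len(candidate_correct):
        raise ValueError("Both models must be scored on the same validation cases")
    pairs = list(zip(current_correct, candidate_correct))
    b = sum(cur and not cand for cur, cand in pairs)
    c = sum(cand and not cur for cur, cand in pairs)
    return c > b and mcnemar_exact_p(b, c) < alpha


# 100 validated cases: both right on 86, current-only right on 2, candidate-only right on 12.
current = [True] * 86 + [True] * 2 + [False] * 12
candidate = [True] * 86 + [False] * 2 + [True] * 12
print(round(mcnemar_exact_p(2, 12), 4))       # 0.0129
print(promote_candidate(current, candidate))  # True
```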

Domain Adaptation: As new products enter the portfolio or safety information evolves, HITL systems must adapt. Building mechanisms for rapid fine-tuning on product-specific case patterns enables models to maintain performance as the PV environment changes.

Transparent Decision Trails: Every AI recommendation and human validation must be timestamped and attributed. Audit trails should capture what the AI suggested, what the human decided, the rationale for any changes, and the qualified professional’s credentials.
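A minimal sketch of such a trail, assuming an in-memory append-only list: each entry records what the AI suggested, what the reviewer decided, the rationale, and the reviewer's identity and qualification, and stores a SHA-256 hash of the previous entry so that later edits become detectable. The hash chaining is an illustrative choice for tamper evidence; a validated safety database would normally provide its own audit-trail mechanism.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


class AuditTrail:
    """Append-only, hash-chained log of AI suggestions and human decisions."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, case_id: str, ai_suggestion: str, human_decision: str,
               rationale: str, reviewer_id: str, reviewer_qualification: str) -> dict:
        if human_decision != ai_suggestion and not rationale.strip():
            raise ValueError("Overriding an AI suggestion requires a documented rationale")
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "case_id": case_id,
            "ai_suggestion": ai_suggestion,
            "human_decision": human_decision,
            "rationale": rationale,
            "reviewer_id": reviewer_id,
            "reviewer_qualification": reviewer_qualification,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """True if every entry is intact and still chained to its predecessor."""
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("CASE-0001", "Dizziness", "Vertigo", "Verbatim describes a spinning sensation",
             "reviewer_a", "Medical coder")
trail.record("CASE-0001", "Serious", "Serious", "", "physician_b", "Safety physician")
print(trail.verify())  # True
trail.entries[0]["human_decision"] = "Headache"  # simulate tampering
print(trail.verify())  # False
```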

Explainable AI Integration: Regulators increasingly expect explanations for AI-driven decisions. Systems should provide interpretable rationale for classifications—showing which text passages influenced coding suggestions or which factors drove seriousness assessments.

Validation Documentation: Regulatory submissions require evidence that AI components are appropriately validated. HITL systems should maintain ongoing performance metrics (accuracy, precision, recall) across case types, with documented validation protocols and acceptance criteria.
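Tracking these metrics per case type, rather than as one pooled number, keeps a weak segment from hiding behind a strong overall average. The sketch below assumes confusion-matrix counts from a human-adjudicated validation sample; the acceptance criteria and the counts are made up for illustration, and real values belong in the documented validation protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # e.g. valid ICSRs correctly identified
    fp: int
    fn: int
    tn: int

    @property
    def accuracy(self) -> float:
        total = self.tp + self.fp + self.fn + self.tn
        return (self.tp + self.tn) / total if total else 0.0

    @property
    def precision(self) -> float:
        predicted = self.tp + self.fp
        return self.tp / predicted if predicted else 0.0

    @property
    def recall(self) -> float:  # also called sensitivity
        actual = self.tp + self.fn
        return self.tp / actual if actual else 0.0


# Hypothetical acceptance criteria for illustration only.
ACCEPTANCE_CRITERIA = {"accuracy": 0.90, "precision": 0.85, "recall": 0.95}


def failing_case_types(results: dict[str, ConfusionCounts]) -> dict[str, list[str]]:
    """Map each case type to the metrics that miss their acceptance criteria."""
    failures: dict[str, list[str]] = {}
    for case_type, counts in results.items():
        missed = [m for m, floor in ACCEPTANCE_CRITERIA.items() if getattr(counts, m) < floor]
        if missed:
            failures[case_type] = missed
    return failures


results = {
    "spontaneous": ConfusionCounts(tp=190, fp=10, fn=5, tn=795),
    "literature": ConfusionCounts(tp=80, fp=5, fn=20, tn=895),
}
print(failing_case_types(results))  # {'literature': ['recall']}
```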

Right to Audit Considerations: Third-party AI vendors must provide sufficient system transparency to support regulatory audits. Organizations should negotiate contractual rights to access model performance data and validation evidence when using external AI tools.

Cognitive Load Management: HITL interfaces should present AI suggestions in ways that support rather than overwhelm human reviewers. Highlighting the most critical validation points, using visual confidence indicators, and providing context-appropriate information density reduces reviewer fatigue.

Validation Efficiency: The system should make validation quick and intuitive—using keyboard shortcuts, smart defaults, and streamlined approval workflows. If validation becomes tedious, safety professionals may develop workaround behaviors that undermine quality.

Role-Based Validation: Different validation checkpoints may require different expertise levels. Configurable workflows that route cases to appropriate reviewers based on complexity ensure efficient resource allocation while maintaining quality standards.
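Configurable routing can be as simple as an ordered set of rules. In the sketch below the reviewer roles, the 0.6 confidence cut-off, and the rule order are all hypothetical; the point is that seriousness and low model confidence escalate a case to the most qualified reviewer before any other rule is considered.

```python
from enum import Enum


class ReviewerRole(Enum):
    CASE_PROCESSOR = "case processor"
    MEDICAL_CODER = "medical coder"
    SAFETY_PHYSICIAN = "safety physician"


# Hypothetical cut-off below which the model's output is treated as unreliable.
LOW_CONFIDENCE = 0.6


def route_case(is_serious: bool, ai_confidence: float, ambiguous_coding: bool) -> ReviewerRole:
    """Ordered routing rules: the first matching rule decides the reviewer role."""
    if is_serious or ai_confidence < LOW_CONFIDENCE:
        # Serious cases and low-confidence AI output escalate to the most qualified reviewer.
        return ReviewerRole.SAFETY_PHYSICIAN
    if ambiguous_coding:
        return ReviewerRole.MEDICAL_CODER
    return ReviewerRole.CASE_PROCESSOR


print(route_case(is_serious=False, ai_confidence=0.92, ambiguous_coding=True).value)   # medical coder
print(route_case(is_serious=True, ai_confidence=0.99, ambiguous_coding=False).value)  # safety physician
```

Keeping the rules in one ordered function makes the routing logic easy to document, review, and change under change control.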

Training and Change Management: Successful HITL adoption requires comprehensive training programs that help safety professionals understand AI capabilities and limitations, fostering appropriate trust calibration rather than over-reliance or excessive skepticism.

Statistical Process Control: Implementing ongoing monitoring of AI performance metrics (accuracy rates, false positive/negative trends, processing times) enables early detection of model degradation or data quality issues before they impact patient safety.
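A p-chart is a standard statistical process control tool for this kind of monitoring. The sketch assumes a baseline period of QC-sampled cases with known error counts, sets 3-sigma limits around the baseline error rate, and flags a new period whose error rate falls outside them; the weekly sample of 500 cases and the counts are illustrative, not real data.

```python
import math


def p_chart_limits(baseline_errors: list[int], baseline_sizes: list[int], n: int) -> tuple[float, float]:
    """3-sigma control limits for an error proportion measured on a sample of size n."""
    p_bar = sum(baseline_errors) / sum(baseline_sizes)
    sigma = math.sqrt(p_bar * (1 - p_bar) / n)
    return max(0.0, p_bar - 3 * sigma), min(1.0, p_bar + 3 * sigma)


def out_of_control(errors: int, n: int, baseline_errors: list[int], baseline_sizes: list[int]) -> bool:
    lower, upper = p_chart_limits(baseline_errors, baseline_sizes, n)
    return not lower <= errors / n <= upper


baseline_errors = [20, 18, 22, 19, 21, 20, 17, 23]  # 8 weeks of QC findings (illustrative)
baseline_sizes = [500] * 8
print(out_of_control(21, 500, baseline_errors, baseline_sizes))  # False
print(out_of_control(45, 500, baseline_errors, baseline_sizes))  # True
```

A point below the lower limit also deserves investigation: an unexpectedly low error rate can indicate that QC sampling or error capture has broken rather than that the model improved.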

Human Validator Performance: HITL systems should also monitor human performance—tracking validation times, override patterns, and consistency among reviewers to identify training needs or process improvement opportunities.

Escalation Protocols: Clear protocols for handling edge cases, system failures, or situations where AI confidence is very low ensure that safety-critical decisions never occur without appropriate oversight, regardless of automation level.

These design considerations transform HITL from a simple “AI plus human review” concept into a sophisticated, validated system that meets the pharmaceutical industry’s exacting standards for safety data management.

Conclusion: HITL as the Foundation for Responsible AI in Pharmacovigilance

Human-in-the-Loop AI models represent the optimal path forward for pharmacovigilance automation—capturing the efficiency and scalability of artificial intelligence while preserving the medical judgment, contextual reasoning, and accountability that patient safety demands. The quantitative evidence is compelling: 60-75% processing time reductions, 92-96% accuracy rates, and 40% decreases in data entry errors demonstrate that HITL delivers measurable operational value.

Beyond metrics, HITL architectures align with the broader regulatory and ethical principles governing AI adoption in healthcare. As regulatory agencies worldwide develop AI governance frameworks—from the FDA’s AI/ML guidance to the European Medicines Agency’s reflections on AI use—the consistent theme is appropriate human oversight at critical decision points. HITL inherently satisfies this requirement by design rather than as an afterthought.

The strategic advantages extend beyond compliance. HITL systems build organizational AI competency gradually, allowing safety teams to develop trust in automation, understand AI capabilities and limitations, and evolve governance frameworks through practical experience. This measured approach reduces implementation risk compared to aggressive full-automation strategies that may encounter regulatory resistance or quality issues.

Looking ahead, pharmacovigilance organizations should view HITL not as a compromise between automation and human expertise but as a synergistic model that amplifies both. As AI capabilities advance—incorporating large language models, multimodal data processing, and more sophisticated reasoning—the human validation layer ensures these technologies enhance rather than compromise the fundamental mission of pharmacovigilance: protecting patient safety through rigorous, accountable adverse event monitoring.

Organizations embarking on AI transformation in safety operations should prioritize HITL architectures, invest in the technical infrastructure for continuous learning and auditability, and foster a culture where AI is positioned as a tool that elevates human expertise rather than replaces it. This approach positions pharmacovigilance functions to capture automation’s benefits while maintaining the trust of regulators, healthcare professionals, and most importantly, the patients whose safety depends on these systems.

Frequently Asked Questions

Q: How does HITL AI differ from traditional manual pharmacovigilance processes?

A: HITL AI automates routine data extraction, classification, and pattern recognition tasks while requiring human validation at critical decision points. Unlike fully manual processes where humans perform every step, HITL handles repetitive work algorithmically but ensures medical judgment and accountability remain central. This typically reduces processing time by 60-75% compared to manual workflows.

Q: What level of AI accuracy is required before implementing HITL in production PV workflows?

A: Industry best practice suggests AI components should demonstrate 85-90% accuracy on validation datasets before HITL deployment, with continuous monitoring to maintain 92-96% accuracy post-validation. However, accuracy thresholds should be risk-stratified—higher requirements for critical classifications like seriousness determinations versus more tolerance for initial data extraction where human review will catch errors.

Q: How do regulatory agencies view HITL approaches in pharmacovigilance?

A: Regulatory agencies generally view HITL favorably as it maintains clear human accountability while leveraging technology efficiencies. The FDA, EMA, and other authorities emphasize appropriate validation, transparent audit trails, and qualified person oversight—all inherent to HITL models. Organizations should document AI validation, maintain decision traceability, and ensure procedures clearly define human responsibilities.

Q: Can HITL systems learn from human corrections to improve over time?

A: Yes, well-designed HITL systems capture human validation decisions, corrections, and overrides as feedback data for model refinement. This continuous learning approach enables AI performance to improve as it processes more cases under expert guidance. However, model updates should follow controlled change management processes with revalidation to ensure improvements don’t introduce new errors.

Q: What are the primary challenges in implementing HITL AI for pharmacovigilance?

A: Key challenges include: (1) integrating AI with existing safety databases and workflows, (2) achieving appropriate trust calibration among safety professionals—neither over-reliance nor excessive skepticism, (3) maintaining comprehensive audit trails for regulatory inspection, (4) managing change control as AI models are updated, and (5) ensuring sufficient validation data represents the diversity of real-world case types.

Q: How should organizations measure ROI for HITL AI investments in PV?

A: ROI measurement should encompass multiple dimensions: (1) time savings per case and total processing capacity increase, (2) error rate reductions and quality metric improvements, (3) resource reallocation to higher-value activities like medical review and signal investigation, (4) regulatory inspection outcomes and audit findings, (5) compliance with expedited reporting timelines, and (6) staff satisfaction and retention improvements from reducing tedious manual work.

Q: What happens when the AI component of a HITL system makes an error that the human reviewer misses?

A: This scenario—while uncommon in well-designed HITL systems—is addressed through multiple safeguards: (1) independent QA sampling reviews cases after validation, (2) downstream medical review processes catch errors before significant decisions, (3) ongoing AI performance monitoring detects systematic error patterns, and (4) clear accountability protocols ensure human validators bear responsibility for validated decisions, creating appropriate diligence incentives.

Q: Are HITL models suitable for all types of pharmacovigilance activities?

A: HITL delivers greatest value for high-volume, pattern-based tasks with clear validation criteria—like case intake, medical coding, and literature screening. Activities requiring pure medical judgment (complex causality assessment, benefit-risk evaluation) may use AI as decision support but remain fundamentally human-driven. Strategic deployment focuses HITL where automation can handle routine elements while human expertise validates outputs.