The life sciences industry stands at a pivotal intersection where artificial intelligence promises transformative capabilities in drug discovery, clinical development, manufacturing, and pharmacovigilance, yet operates within one of the most heavily regulated sectors globally. This convergence creates a fundamental tension: the drive to innovate rapidly through AI adoption confronts stringent regulatory requirements designed to protect patient safety, ensure data integrity, and maintain product quality. Responsible AI represents the framework through which life sciences organizations navigate this tension, implementing AI systems that deliver innovation while meeting compliance obligations and ethical standards.

The stakes in life sciences AI deployment exceed those in most other industries. AI systems influence decisions about patient treatment, drug approval, safety signal detection, and manufacturing quality—decisions with direct implications for human health and survival. Errors, biases, or failures in these systems can result in patient harm, regulatory sanctions, product recalls, and erosion of public trust. Simultaneously, overly conservative approaches that delay or avoid beneficial AI adoption impose their own costs: slower drug development, missed safety signals, inefficient operations, and ultimately, delayed patient access to needed therapies.

Responsible AI in life sciences, therefore, requires balancing multiple, sometimes competing imperatives. Organizations must innovate to remain competitive and serve patients effectively while maintaining regulatory compliance across complex, evolving frameworks. They must deploy sophisticated AI technologies while ensuring transparency and explainability to regulators and clinicians. They must leverage AI’s pattern recognition capabilities while preventing biases that disadvantage vulnerable populations. They must move quickly to capture AI’s benefits while implementing rigorous validation and governance that ensure reliability and safety. This balance is neither simple nor static—it requires continuous recalibration as technologies, regulations, and organizational capabilities evolve.

Responsible AI encompasses the principles, practices, and governance frameworks that ensure artificial intelligence systems are developed, validated, deployed, and monitored in ways that are ethical, transparent, accountable, fair, and aligned with societal values and regulatory requirements. In the life sciences context, responsible AI extends beyond generic AI ethics to incorporate domain-specific considerations, including patient safety primacy, regulatory compliance, clinical validity, and alignment with established quality management systems.

The concept of responsible AI addresses fundamental concerns that arise when autonomous or semi-autonomous systems make or influence consequential decisions. AI systems can perpetuate or amplify biases present in training data, disadvantaging certain populations in ways that violate ethical principles and potentially regulatory requirements. The complexity of many AI models creates opacity: decision rationale cannot be easily understood or verified, which complicates accountability and regulatory scrutiny. AI systems may perform differently across populations, contexts, or time periods than they did during development and validation, introducing risks that emerge only after deployment. The concentration of AI development expertise in technology companies rather than regulated industries creates knowledge gaps about regulatory requirements, quality standards, and domain-specific risks.

Responsible AI frameworks address these concerns through structured approaches that integrate throughout the AI lifecycle. During development, responsible AI principles guide data collection and curation to ensure representativeness and mitigate bias, algorithm design and training to prioritize transparency and fairness, validation methodologies that assess performance across diverse populations and scenarios, and documentation practices that create transparency and enable external scrutiny. During deployment, responsible AI frameworks establish governance structures defining roles, responsibilities, and decision authorities for AI systems, monitoring systems that detect performance degradation, bias emergence, or safety concerns, human oversight mechanisms that preserve appropriate human judgment in consequential decisions, and continuous improvement processes that refine AI systems based on operational experience.

In life sciences, responsible AI frameworks must align with existing quality management systems, regulatory frameworks, and industry standards. This alignment distinguishes responsible AI in regulated industries from responsible AI in consumer technology or other less regulated sectors. Life sciences organizations cannot simply adopt generic responsible AI principles—they must integrate these principles with GxP requirements, validation expectations, audit readiness standards, and the primacy of patient safety that characterizes pharmaceutical and medical device regulation.

The business case for responsible AI extends beyond compliance to competitive advantage. Organizations with mature responsible AI practices mitigate regulatory risk, reducing the likelihood of warning letters, consent decrees, or product delays due to AI-related deficiencies. They build stakeholder trust among regulators, healthcare providers, patients, and investors by demonstrating commitment to ethical and safe AI deployment. They achieve operational efficiency through systematic AI governance that prevents costly remediation of poorly designed or inadequately validated systems. They enable innovation by creating clear pathways for AI deployment that balance experimentation with appropriate risk management. Ultimately, responsible AI transforms from a constraint on innovation into an enabler of sustainable, scalable, compliant AI adoption.

Pro Tip: Frame responsible AI not as a compliance burden but as a quality imperative—just as Good Manufacturing Practices ensure product quality, responsible AI practices ensure AI system quality, reliability, and fitness for purpose in patient safety-critical applications.

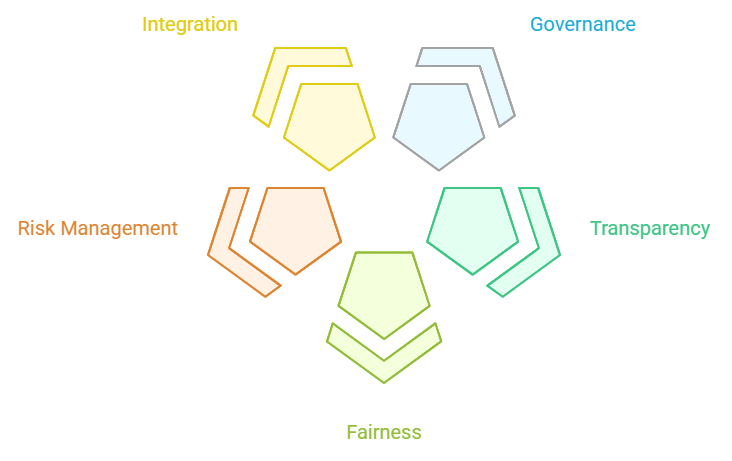

Three foundational principles—governance, transparency, and fairness—form the core of responsible AI frameworks in life sciences. These principles operationalize abstract ethical commitments into concrete practices that organizations can implement, assess, and demonstrate to internal stakeholders and external regulators.

AI governance establishes the organizational structures, policies, processes, and accountabilities that ensure AI systems are developed and deployed consistently with organizational values, regulatory requirements, and responsible AI principles. Effective governance addresses the reality that AI systems span multiple organizational functions—data science, IT, quality assurance, regulatory affairs, medical affairs, pharmacovigilance—requiring coordination mechanisms that transcend traditional departmental boundaries.

Governance structures typically include AI oversight committees or boards with cross-functional membership and defined decision authorities for approving AI projects, reviewing validation results, authorizing deployment, and overseeing ongoing monitoring. These oversight bodies establish risk-based approval pathways where higher-risk AI applications receive more intensive scrutiny than lower-risk applications. Clear escalation procedures define when issues identified during development, validation, or operation require elevated review and decision-making.

Policies and standard operating procedures operationalize governance by defining requirements for AI system development, validation, documentation, deployment, monitoring, and maintenance. These policies should integrate with existing quality management systems rather than creating parallel governance structures. For example, AI system changes should flow through established change control processes, AI validation should align with existing validation frameworks, and AI quality issues should enter standard deviation and CAPA systems.

Role definitions clarify responsibilities across the AI lifecycle. Who is accountable for AI system performance? Who has the authority to override AI recommendations? Who is responsible for monitoring AI systems post-deployment? Who makes decisions about when to retrain, recalibrate, or retire AI systems? These accountability assignments prevent diffusion of responsibility where everyone assumes someone else is monitoring AI performance or responding to issues.

Risk management frameworks guide governance decisions by classifying AI systems according to potential patient safety impact, regulatory risk, and operational criticality. Higher-risk systems warrant more stringent governance, more comprehensive validation, more intensive monitoring, and stronger human oversight. This risk-based approach enables organizations to allocate governance resources efficiently while ensuring that the most critical applications receive appropriate attention.
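As a rough illustration of risk-based classification, the sketch below scores a system on the three dimensions named above and maps the worst score to a governance tier. The 1-3 scale, the `RiskProfile` and `CONTROLS` names, and the controls listed per tier are all hypothetical; a real scheme would come from the organization's own quality system.

```python
from dataclasses import dataclass


# Illustrative 1-3 scale (1 = low, 3 = high); the scale itself is an assumption.
@dataclass(frozen=True)
class RiskProfile:
    patient_safety_impact: int
    regulatory_risk: int
    operational_criticality: int


TIERS = {1: "low", 2: "medium", 3: "high"}

# Hypothetical controls per tier, shown only to make the mapping concrete.
CONTROLS = {
    "low": {"validation": "standard", "monitoring": "quarterly", "human_review": "sampled"},
    "medium": {"validation": "extended", "monitoring": "monthly", "human_review": "exceptions"},
    "high": {"validation": "comprehensive", "monitoring": "continuous", "human_review": "every decision"},
}


def classify(profile: RiskProfile) -> str:
    """Assign the tier of the worst-scoring dimension (a conservative rule)."""
    scores = (
        profile.patient_safety_impact,
        profile.regulatory_risk,
        profile.operational_criticality,
    )
    if any(score not in TIERS for score in scores):
        raise ValueError("each score must be 1, 2, or 3")
    return TIERS[max(scores)]


# Example: a literature triage tool with moderate regulatory exposure.
literature_triage = RiskProfile(patient_safety_impact=1, regulatory_risk=2, operational_criticality=1)
tier = classify(literature_triage)
print(tier, CONTROLS[tier])
```

Taking the maximum across dimensions is a deliberately cautious choice: a single high score is enough to pull a system into the most stringent tier. Weighted or matrix-based schemes are equally plausible.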

Transparency in AI systems encompasses multiple dimensions including algorithmic transparency, where the logic and decision processes of AI models can be understood; data transparency, where training data characteristics, quality, and limitations are documented; performance transparency, where system capabilities, limitations, and validation results are clearly communicated; and decision transparency, where the rationale for specific AI outputs can be explained to users and regulators.

Documentation practices create the foundation for transparency. Comprehensive documentation should describe the intended use and scope of the AI system, including populations, contexts, and decisions it is designed to support; data sources, including provenance, representativeness, quality assessment, and preprocessing applied; algorithm architecture, training methodology, and hyperparameters; validation approach, test datasets, performance metrics, and identified limitations; deployment environment, integration points, and operational constraints; and monitoring plan, including performance metrics tracked, alert thresholds, and response procedures.
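One way to make these documentation expectations checkable is to hold them in a structured record and flag empty sections before a system moves to review. The sketch below is a minimal example under that assumption; the `ModelDocumentation` class and its field names are hypothetical labels for the six areas listed above, not a standard template.

```python
from dataclasses import dataclass, fields


@dataclass
class ModelDocumentation:
    # One free-text field per documentation area described in the text.
    intended_use: str = ""
    data_sources: str = ""
    algorithm_and_training: str = ""
    validation_and_limitations: str = ""
    deployment_environment: str = ""
    monitoring_plan: str = ""


def missing_sections(doc: ModelDocumentation) -> list[str]:
    """Return the names of sections that are empty or whitespace only."""
    return [f.name for f in fields(doc) if not getattr(doc, f.name).strip()]


draft = ModelDocumentation(
    intended_use="Triage of adverse event reports for medical review",
    data_sources="Internal safety database extract, de-identified",
)
print(missing_sections(draft))
```

A check like this only confirms that each section exists. Whether the content is adequate still depends on quality and regulatory review.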

Explainability techniques enhance transparency by providing insight into how AI systems arrive at specific outputs. For simpler models like decision trees or linear models, the decision logic may be directly interpretable. For complex models like deep neural networks, explainability techniques, including feature importance analysis, attention visualization, counterfactual explanations, and local interpretable model-agnostic explanations (LIME), provide post-hoc interpretability. The appropriate explainability approach depends on the use case, user needs, and regulatory expectations.
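To make feature importance analysis concrete, the following sketch uses permutation importance: shuffle one input column at a time and measure how much accuracy drops. This is one technique among those listed, chosen here because it needs no access to model internals. The toy `model` and the data are invented for illustration.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            permuted = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy model (an assumption for illustration): it looks only at feature 0.
def model(row):
    return row[0]


rows = [[0, 5], [1, 3], [0, 8], [1, 1], [0, 2], [1, 9]]
labels = [row[0] for row in rows]
importances = permutation_importance(model, rows, labels)
print(importances)
```

Because the toy model ignores feature 1, shuffling that column cannot change any prediction, so its importance is zero. Any non-zero importance belongs to feature 0.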

Communication strategies tailor transparency to different stakeholders. Regulators require comprehensive technical documentation supporting validation claims and ongoing performance monitoring. Healthcare providers need practical information about what the AI system does, when to trust its outputs, and when human judgment should override AI recommendations. Patients deserve clear communication about when AI influences their care and what safeguards protect their interests. Internal stakeholders, including executives, quality assurance, and medical affairs, require transparency appropriate to their governance and oversight roles.

Transparency limitations must be acknowledged honestly. Some aspects of complex AI models remain difficult to explain fully, even with advanced techniques. Proprietary algorithms from vendors may limit the transparency available to deploying organizations. Organizations should document these transparency limitations and implement compensating controls such as more intensive validation, more frequent monitoring, or stronger human oversight when full transparency cannot be achieved.

Fairness in AI systems requires that performance, accuracy, and benefits are equitably distributed across populations and that systems do not systematically disadvantage individuals based on protected characteristics such as race, ethnicity, gender, age, or socioeconomic status. In life sciences, fairness considerations extend beyond legal compliance to ethical imperatives rooted in medicine’s fundamental commitment to equitable care and public health principles of health equity.

Bias assessment throughout the AI lifecycle identifies potential fairness issues. Training data bias assessment examines whether datasets adequately represent diverse populations or overrepresent certain groups while underrepresenting others. Algorithmic bias assessment evaluates whether AI models perform differently across demographic subgroups, even when trained on representative data. Outcome bias assessment examines whether AI deployment results in differential access, treatment, or outcomes across populations in real-world operation.

Fairness metrics quantify equity across populations. These may include demographic parity, examining whether positive predictions occur at similar rates across groups; equalized odds, assessing whether true positive and false positive rates are similar across groups; and calibration, evaluating whether predicted probabilities accurately reflect actual outcome rates within each group. Different fairness metrics may conflict—achieving demographic parity may compromise equalized odds—requiring organizations to make explicit value judgments about which fairness definitions best serve their context and patient populations.
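The first two metrics can be computed directly from binary labels, binary predictions, and a group attribute. The sketch below is a minimal illustration with made-up data; the function names are hypothetical. Calibration is left out because it requires predicted probabilities rather than hard predictions.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    counts = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["pred_pos"] += pred
        if truth == 1:
            c["pos"] += 1
            c["tp"] += pred
        else:
            c["neg"] += 1
            c["fp"] += pred
    return {
        group: {
            "selection_rate": c["pred_pos"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
        for group, c in counts.items()
    }


def max_gap(rates, key):
    """Largest difference in one rate between any two groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


# Invented example data: 1 = positive outcome or positive prediction.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
demographic_parity_gap = max_gap(rates, "selection_rate")
equalized_odds_gap = max(max_gap(rates, "tpr"), max_gap(rates, "fpr"))
print(rates, demographic_parity_gap, equalized_odds_gap)
```

In this example group A is selected at a rate of 0.75 and group B at 0.25, so the demographic parity gap is 0.5. The true positive and false positive rates also differ by 0.5. What gap is acceptable is a value judgment for the organization, not something the code can decide.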

Mitigation strategies address identified biases through multiple approaches. Data-level interventions include collecting additional data from underrepresented populations, re-weighting training samples to balance representation, and synthetic data generation using techniques that preserve privacy while enhancing representativeness. Algorithm-level interventions incorporate fairness constraints into model training, adjust decision thresholds differently across groups, or use fairness-aware algorithms designed to optimize both performance and equity. Post-processing interventions calibrate outputs differently for different groups or apply corrections that equalize performance metrics across populations.
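As an illustration of one data-level intervention, re-weighting can be sketched as assigning each sample a weight inversely proportional to its group's frequency, so that every group carries the same total weight in training. The function name and the example groups are assumptions.

```python
from collections import Counter


def balancing_weights(groups):
    """Per-sample weights that give every group equal total weight.

    weight = n_samples / (n_groups * group_count); the weights sum to n_samples.
    """
    counts = Counter(groups)
    n_samples, n_groups = len(groups), len(counts)
    return [n_samples / (n_groups * counts[group]) for group in groups]


# Invented example: group B is underrepresented three to one.
groups = ["A", "A", "A", "B"]
weights = balancing_weights(groups)
print(weights)
```

Here each group A sample receives a weight of about 0.67 and the single group B sample receives 2.0, so both groups contribute a total weight of 2.0. Re-weighting cannot add information that the underrepresented group's data does not contain, which is why collecting additional data remains the preferred remedy where feasible.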

Fairness governance establishes organizational processes for identifying, assessing, and addressing AI fairness concerns. This includes equity representation in AI governance bodies, fairness review as a standard component of AI validation, ongoing fairness monitoring in operational deployment, and stakeholder engagement with affected communities to understand fairness concerns and priorities. As discussed in AI in Pharmacovigilance: Knowing When to Say No, organizations must be prepared to decline deploying AI systems when fairness issues cannot be adequately addressed, even if technical performance appears adequate in aggregate.

The regulatory landscape for AI in life sciences encompasses multiple frameworks, including Good Practices (GxP) across manufacturing, clinical, laboratory, and distribution operations; electronic records and signatures requirements under 21 CFR Part 11 and equivalent regulations; ICH guidelines addressing quality, safety, and efficacy across the pharmaceutical lifecycle; and emerging AI-specific guidance from regulatory authorities. Successful AI deployment requires understanding and aligning with this complex regulatory mosaic.

Good Automated Manufacturing Practice (GAMP) principles provide foundational guidance for computerized system validation that extends to AI systems. GAMP categorizes software by complexity and risk, with custom-built AI systems typically treated as Category 5 (custom applications), the category requiring the most comprehensive validation. However, AI’s learning capability and potential for performance drift create challenges for traditional validation approaches predicated on deterministic system behavior. Organizations must adapt GAMP principles to AI characteristics, validating not just initial performance but also robustness across data distributions, stability over time, and appropriateness of monitoring and retraining procedures.
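One commonly used way to quantify whether operational inputs have shifted away from the data seen at validation is the population stability index (PSI). The sketch below is a minimal, assumption-laden version: the bin edges, the small `eps` floor for empty bins, and the example values are all illustrative, and PSI is only one of several possible drift measures.

```python
import math


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a reference sample and a new sample over fixed bin edges.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are
    the proportions of the reference and new samples falling in bin i.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for value in values:
            counts[sum(value >= edge for edge in edges)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(count / len(values), eps) for count in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Invented scores: validation-time reference versus a shifted operational sample.
reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
shifted = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.95, 0.99]
edges = [0.25, 0.5, 0.75]

print(population_stability_index(reference, reference, edges))
print(population_stability_index(reference, shifted, edges))
```

Identical samples give a PSI of zero, and larger values indicate a larger shift. The value that should trigger investigation or revalidation is an organizational decision to be justified in the monitoring plan.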

21 CFR Part 11 establishes requirements for electronic records and electronic signatures in FDA-regulated processes. When AI systems generate, modify, or analyze electronic records used in regulatory submissions or GxP processes, Part 11 requirements apply. This includes validation of AI systems to ensure accuracy, reliability, and consistent intended performance; audit trails capturing AI system actions, including predictions, recommendations, and configuration changes; controls restricting system access to authorized individuals; and electronic signature provisions for actions requiring human review or approval. Organizations must carefully map which AI applications create or influence electronic records within the Part 11 scope and ensure appropriate controls.
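To illustrate what an audit trail for AI actions might capture, the sketch below chains each entry to the previous one with a SHA-256 hash so that later modification of any entry is detectable. It is a conceptual example only: the field names are hypothetical, timestamps are passed in as strings for simplicity, and nothing here should be read as sufficient for Part 11 compliance on its own.

```python
import hashlib
import json

GENESIS = "0" * 64  # Placeholder hash for the first entry in the chain.


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON rendering of the entry body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(trail, actor, action, details, timestamp):
    """Append an entry whose hash covers its content and the previous hash."""
    body = {
        "actor": actor,
        "action": action,
        "details": details,
        "timestamp": timestamp,
        "prev_hash": trail[-1]["hash"] if trail else GENESIS,
    }
    trail.append({**body, "hash": _digest(body)})
    return trail[-1]


def verify(trail) -> bool:
    """Recompute every hash and check each link to the previous entry."""
    prev_hash = GENESIS
    for entry in trail:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


trail = []
append_entry(trail, "model:v1.2", "prediction", {"case_id": "C-001", "score": 0.91}, "2024-01-01T10:00:00Z")
append_entry(trail, "user:reviewer", "override", {"case_id": "C-001", "reason": "clinical judgment"}, "2024-01-01T10:05:00Z")
print(verify(trail))
```

If any recorded field is altered after the fact, `verify` returns `False` because the stored hash no longer matches the recomputed one. A production system would also need access controls, secure time sources, and protected storage.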

ICH guidelines increasingly address digital technologies and AI, though explicit AI-specific guidance remains limited. ICH E6(R3) for Good Clinical Practice acknowledges computerized systems and establishes principles for validation and data integrity. ICH E19, on a selective approach to safety data collection in specific late-stage pre-approval and post-approval trials, is relevant where technology, including AI, is used to streamline safety data capture and processing. ICH Q9 on Quality Risk Management provides frameworks for assessing and mitigating AI-related risks. Organizations should apply existing ICH principles to AI while monitoring for emerging specific guidance.

Regulatory agencies have begun issuing AI-specific guidance, though the landscape is still evolving. The FDA’s guidance on artificial intelligence and machine learning in medical devices establishes principles, including defining intended use and scope, ensuring representative training data, implementing performance monitoring plans, and providing transparency through documentation. While focused on medical devices, these principles inform regulatory thinking across FDA-regulated products. The EMA has published reflection papers on AI use in regulatory submissions and established the AI Task Force to coordinate AI policy and provide guidance. The discussion in The Latest EMA, FDA, and ICH Guidelines Regarding Use of AI in Pharmacovigilance examines pharmacovigilance-specific regulatory expectations in detail.

Data integrity principles, specifically the ALCOA+ principles of Attributable, Legible, Contemporaneous, Original, and Accurate, plus Complete, Consistent, Enduring, and Available, apply to AI systems just as they do to other GxP systems. AI training data, validation data, operational input data, and AI outputs must meet these integrity standards. This requires data provenance tracking, version control, protection against unauthorized modification, and appropriate retention. AI introduces specific integrity challenges, including model versioning, training data lineage, and ensuring AI outputs are appropriately attributable to specific model versions and input data.
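Attributing an output to a specific model version and input can be sketched as storing, alongside each output, the model version, an identifier for the training data snapshot, and a fingerprint of the exact input. The record layout and identifiers below are hypothetical; the fingerprint is a SHA-256 hash of a canonical JSON rendering of the input.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class PredictionRecord:
    model_version: str      # e.g. a release tag from the model registry
    training_data_id: str   # identifier of the training data snapshot
    input_sha256: str       # fingerprint of the exact input payload
    output: str


def fingerprint(payload: dict) -> str:
    """Order-independent SHA-256 fingerprint of a JSON-serializable payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_prediction(model_version, training_data_id, model_input, output):
    """Bind an output to the model version and input that produced it."""
    return PredictionRecord(model_version, training_data_id, fingerprint(model_input), output)


# Invented identifiers and payload, for illustration only.
record = record_prediction(
    model_version="signal-detector-1.4.0",
    training_data_id="snapshot-2024-03",
    model_input={"case_id": "C-001", "narrative": "Patient reported dizziness."},
    output="possible signal",
)
print(record)
```

Sorting keys before hashing means the same input produces the same fingerprint regardless of field order, which makes later reconciliation between stored inputs and recorded outputs more reliable.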

Validation documentation for AI systems must address specific considerations beyond traditional software validation. This includes detailed data characterization describing training, validation, and test datasets with representativeness assessment; performance metrics across relevant dimensions including overall accuracy, performance by subgroup, edge case behavior, and bias metrics; intended use and limitations clearly defining appropriate applications, populations, and contexts, along with known limitations and inappropriate uses; monitoring plan specifying metrics tracked, alert thresholds, monitoring frequency, and response procedures; and change control procedures defining what modifications require revalidation versus lighter verification.
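The monitoring plan element of this documentation lends itself to a simple, reviewable representation: each tracked metric carries a warning threshold, an action threshold, and a predefined response. The sketch below assumes higher-is-better metrics; the metric names, thresholds, and responses are invented examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricRule:
    metric: str
    warn_below: float   # value under which a warning is raised
    act_below: float    # value under which the predefined response applies
    response: str


def evaluate(rules, observed):
    """Return (metric, level, response) for every rule that is breached."""
    alerts = []
    for rule in rules:
        value = observed.get(rule.metric)
        if value is None:
            alerts.append((rule.metric, "missing", "investigate missing metric"))
        elif value < rule.act_below:
            alerts.append((rule.metric, "action", rule.response))
        elif value < rule.warn_below:
            alerts.append((rule.metric, "warning", "review at next monitoring cycle"))
    return alerts


# Hypothetical plan: overall sensitivity plus the worst-performing subgroup.
rules = [
    MetricRule("sensitivity", 0.90, 0.85, "open deviation and assess revalidation"),
    MetricRule("min_subgroup_sensitivity", 0.85, 0.80, "escalate to AI oversight committee"),
]
observed = {"sensitivity": 0.88, "min_subgroup_sensitivity": 0.78}
alerts = evaluate(rules, observed)
print(alerts)
```

With these example values, overall sensitivity triggers a warning and the subgroup metric triggers its predefined response. Writing thresholds and responses down in this form before deployment makes it easier to show that monitoring decisions were not made after the fact.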

Regulatory inspection readiness for AI systems requires preparing to demonstrate validation rigor, ongoing monitoring, data integrity, and appropriate quality oversight. Inspectors increasingly examine computerized systems, and AI will receive scrutiny. Organizations should maintain readily accessible validation documentation, performance monitoring records, deviation and CAPA documentation related to AI systems, and training records demonstrating personnel competency with AI systems they use or oversee. Mock inspections focusing on AI systems help identify documentation gaps and prepare personnel for inspector questions.

Insight: Regulatory frameworks for AI in life sciences will continue evolving rapidly. Organizations should establish regulatory intelligence functions, monitor guidance updates, and participate in industry working groups to influence emerging standards while ensuring ongoing compliance.

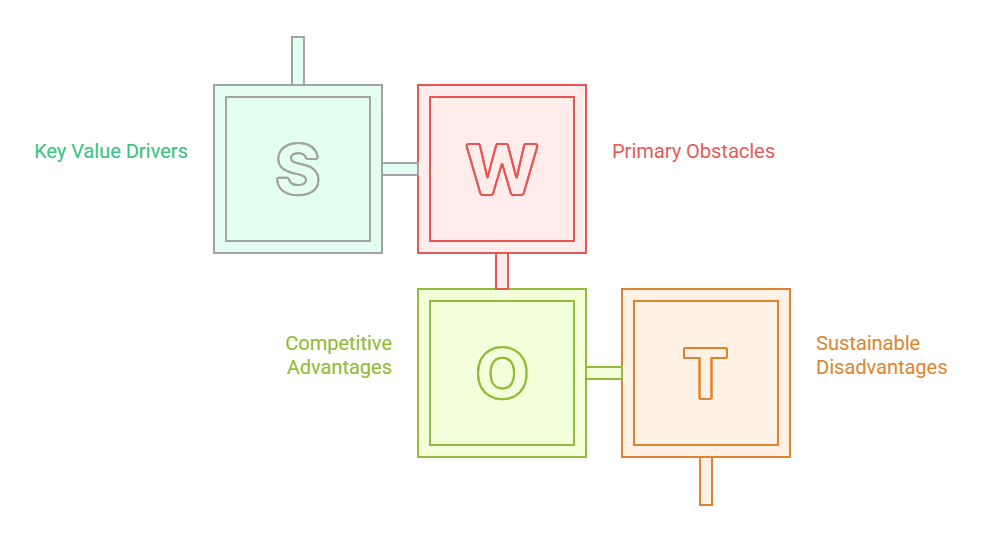

Life sciences organizations implementing responsible AI face distinctive challenges stemming from industry characteristics, regulatory constraints, and AI technology limitations. However, these same factors create opportunities for organizations that successfully navigate responsible AI implementation to achieve competitive advantages and operational benefits.

The talent gap represents a fundamental challenge, as responsible AI requires personnel who combine expertise in data science and machine learning, deep life sciences domain knowledge, familiarity with regulatory frameworks and quality systems, and experience implementing governance and compliance programs. Few individuals possess all these competencies, requiring organizations to build cross-functional teams and develop training programs that bridge knowledge gaps. The competition for AI talent intensifies the challenge, as technology companies often offer more attractive compensation and work environments than pharmaceutical companies constrained by traditional corporate cultures and bureaucracies.

Legacy system integration creates technical challenges when implementing AI solutions. Life sciences organizations operate complex landscapes of established systems for clinical data management, pharmacovigilance, manufacturing, quality, and regulatory submissions. AI systems must integrate with these legacy environments, often requiring custom interfaces, data transformation, and workflow adaptation. Legacy systems may lack modern APIs, operate on outdated technology stacks, or contain data in formats challenging for AI consumption. The long lifecycles of validated GxP systems mean wholesale replacement is often impractical, necessitating integration strategies that work within existing constraints.

Risk aversion embedded in pharmaceutical culture, while essential for patient safety, can become excessive when evaluating AI adoption. Organizations may focus disproportionately on AI risks while underweighting risks of not adopting AI, including competitive disadvantage, operational inefficiency, and missed opportunities to enhance patient safety through better safety surveillance. Balancing appropriate caution with innovation requires leadership that understands both AI capabilities and limitations, governance frameworks that enable risk-based decision-making, and success stories demonstrating AI value within the organization to build confidence.

Validation complexity and cost increase significantly for AI systems compared to traditional software due to performance dependence on training data quality and representativeness, the need to validate across diverse populations and edge cases, requirements for explainability and transparency, ongoing monitoring and potential revalidation as systems drift, and regulatory uncertainty about validation expectations. These factors increase development timelines and costs, potentially making AI adoption less attractive from short-term financial perspectives. However, they also create opportunities for organizations to develop efficient, scalable validation approaches that can be leveraged across multiple AI applications.

Data availability and quality challenges constrain AI development, as effective AI systems require large, diverse, high-quality datasets that may not exist within a single organization. Data may be siloed across business units, in formats incompatible with AI tools, lacking standardization, or protected by privacy constraints limiting use. Organizations addressing these challenges through data governance programs, data quality improvement initiatives, strategic data partnerships, and investment in data infrastructure create competitive advantages in AI development capabilities.

Opportunities emerging from responsible AI implementation include operational efficiency gains through automation of repetitive tasks, freeing human resources for higher-value activities requiring judgment, creativity, and interpersonal skills. AI systems can process vast data volumes impossible for human review, enabling more comprehensive safety surveillance, more efficient clinical trial monitoring, and more thorough quality assessments. Enhanced decision quality results from AI providing consistent, evidence-based recommendations that augment human judgment while reducing variability from fatigue, cognitive biases, or knowledge gaps.

Competitive differentiation accrues to organizations demonstrating responsible AI leadership through faster drug development timelines, more efficient operations, better safety surveillance, and stronger regulatory relationships. First movers establishing responsible AI capabilities may achieve advantages difficult for competitors to replicate, given the time required to develop expertise, infrastructure, and organizational capabilities. Early engagement with regulators on responsible AI approaches may influence emerging guidance, creating frameworks aligned with organizational capabilities.

Patient outcomes improvement represents the ultimate opportunity and justification for responsible AI adoption. AI systems can detect safety signals earlier, potentially preventing patient harm through faster intervention. They can identify patient populations most likely to benefit from specific therapies, enabling more precise prescribing. They can optimize clinical trial designs, accelerating beneficial therapy development. When implemented responsibly with appropriate governance, validation, and monitoring, AI serves the pharmaceutical industry’s fundamental mission: improving patient health outcomes through safe, effective therapies.

Achieving the balance between AI innovation and regulatory compliance requires deliberate strategies that neither sacrifice innovation to excessive caution nor compromise compliance and patient safety in pursuit of competitive advantage. Leading life sciences organizations are implementing frameworks that enable responsible AI adoption at scale while maintaining the quality, safety, and regulatory standards essential to the industry.

Start with high-value, lower-risk applications that demonstrate AI value while building organizational capability and confidence. Applications such as automating literature review for safety surveillance, enhancing document processing for regulatory submissions, optimizing clinical trial site selection, or improving manufacturing quality control predictions offer substantial value with more manageable risk profiles than applications directly affecting patient treatment decisions. Early successes build momentum, develop expertise, and establish governance frameworks that can extend to higher-risk applications.

Establish clear AI governance frameworks before widespread deployment rather than attempting to impose governance retrospectively on proliferating AI initiatives. Governance frameworks should define AI system risk classification criteria, validation and documentation requirements by risk level, approval authorities for different risk categories, monitoring and maintenance requirements, and change control procedures. Early governance investment prevents technical debt accumulation from poorly validated or inadequately monitored AI systems requiring expensive remediation.

Invest in foundational data infrastructure and governance that enable multiple AI applications rather than custom data solutions for each AI project. Establishing common data models, implementing data quality assessment and improvement programs, creating data cataloging and metadata management, developing privacy-preserving data sharing mechanisms, and building secure data environments for AI development create reusable infrastructure that reduces the incremental cost and timeline for subsequent AI projects while improving data quality across applications.

Develop internal AI expertise through hiring, training, and external partnerships rather than relying exclusively on vendors or outsourcing. While vendors provide valuable technology and services, internal expertise is essential for defining requirements, evaluating vendor claims, overseeing validation, interpreting results, and maintaining systems. Training programs that cross-skill data scientists in regulatory requirements and life sciences domain experts in AI fundamentals create the interdisciplinary teams essential for responsible AI success.

Engage proactively with regulators through pre-submission meetings, participation in regulatory working groups, and transparent communication about AI approaches. Regulatory agencies increasingly encourage early engagement on innovative technologies, enabling organizations to understand regulatory expectations, receive feedback on validation approaches, and potentially influence emerging guidance. Organizations demonstrating commitment to responsible AI through transparency and collaboration build regulatory relationships that facilitate smoother approval processes and clearer compliance pathways.

Implement continuous learning mechanisms that capture lessons from AI projects, share knowledge across the organization, and refine governance approaches based on experience. This includes post-implementation reviews assessing what worked well and what could improve, knowledge management systems capturing AI validation approaches and lessons learned, communities of practice enabling cross-functional collaboration and knowledge sharing, and governance review cycles that update policies and standards based on emerging regulatory guidance, technology capabilities, and organizational experience.

Maintain ethical grounding throughout AI development and deployment by regularly returning to fundamental questions: Does this AI application serve patient interests? Does it respect human dignity and autonomy? Does it distribute benefits and burdens fairly? Does it maintain transparency and accountability? These ethical considerations complement regulatory compliance and provide guideposts when navigating novel situations where regulatory guidance may be limited or ambiguous.

Prepare for the evolving regulatory landscape by building adaptable governance structures and validation approaches. Regulatory frameworks for AI will continue evolving as agencies, industry, and stakeholders gain experience. Organizations with rigid, narrowly tailored compliance programs may face expensive retrofitting as requirements change. Flexible, principles-based approaches aligned with fundamental quality and safety objectives adapt more readily to regulatory evolution while maintaining compliance with current requirements.

Responsible AI in life sciences represents neither a constraint on innovation nor a checkbox compliance exercise, but rather a fundamental approach to ensuring that AI systems deliver their transformative potential while maintaining the quality, safety, and ethical standards essential to the pharmaceutical and healthcare industries. The balance between innovation and compliance is not a compromise where each diminishes the other, but a synergy where responsible practices enable sustainable innovation and innovation drives better approaches to responsibility.

The organizations that will lead in life sciences AI are those recognizing that responsible AI requires deliberate investment in governance structures, validation rigor, transparency mechanisms, fairness assessment, and regulatory alignment. These investments create capabilities that compound over time—each successive AI application benefits from established frameworks, learned lessons, and developed expertise. The alternative—reactive, ad hoc approaches to AI governance and validation—creates technical debt, regulatory risk, and ultimately limits the scale and scope of beneficial AI adoption.

The regulatory landscape will continue evolving as agencies develop experience with AI systems, observe outcomes from early adopters, and balance the imperatives of innovation enablement with patient protection. Organizations that engage constructively in this evolution through transparent communication, rigorous validation, and willingness to share learnings contribute to better regulatory frameworks while positioning themselves advantageously within emerging requirements. The path forward requires viewing regulatory engagement not as an adversarial compliance burden but as a collaborative development of standards that serve all stakeholders.