The pharmaceutical industry faces a persistent challenge: how to leverage collective intelligence for drug safety monitoring while respecting stringent data privacy regulations and competitive concerns. Traditional pharmacovigilance relies on centralized databases and isolated datasets, limiting the statistical power needed to detect rare adverse events or population-specific safety signals.

Federated learning offers a paradigm shift. This collaborative AI approach allows multiple organizations to train shared machine learning models without ever exchanging raw patient data. For pharmacovigilance teams managing adverse event detection, signal prioritization, and regulatory reporting, federated learning represents both a technical innovation and a strategic enabler of cross-organizational collaboration.

As explored in “Collaborative AI Platforms: Transforming Global Pharmacovigilance Through Shared Intelligence,” the future of drug safety monitoring depends on breaking down data silos while maintaining privacy safeguards. Federated learning provides the technical foundation for this transformation.

Federated learning is a machine learning technique that trains algorithms across decentralized datasets without requiring data centralization. Instead of moving data to a central server, the model travels to where the data resides. Each participating organization trains the model locally on its proprietary data, then shares only the model updates—typically gradient vectors or weight adjustments—with a central coordinator.

The coordinator aggregates these updates into an improved global model, which is then redistributed for another training round. This iterative process continues until the model converges or reaches a target performance level. Critically, raw patient records, case narratives, and adverse event reports never leave their originating organization.
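The round structure can be made concrete with a small simulation. This is a minimal Python sketch under stated assumptions, not a production framework: it assumes a toy logistic-regression model for a binary outcome (for example, whether a report describes a serious adverse event) and three hypothetical organizations holding synthetic data. Each site trains locally and returns only its parameters and sample count, and the coordinator averages them weighted by sample count, as in Federated Averaging. The function names (`local_train`, `federated_round`, `make_site`) are illustrative.

```python
from __future__ import annotations

import math
import random


def predict(w: list[float], x: list[float]) -> float:
    """Logistic model: w[0] is the bias, w[1:] are feature weights."""
    z = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def local_train(global_w: list[float], data, epochs: int = 5, lr: float = 0.1) -> list[float]:
    """Runs inside one organization: SGD on its private records, starting from the global model."""
    w = list(global_w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y  # derivative of log-loss with respect to z
            w[0] -= lr * err
            for j, xj in enumerate(x):
                w[j + 1] -= lr * err * xj
    return w  # only parameters are returned; the records stay on site


def federated_round(global_w: list[float], sites) -> list[float]:
    """Coordinator: average local models weighted by local sample count (FedAvg)."""
    results = [(local_train(global_w, data), len(data)) for data in sites]
    total = sum(n for _, n in results)
    return [sum(w[j] * n for w, n in results) / total for j in range(len(global_w))]


def make_site(n: int, seed: int):
    """Hypothetical private dataset: label is 1 when x1 + x2 > 1."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = [rng.random(), rng.random()]
        rows.append((x, 1.0 if x[0] + x[1] > 1.0 else 0.0))
    return rows


# Single-process simulation: in a real deployment each site's data and its
# local_train call would live inside a separate organization.
sites = [make_site(200, 1), make_site(50, 2), make_site(120, 3)]
w = [0.0, 0.0, 0.0]
for _ in range(20):
    w = federated_round(w, sites)

# Pooled accuracy is computed here only because this is a simulation.
pooled = [row for data in sites for row in data]
accuracy = sum((predict(w, x) > 0.5) == (y == 1.0) for x, y in pooled) / len(pooled)
print(f"global weights {[round(v, 2) for v in w]}, accuracy {accuracy:.2f}")
```

Weighting by sample count means a site with 200 records pulls the global model four times harder than a site with 50, which mirrors how a centralized model would weight the same data.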

Pro Tip: Think of federated learning as collaborative intelligence where the insights travel, not the data. This distinction is fundamental for regulatory discussions and stakeholder buy-in.

Pharmacovigilance depends on detecting safety signals that may only emerge when analyzing large, diverse patient populations. However, individual pharmaceutical companies, regulatory agencies, and healthcare systems operate with fragmented data silos. Privacy regulations like GDPR, HIPAA, and varying national data protection laws create legitimate barriers to data sharing.

Federated learning addresses this tension by enabling collaborative analysis without violating privacy mandates. When a rare adverse event occurs in only one in 10,000 patients, no single organization may have enough cases to establish statistical significance. Federated learning makes these signals detectable by drawing on the participants' combined data while each participant retains full control over its own records.
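The arithmetic behind this claim is easy to check. The sketch below assumes that case counts follow a Poisson distribution and uses five hypothetical organizations with made-up exposure counts and a hypothetical review threshold of three cases. It compares the chance that any single organization accumulates enough cases with the chance that the network as a whole does.

```python
import math


def prob_at_least(k: int, mean: float) -> float:
    """P(X >= k) for a Poisson-distributed case count X with the given mean."""
    return 1.0 - sum(math.exp(-mean) * mean**i / math.factorial(i) for i in range(k))


INCIDENCE = 1 / 10_000  # one case per 10,000 exposed patients, as in the text
MIN_CASES = 3           # hypothetical case count needed before a signal is assessed
exposed = {"org_a": 4_000, "org_b": 6_000, "org_c": 5_000,
           "org_d": 8_000, "org_e": 7_000}  # hypothetical exposed patients per organization

for org, n in exposed.items():
    mean = n * INCIDENCE
    print(f"{org}: expected {mean:.1f} cases, P(>= {MIN_CASES}) = {prob_at_least(MIN_CASES, mean):.3f}")

pooled_mean = sum(exposed.values()) * INCIDENCE
pooled_p = prob_at_least(MIN_CASES, pooled_mean)
print(f"network: expected {pooled_mean:.1f} cases, P(>= {MIN_CASES}) = {pooled_p:.3f}")
```

Under these assumptions, even the largest organization (8,000 exposed patients) has under a 5% chance of seeing three cases, while the combined network reaches that count with roughly 58% probability. A case threshold is only a rough proxy for statistical power, but the effect of combining populations is clear.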

The business case extends beyond compliance. Pharmaceutical companies can collaborate on post-market surveillance without revealing competitive intelligence about patient demographics, prescribing patterns, or market penetration. Regulators can access more comprehensive safety intelligence without taking custody of industry data and the liability that comes with it. Healthcare providers can contribute to drug safety research under simpler data use agreements than centralized data pooling would require.

As detailed in “How Pharma Leaders Are Using AI in Pharmacovigilance to Improve Patient Safety,” advanced AI techniques are already transforming safety monitoring. Federated learning takes this evolution further by making collaborative AI both technically feasible and legally defensible.

The technical architecture of federated learning in pharmacovigilance involves several critical components working in concert to maintain privacy while maximizing model performance.

The architecture rests on a secure aggregation protocol, but every collaboration begins from a shared baseline. Participating organizations, whether pharmaceutical companies, healthcare systems, or regulatory bodies, initialize with a common model architecture. This base model, designed for a specific pharmacovigilance task such as adverse event classification or signal prioritization, is distributed to all participants.

Each organization then performs local training using its proprietary adverse event databases, electronic health records, or spontaneous reporting systems. Training occurs entirely within their secure infrastructure. Once local training completes for a given round, only the model updates are encrypted and transmitted to a central aggregation server.

The aggregation server, which may be operated by a neutral third party or consortium, combines these updates using algorithms like Federated Averaging (FedAvg), which averages participants' parameters weighted by their local sample sizes. When paired with a secure aggregation protocol, this process produces an improved global model without the server ever seeing individual updates in plaintext; it learns only their combined result. Advanced implementations also incorporate differential privacy: each update is clipped and calibrated noise is added before aggregation, placing a mathematical bound on how much the shared parameters can reveal about any individual patient record.
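The clip-and-noise step can be sketched in a few lines. This is an illustration in the spirit of differentially private FedAvg variants, assuming a distributed-noise split in which each client adds a share of the Gaussian noise and the server sees only the sum (so it presupposes secure aggregation). The names `privatize`, `max_norm`, and `noise_multiplier` are illustrative assumptions, the updates are made up, and turning `noise_multiplier` into a concrete (ε, δ) guarantee requires a privacy accountant, which is not shown.

```python
from __future__ import annotations

import math
import random


def clip(update: list[float], max_norm: float) -> list[float]:
    """Scale an update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize(update: list[float], max_norm: float, noise_multiplier: float,
              num_clients: int, rng: random.Random) -> list[float]:
    """Client side: clip, then add this client's share of the Gaussian noise.

    Each of K clients adds N(0, sigma^2 / K) per coordinate, so the summed
    noise is N(0, sigma^2) with sigma = noise_multiplier * max_norm. This
    split only protects individual contributions if the server sees the
    sum alone, i.e. when it is combined with secure aggregation.
    """
    std = noise_multiplier * max_norm / math.sqrt(num_clients)
    return [u + rng.gauss(0.0, std) for u in clip(update, max_norm)]


def aggregate(noisy_updates: list[list[float]]) -> list[float]:
    """Server side: coordinate-wise mean over a fixed number of clients."""
    k = len(noisy_updates)
    return [sum(col) / k for col in zip(*noisy_updates)]


rng = random.Random(0)
raw_updates = [[0.9, -2.4, 0.3], [0.2, 0.1, -0.5], [5.0, 5.0, 5.0]]  # hypothetical weight deltas
noisy = [privatize(u, max_norm=1.0, noise_multiplier=1.1,
                   num_clients=len(raw_updates), rng=rng) for u in raw_updates]
print(aggregate(noisy))
```

Clipping bounds how far any one organization's update, and therefore any record inside it, can move the global model; the noise then masks what remains. Here the third hypothetical update, with a norm of about 8.7, is scaled down to norm 1 before it can dominate the average.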

Secure multi-party computation further enhances privacy. Participants can jointly compute aggregate statistics—such as the prevalence of a specific adverse event across all datasets—without any single party learning the contribution from others. Homomorphic encryption allows computations on encrypted data, ensuring that even the aggregation server cannot extract sensitive information.
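A toy version of the prevalence example can be built from additive secret sharing, one of the simplest secure multi-party computation techniques. The sketch assumes three hypothetical organizations with made-up counts, honest-but-curious participants, and private channels between them, and it simulates every party in one process. It does not show homomorphic encryption or production secure-aggregation protocols that tolerate participant dropouts.

```python
from __future__ import annotations

import secrets

MODULUS = 2**61 - 1  # arithmetic is mod this prime; it must exceed any possible total


def share(value: int, num_parties: int) -> list[int]:
    """Split value into random additive shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_values: list[int]) -> int:
    """Simulate n parties computing the sum of their private values."""
    n = len(private_values)
    # Row i holds party i's shares; party i privately sends share j to party j.
    # Any single share is uniformly random and reveals nothing on its own.
    all_shares = [share(v, n) for v in private_values]
    # Party j publishes only the sum of the shares it received (column j).
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS for j in range(n)]
    # Summing the published partial sums yields the total of all inputs.
    return sum(partial_sums) % MODULUS


case_counts = {"org_a": 3, "org_b": 0, "org_c": 7}            # hypothetical local case counts
exposed = {"org_a": 12_000, "org_b": 9_500, "org_c": 20_500}  # hypothetical exposed patients
total_cases = secure_sum(list(case_counts.values()))
total_exposed = secure_sum(list(exposed.values()))
print(total_cases, total_exposed, total_cases / total_exposed)
```

Each organization learns the network-wide prevalence, but no single party sees another's count unless all the other parties collude.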

Insight: The success of federated learning in pharmacovigilance depends as much on governance frameworks as technical protocols. Establishing clear data usage policies, audit mechanisms, and equitable benefit-sharing arrangements is essential for sustained collaboration.

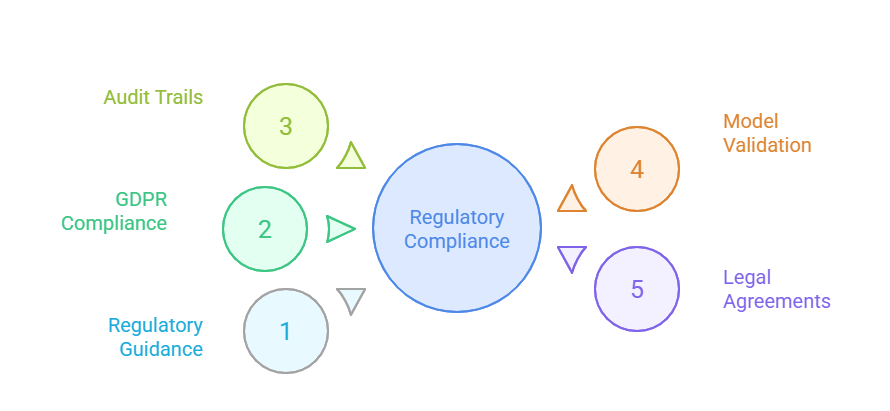

Implementing federated learning in pharmacovigilance requires navigating complex regulatory landscapes. While the approach inherently addresses privacy concerns, regulatory bodies are still developing specific guidance for collaborative AI systems.

The FDA’s guidance on artificial intelligence and machine learning in drug and biological products acknowledges the potential for collaborative model development but emphasizes the need for validation, transparency, and accountability. Federated learning systems must demonstrate that models trained across distributed datasets meet the same performance and reliability standards as those trained on centralized data.

In the European Union, where the European Medicines Agency (EMA) coordinates pharmacovigilance, the General Data Protection Regulation (GDPR) is comparatively favorable to federated approaches. Because personal data never leaves the originating organization, many data transfer restrictions simply do not come into play, although local training still counts as processing that needs a lawful basis. Organizations must also document how model updates might indirectly reveal information about their datasets and implement appropriate safeguards.
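Why updates can leak information is easiest to see with a single record. For a logistic-regression model, the log-loss gradient with respect to the weights is (p − y)·x, a scaled copy of the record's features, and the bias gradient is the scale factor (p − y) itself. The sketch below uses a hypothetical three-feature record and shows that dividing one by the other recovers the features. Attacks on larger models and batched updates are more involved; this only illustrates why clipping, noise, and secure aggregation matter.

```python
from __future__ import annotations

import math


def single_record_gradient(w: list[float], b: float, x: list[float], y: int):
    """Log-loss gradient for one record under a logistic model: (grad_w, grad_b)."""
    p = 1.0 / (1.0 + math.exp(-(b + sum(wi * xi for wi, xi in zip(w, x)))))
    residual = p - y  # nonzero because p is strictly between 0 and 1
    return [residual * xi for xi in x], residual


# Hypothetical record: [age / 100, number of concomitant drugs, prior-reaction flag]
record = [0.67, 4.0, 1.0]
grad_w, grad_b = single_record_gradient(w=[0.1, -0.2, 0.3], b=0.0, x=record, y=1)

# An observer who sees only this update can divide out the shared residual.
reconstructed = [g / grad_b for g in grad_w]
print(reconstructed)
```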

Audit trails become particularly critical. Regulatory submissions relying on federated learning models must provide transparent documentation of all participating organizations, data sources, model versions, and aggregation methodologies. Reproducibility requirements may necessitate archiving model checkpoints and update histories throughout the training process.
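One lightweight way to make update histories auditable is to log, for every round, a content hash of the aggregated checkpoint together with the participants and aggregation method, and to chain each entry to the previous one so that later tampering is detectable. The record layout below is a hypothetical example, not a regulatory format, and the checkpoints are made up; it uses only Python's standard `hashlib`, `json`, and `datetime` modules.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def sha256_json(obj) -> str:
    """SHA-256 over a deterministic JSON encoding."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


def audit_entry(prev_hash: str, round_no: int, weights: list[float],
                participants: list[str], method: str) -> dict:
    entry = {
        "round": round_no,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "participants": sorted(participants),
        "aggregation": method,
        "checkpoint_sha256": sha256_json(weights),
        "prev_entry_sha256": prev_hash,
    }
    # Hash the entry itself so the next entry can chain to it.
    entry["entry_sha256"] = sha256_json(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every entry hash and check each link to its predecessor."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_sha256"}
        if entry["prev_entry_sha256"] != prev or entry["entry_sha256"] != sha256_json(body):
            return False
        prev = entry["entry_sha256"]
    return True


log, prev = [], GENESIS
for rnd, w in enumerate([[0.0, 0.1], [0.05, 0.2]], start=1):  # hypothetical checkpoints
    entry = audit_entry(prev, rnd, w, ["org_b", "org_a"], "FedAvg")
    log.append(entry)
    prev = entry["entry_sha256"]
print(verify_chain(log))
```

Archiving the checkpoints themselves alongside this log lets an auditor confirm that a stored model matches the hash recorded for its round.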

Validation strategies must also evolve. Traditional model validation assumes centralized access to a held-out evaluation dataset. In federated settings, validation instead requires distributed protocols: each participant validates the model on its local data, and the results are aggregated to demonstrate overall performance across the collaborative network.
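A simple version of such a protocol has each participant score the global model on its own held-out cases and share only confusion-matrix counts; summing the counts gives network-wide sensitivity and specificity. The sketch uses hypothetical counts for three organizations. Summing counts, rather than averaging each site's percentages, keeps a small site from carrying the same weight as a large one.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int
    fp: int
    tn: int
    fn: int

    def __add__(self, other: "Confusion") -> "Confusion":
        return Confusion(self.tp + other.tp, self.fp + other.fp,
                         self.tn + other.tn, self.fn + other.fn)

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


# Hypothetical counts each site computes locally on its held-out data.
site_results = {
    "org_a": Confusion(tp=90, fp=40, tn=860, fn=10),
    "org_b": Confusion(tp=4, fp=5, tn=85, fn=6),
    "org_c": Confusion(tp=45, fp=20, tn=420, fn=15),
}

network = sum(site_results.values(), Confusion(0, 0, 0, 0))
macro_sensitivity = sum(c.sensitivity for c in site_results.values()) / len(site_results)
print(f"pooled sensitivity {network.sensitivity:.3f}, specificity {network.specificity:.3f}")
print(f"unweighted mean of site sensitivities {macro_sensitivity:.3f}")
for org, c in site_results.items():
    print(f"{org}: sensitivity {c.sensitivity:.3f}")
```

Per-site figures are still worth reporting next to the pooled ones: the small hypothetical site here detects far fewer true cases than the others, which is exactly the kind of heterogeneity a single network-wide number can hide.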

Federated learning is poised to fundamentally reshape how the pharmaceutical industry approaches drug safety monitoring. Several emerging trends indicate the trajectory of adoption and innovation.

Consortium models are gaining traction. Organizations like the Observational Health Data Sciences and Informatics (OHDSI) collaborative are exploring federated architectures for multi-institutional research. As trust in the technology grows, we can expect industry-wide initiatives where competing pharmaceutical companies contribute to shared safety surveillance systems while maintaining competitive separation.

Integration with real-world evidence platforms will accelerate. Electronic health record systems, claims databases, and wearable device data streams represent vast, underutilized resources for pharmacovigilance. Federated learning lets these data sources contribute to safety monitoring without the often impractical step of centralizing diverse, sensitive datasets.

Regulatory submissions incorporating federated learning evidence will become routine. As validation methodologies mature and regulatory precedents are established, safety dossiers will increasingly cite collaborative AI analyses. This shift could significantly reduce the time between adverse event occurrence and regulatory action.

Cross-border collaboration will expand. Federated learning naturally accommodates data localization requirements, allowing participation from jurisdictions with strict data export restrictions. This enables truly global safety monitoring systems that respect national sovereignty over health data.

The technology will also drive innovation in related areas. Privacy-preserving record linkage techniques will enable federated learning across datasets that don’t share common patient identifiers. Federated transfer learning will allow knowledge gained from one therapeutic area to enhance models in related domains, multiplying the value of collaborative research.

Pro Tip: Organizations should begin building internal capabilities now. Understanding federated learning architectures, training data science teams, and establishing legal frameworks for participation will provide competitive advantages as industry standards emerge.

Federated learning represents a transformative approach to pharmacovigilance, resolving the longstanding tension between collaborative intelligence and data privacy. By enabling organizations to jointly train AI models without sharing sensitive patient information, this technology unlocks the full potential of distributed healthcare data for drug safety monitoring.

The implications extend beyond technical innovation. Federated learning facilitates a cultural shift toward collaborative research while respecting competitive boundaries. It gives pharmaceutical companies, healthcare systems, and regulatory agencies a practical pathway to work together on patient safety initiatives, with lighter data sharing agreements and fewer privacy compromises than centralized data pooling demands.

As regulatory frameworks mature and successful implementations demonstrate value, federated learning will transition from experimental technology to standard practice. Organizations that invest in understanding these systems, building appropriate technical infrastructure, and establishing collaborative partnerships will be best positioned to lead in the next generation of pharmacovigilance.